Google Webmaster Tools Guide


BEST USE CASES FOR GOOGLE WEBMASTER TOOLS

TABLE OF CONTENTS

- 1. What Are The Not-Provided Keywords That Are Sending Traffic to Your Site?

- 2. View the Exact Backlinks That Google Has About Your Site

- 3. Disavow Unnatural Links & Recover Your Site

- 4. How to Get Instantly Notified When Google Penalizes Your Site

- 5. How to Get Instantly Notified When Google Finds Issues With Your Site

- 6. Understand How Google Sees And Ranks Your Site

- 7. Find Broken Pages on Your Site

- 8. Find Duplicate Pages on Your Site

- 9. Set Up Your Structured Data Markup And Insights

- 10. Check The Google+ Author Stats

- 11. How to Link GWT With Google Analytics And Get Better Insights

- 12. How to Set Up Your Preferred Domain For The Google SERPs

- 13. How to Speed up a Google Disavow Recovery Using The Remove URLs feature

- 14. How to Block or Remove Unwanted/Obsolete Pages from the Google Index

- 15. How to Change a Site’s Domain And Maintain Its Google Rankings

1. What Are The Not-Provided Keywords That Are Sending Traffic to Your Site?

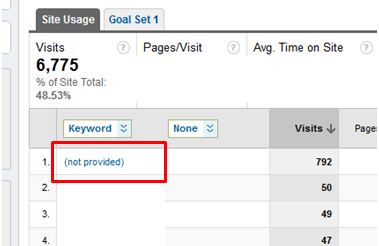

A while back, Google decided it was not happy with people having access to keyword data, so it flipped a switch and left everybody in the dark. It encrypted all searches, and all of a sudden the keyword data that had guided SEO specialists and marketers like a shining light faded into the distance.

The organic analytics data people were accustomed to receiving doesn’t exist anymore, but there are still ways to figure out which keywords are most important and which are most profitable. This information is still very valuable, and the only viable way to get it is through Webmaster Tools!

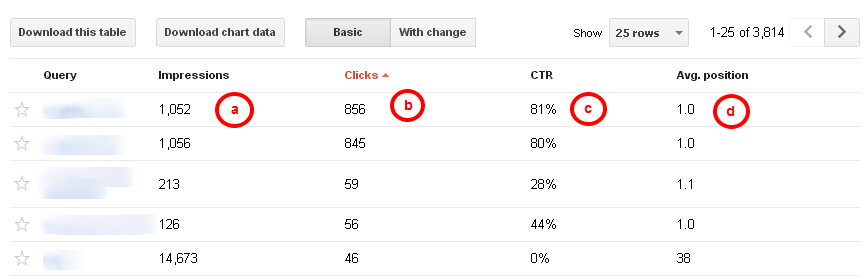

Of course, it’s not the same as it used to be. You can only view:

- “Impressions”: the number of times your pages have appeared in Google’s search results

- “Clicks”: an estimate of the number of times someone clicked your listing in the search results for a particular query

- “CTR” (clickthrough rate): the percentage of impressions that resulted in a click through to your site

- “Average position”: a rough figure calculated by Google that represents the average top position of your site in search results
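The metrics relate in a simple way: CTR is clicks divided by impressions. The Python sketch below works this out for a few made-up query rows. The `QueryRow` type is hypothetical, and combining average positions across queries by weighting each one by its impressions is an assumption for illustration, not how Webmaster Tools computes its own totals.

```python
from dataclasses import dataclass


@dataclass
class QueryRow:
    """One hypothetical row of Search Queries data."""
    query: str
    impressions: int
    clicks: int
    avg_position: float


def ctr(row: QueryRow) -> float:
    """Clickthrough rate: the share of impressions that led to a click."""
    return row.clicks / row.impressions if row.impressions else 0.0


def summarize(rows: list[QueryRow]) -> tuple[float, float]:
    """Return (overall CTR, impression-weighted average position).

    Weighting each query's average position by its impressions is an
    assumption made for this sketch.
    """
    total_impressions = sum(r.impressions for r in rows)
    if total_impressions == 0:
        return 0.0, 0.0
    total_clicks = sum(r.clicks for r in rows)
    weighted_position = sum(r.avg_position * r.impressions for r in rows)
    return total_clicks / total_impressions, weighted_position / total_impressions


rows = [
    QueryRow("blue widgets", impressions=1000, clicks=50, avg_position=3.2),
    QueryRow("buy blue widgets", impressions=200, clicks=20, avg_position=1.5),
]
for row in rows:
    print(f"{row.query}: CTR {ctr(row):.1%}")
overall_ctr, avg_pos = summarize(rows)
print(f"overall CTR {overall_ctr:.2%}, average position {avg_pos:.2f}")
```

Weighting by impressions keeps a rarely shown query from pulling the combined position as much as a frequently shown one.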

While you’re logged into GWT, go to Search Traffic -> Search Queries to learn more about the Google search queries that display URLs from your website, and to see which pages on your site appear most often in search results, along with their average position.

This is the only place where you can still see the keywords that are sending traffic your way, at least until Google removes this one too. I suggest you take advantage of this free and unique insight from Google.

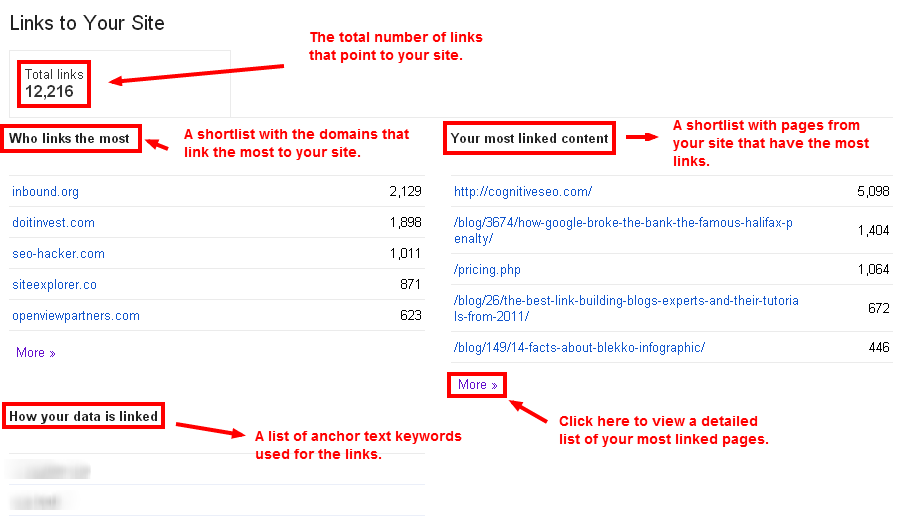

View the Exact Backlinks That Google Has About Your Site

To see the links pointing to your site, you can simply type “link:www.example.com” in the Google search bar, and it will display some of them. But for a more detailed view of your link structure on the web, use the Webmaster Tools feature called “Links to your site”. It shows the total number of links in the left corner of the page, a short list of who links to you the most, and a list of your most linked pages (these lists can be expanded by clicking “More”).

These lists are created while Googlebot crawls and indexes the web, and they also include intermediate links through which users reach your site. Besides this information, you get a list of the most used anchor texts.

All the data from the “Links to Your Site” section can be downloaded as a CSV or Google Docs file and used to remove or disavow unnatural links. You can also use it to see which links have been created lately and on which dates (clicking “Download latest links” gives you a list of the latest links and the dates on which they were added).

The detailed list of domains that link the most to your site can show up to 1,000 elements which can be ordered either by number of links or by number of linked pages. For a more comprehensive report on your backlinks which includes a historical, webpage type and site authority analysis, you could also use the tool designed by cognitiveSEO.
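Once you’ve downloaded the links data, ranking linking domains by number of links or by number of linked pages is a simple aggregation. This Python sketch assumes a hypothetical two-column CSV with `source_url` and `target_url` headers; the real export’s column names and layout may differ, so adjust the field names to match your file.

```python
import csv
import io
from collections import defaultdict
from urllib.parse import urlparse


def domain_stats(csv_text: str) -> dict[str, tuple[int, int]]:
    """Map each linking domain to (number of links, number of distinct linked pages).

    Assumes hypothetical 'source_url' and 'target_url' columns.
    """
    link_counts: dict[str, int] = defaultdict(int)
    linked_pages: dict[str, set[str]] = defaultdict(set)
    for row in csv.DictReader(io.StringIO(csv_text)):
        domain = urlparse(row["source_url"]).hostname or ""
        link_counts[domain] += 1
        linked_pages[domain].add(row["target_url"])
    return {d: (link_counts[d], len(linked_pages[d])) for d in link_counts}


def top_domains(stats, by="links", limit=1000):
    """Order domains by link count (by='links') or linked-page count (by='pages')."""
    index = 0 if by == "links" else 1
    return sorted(stats, key=lambda d: stats[d][index], reverse=True)[:limit]


export = """source_url,target_url
http://sitewide.example/page1,https://www.example.com/
http://sitewide.example/page2,https://www.example.com/
http://sitewide.example/page3,https://www.example.com/
http://sitewide.example/page4,https://www.example.com/
http://blog.example/post,https://www.example.com/about
http://blog.example/post,https://www.example.com/pricing
http://blog.example/other,https://www.example.com/contact
"""
stats = domain_stats(export)
print(top_domains(stats, by="links"))  # sitewide.example first (4 links)
print(top_domains(stats, by="pages"))  # blog.example first (3 linked pages)
```

The `limit=1000` default simply mirrors the report size mentioned above. A domain with many links but only one linked page, like the sample `sitewide.example`, is the kind of pattern worth a closer look.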

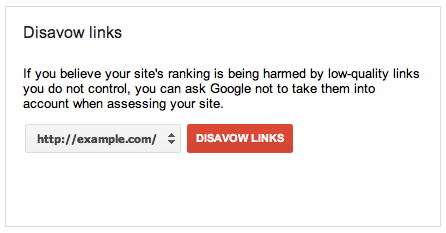

Disavow Unnatural Links & Recover Your Site

When Google detects that you may have built bad links, it notifies you in your GWT account of any manual actions taken against your site for violating its guidelines, and you will want to react as quickly as possible to repair the situation. Even after you’ve put considerable effort into removing the harmful links, some may remain that you couldn’t get removed. (Removal might not even be necessary: according to many people’s recovery stories, a correctly made disavow alone saved their sites without any of the links actually being removed.) These weeds can be dealt with by disavowing them. Simply put, disavowing asks Google to ignore those specific links when it assesses your site.

Google classifies links as unnatural when it thinks they were created with the sole purpose of influencing rankings rather than organically. They can be any kind of link. A few examples shed some light on how unnatural links violate Google’s guidelines and why disavowing them helps:

- Inserting links into pages that have little or no relevance to your site

- Adding links to low-quality web directories or bookmarking sites only to influence rankings

- Spamming links embedded in a footer so that they appear on every page

Since the newest Penguin update, the algorithm has been punishing sites left and right for low-quality “unnatural links”. Within GWT, the “Links to your site” feature shows you the links Google has found pointing to your site. You can download this list, investigate the potential problems thoroughly, and find the links you may have been penalized for. Then log into Google Webmaster Tools and use its Disavow Links feature.

First, you’ll see a simple menu where you pick the site you want to work on from a drop-down list. This takes you to a second screen where you can upload the file containing the links you wish to disavow.
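The file you upload is plain text: one entry per line, either a full URL or a `domain:` line that covers a whole domain, and lines starting with `#` are treated as comments. Below is a minimal Python sketch that builds such a file from lists you have already reviewed; the function name and the example domains are illustrative assumptions.

```python
def build_disavow(domains, urls, note=None):
    """Return disavow-file text: optional comment, then domain: entries, then URLs.

    Duplicates are dropped and entries sorted so the file is easy to review.
    """
    lines = []
    if note:
        lines.append(f"# {note}")
    lines.extend(f"domain:{d}" for d in sorted(set(domains)))
    lines.extend(sorted(set(urls)))
    return "\n".join(lines) + "\n"


text = build_disavow(
    domains=["spammy-directory.example", "link-farm.example", "link-farm.example"],
    urls=["http://bookmarks.example/our-site"],
    note="Owners contacted, no response",
)
print(text)
```

Save the result as a UTF-8 .txt file before uploading it. Listing whole domains is usually simpler than chasing individual URLs when every link from a site is unwanted.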

Spotting unnatural links could be a tedious process and going through tens of thousands of links to spot the unnatural ones could take a really long time.

Third-party tools can guide you and speed up the process, helping to make sure the disavow succeeds. You need a tool that points you to the links that should be disavowed and that is easy to work with and efficient. The Unnatural Link Detection Tool by cognitiveSEO can do all that and more: it detects which inbound links are interpreted as unnatural and also highlights those that have a suspect profile.

One final thought: use this feature with care, because if you give it imprecise data, it can hurt your site’s ranking in search results.

You should also be aware that your rankings might not recover, even if the disavow is successful. A successful disavow removes a block on growth: it is as if you never had the links you disavowed. They helped you for a while, but now they don’t anymore. So you need to start building new, high-quality links to your site while you are doing the disavow.

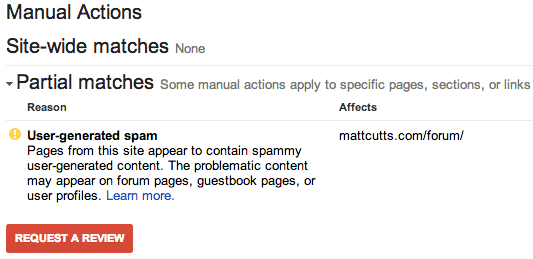

How to Get Instantly Notified When Google Penalizes Your Site

Most actions taken against your site are automated and driven by an algorithm, but sometimes the red flags your site raises can lead Google to take manual action. This is one notification you should always watch for, because it means you’re in trouble! Once you get it, carefully read the guidelines on linking, work out what you have done wrong, and take immediate action.

While Google is, in most cases, very transparent about its algorithm updates, you can always miss something and, as a result, suddenly find yourself on the wrong side of Google’s law. We cannot say for sure on what grounds Google issues a penalty, but we can give you an idea of the usual ingredients. Repairing all those errors can be a handful. Here are a few reasons commonly attributed to Google penalizing a site:

- Buying links. Without question this is one of the worst actions that can definitely harm your site's performance in search results. Nobody talks about it, nobody admits to actually performing such a deed, but Google sees this malicious intent of influencing its rankings. So if you’re paying for links, they won’t be helpful for long.

- Page footer links. This is mainly a method used by web designers to leave their “signature” on a site, but it can also be used with an ill-natured intent to manipulate Google’s SERPs.

- Keyword stuffing. There are a lot of rules when it comes to the number of keywords you put in your content, and one thing is for sure - being too spammy with your keywords makes Google angry.

- 404 pages. This reason pretty much explains itself. People should not click on indexed pages and find errors instead of the content they were looking for.

- Association with dubious sites. As a general rule, Google doesn’t like it when you associate yourself with suspicious sites.

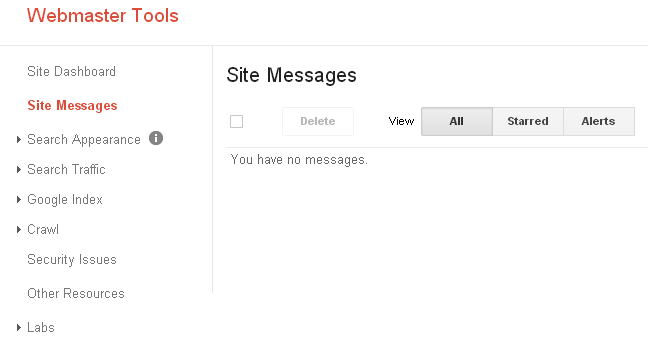

How to Get Instantly Notified When Google Finds Issues With Your Site

One important fact about this tool: if Google detects a problem with your site, it sends a message to your Webmaster Tools account. These notifications can be warnings about various issues or simply informative messages. You can see why every website administrator should check their messages once in a while.

Here are some of the possible issues that Google might alert you about:

- Robots.txt problems - Problems detected by GoogleBot while accessing the robots.txt file will be reported

- Your site is down - You will receive a message if Google detects that your site can’t be accessed because it is down

- Crawl errors - You may receive Site/URL errors if GoogleBot encounters problems crawling your site. There may be problems accessing the pages or you may have blocked content

- Big traffic changes for a URL or the entire site - Sudden traffic changes may point to a site overload, or they may result from difficulties Googlebot encountered while crawling your site.
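Google doesn’t publish the exact thresholds behind its traffic alerts, but you can run a similar check on your own analytics data. This hypothetical Python sketch compares two periods of per-URL visits and flags any URL whose traffic moved by at least 50%; the threshold and the sample numbers are assumptions, not Google’s rules.

```python
def flag_traffic_changes(previous, current, threshold=0.5):
    """Return {url: relative change} for URLs whose traffic changed by >= threshold.

    A URL with no traffic in the previous period but some now is reported as inf.
    """
    flagged = {}
    for url in sorted(previous.keys() | current.keys()):
        before = previous.get(url, 0)
        after = current.get(url, 0)
        if before == 0:
            if after > 0:
                flagged[url] = float("inf")
            continue
        change = (after - before) / before
        if abs(change) >= threshold:
            flagged[url] = change
    return flagged


last_week = {"/": 1200, "/pricing": 300, "/blog/old-post": 80}
this_week = {"/": 1250, "/pricing": 90, "/blog/new-post": 40}
for url, change in flag_traffic_changes(last_week, this_week).items():
    print(url, "new traffic" if change == float("inf") else f"{change:+.0%}")
```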

The first thing you should do when you set up your GWT account is check that the email address listed there is one you use frequently, so that Google can send you these important notifications. Google itself recommends this as the first step after you’ve set up your account and verified your site.

We highly recommend enabling email notifications; otherwise you won’t receive Google’s messages about potential issues.

Understand How Google Sees And Ranks Your Site

Google takes every detail into account, from your website’s visual appearance, inbound links, sitelinks, and rich snippets to how well Googlebot crawls it. In Webmaster Tools you can take care of the aesthetic part by monitoring and improving how your site appears in search results. A couple of tools were created mainly for that purpose:

- You can add structured data to help Google better understand the content on your site and make it easier for the algorithm to decide which pages to show with rich snippets. There is also the Data Highlighter, which lets you do the same thing in a point-and-click manner.

- A tool for HTML improvements. Here you can address issues such as duplicate content and missing title tags, which may improve your website’s performance and its user experience.

- A feature which helps you show Google what sitelinks are to be shown under your site, when users search for your brand keywords in the search engine. This way, users will have the most important pages from your site at hand.

Another feature that monitors your performance in the search results is the Author Stats. Once you’ve connected your Google+ account with the content that you write, every time a page written by you is shown in the search results, it will display a picture of you which stands as a link to the profile of the author. That way you give more credibility to your content, and it links all the content written by you under one identity.

Find Broken Pages on Your Site

Another valuable feature for website owners and developers is Crawl Errors. A war commander cannot be everywhere on the battlefield at once, and the same goes for a site. The Crawl Errors page gives you an overview of the links on your site that Google couldn’t access or that returned an HTTP error code. You can see exactly which URLs are broken and view specific details about each error.

To be more specific about the problems you may encounter, there are two types:

- Errors that are site related: Here you’re going to find a list with all the issues that GoogleBot encountered while trying to access your site

- Errors that are URL related: Here you’re going to find a list with all the issues that are related to a specific URL. These things might be a little bit more complicated. You can filter based on three Googlebot crawlers: Web/Smartphone/Feature Phone

Besides listing the pages Google had a hard time crawling in order of importance (the most important URLs are at the top), the report also shows the problem it encountered. Click a page in the list to see a more detailed description of the problem.

After you’ve fixed the problem with a broken URL, you can choose to hide it from the list by checking the box next to it and clicking “Mark as fixed”. If the issue remains unsolved, the URL will reappear in the list the next time Googlebot crawls the page.
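To spot-check URLs yourself between Googlebot visits, you can request them and sort the results roughly the way the report does: site-level failures (the server can’t be reached at all) versus URL-level HTTP errors. Below is a minimal Python sketch using only the standard library. `fetch_status` makes live HEAD requests (some servers answer HEAD differently from GET), and the URLs and statuses in the sample are placeholders.

```python
import urllib.error
import urllib.request
from http import HTTPStatus


def fetch_status(url, timeout=10):
    """Return the final HTTP status for url, or None if the server can't be reached.

    urlopen follows redirects, so a redirected URL reports its destination's status.
    """
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:  # 4xx/5xx responses arrive as exceptions
        return err.code
    except OSError:  # DNS failures, refused connections, timeouts
        return None


def describe(code):
    """Render a status code with its standard reason phrase, if it has one."""
    try:
        return f"{code} {HTTPStatus(code).phrase}"
    except ValueError:
        return str(code)


def classify(results):
    """Split {url: status or None} into site errors and URL errors."""
    site_errors = [url for url, code in results.items() if code is None]
    url_errors = {url: describe(code) for url, code in results.items()
                  if code is not None and code >= 400}
    return site_errors, url_errors


# Sample results standing in for fetch_status() calls on a hypothetical site.
results = {
    "https://www.example.com/": 200,
    "https://www.example.com/old-page": 404,
    "https://www.example.com/search": 500,
    "https://shop.example.com/": None,
}
site_errors, url_errors = classify(results)
print("Site errors:", site_errors)
for url, problem in url_errors.items():
    print(url, "->", problem)
```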

Find Duplicate Pages on Your Site

Even if you think you don’t need it, this feature gets used regularly when content is duplicated, within a domain or across domains. Whether the duplication is innocent or a deliberate attempt to manipulate search engine rankings, Google will make the appropriate adjustments if it perceives your duplicated content as deceptive. The problem is that when Google sees your content on multiple pages, it doesn’t know which one to display. This gets very tricky when different people start linking to different copies of the content. And Google considers that it’s not their problem, it’s yours! It’s a messy issue, and it can be penalized swiftly.

In most cases, the duplicate content you’ll find was added to the site unintentionally. To find it, use the HTML Improvements feature in the Search Appearance category. Its Duplicate title tags report gives you a simple summary of pages that share the same title tag; click the link to see a detailed list of those pages.

Think of it like this: you’re at a crossroads with two or more roads, and they all lead to the same destination. When it comes to content, you might not be bothered as long as you get what you were searching for, but for Google the situation is very problematic. And this ain’t just a hypothetical situation: it is quite common for an article to appear twice on a site under different URLs. You’re also hurting yourself by splitting the traffic two ways instead of concentrating it on one URL, which makes it hard to rank high for a given keyword.
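The Duplicate title tags report essentially groups pages by their `<title>`. If you want to run the same check on pages you’ve saved locally, for instance before launching a redesign, here is a hypothetical Python sketch using the standard library’s HTML parser. Treating titles that differ only in whitespace as duplicates is an assumption of the sketch.

```python
from collections import defaultdict
from html.parser import HTMLParser


class TitleParser(HTMLParser):
    """Collect the text inside the page's <title> element."""

    def __init__(self):
        super().__init__()
        self.in_title = False
        self.title = ""

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self.in_title = True

    def handle_endtag(self, tag):
        if tag == "title":
            self.in_title = False

    def handle_data(self, data):
        if self.in_title:
            self.title += data


def page_title(html):
    parser = TitleParser()
    parser.feed(html)
    return " ".join(parser.title.split())  # collapse runs of whitespace


def duplicate_titles(pages):
    """Return {title: [urls]} for titles shared by more than one page."""
    groups = defaultdict(list)
    for url, html in pages.items():
        title = page_title(html)
        if title:  # pages with missing titles are a separate issue
            groups[title].append(url)
    return {title: urls for title, urls in groups.items() if len(urls) > 1}


pages = {
    "/widgets": "<html><head><title>Blue Widgets</title></head></html>",
    "/widgets?sort=price": "<title>Blue  Widgets</title>",
    "/gadgets": "<title>Red Gadgets</title>",
    "/draft": "<p>No title here</p>",
}
print(duplicate_titles(pages))
```

The sample shows the common case described above: the same article reachable under two URLs, here a page and its sorted variant.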

Set Up Your Structured Data Markup And Insights

As you can imagine, search engines have a hard time deciphering the content of a website, so sometimes they can use some human help to understand what is logical and obvious to a human being. You can add structured data to the pages on your site via microdata, microformats, and RDFa to create rich snippets (those small slices of information that show up in search results). You’ve probably already seen them in various forms: average reviews, ratings, price ranges, song lists with links to play each song, and so on.

Creating all this markup can be pretty overwhelming. That’s why Google offers the Structured Data Markup Helper. Its point-and-click style takes much of the pain out of adding structured data markup.

On the main page you’ll find two tabs: Website and Email. You can choose the type of website data to tag: articles, events, local businesses, movies, products, restaurants, and software applications. After you hit the “Start tagging” button, you can start selecting content by hovering over and highlighting the desired segment.
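To give an idea of what this kind of markup looks like, here is a hypothetical Python sketch that writes microdata for a product with an average rating, using the schema.org `Product` and `AggregateRating` types. The product details are placeholders, and real markup should be validated with Google’s structured data testing tool before you rely on it.

```python
from html import escape


def product_microdata(name, rating, review_count):
    """Return an HTML snippet marking up a product and its average rating with microdata."""
    return (
        '<div itemscope itemtype="https://schema.org/Product">\n'
        f'  <span itemprop="name">{escape(name)}</span>\n'
        '  <div itemprop="aggregateRating" itemscope '
        'itemtype="https://schema.org/AggregateRating">\n'
        f'    Rated <span itemprop="ratingValue">{rating}</span>/5\n'
        f'    based on <span itemprop="reviewCount">{review_count}</span> reviews\n'
        '  </div>\n'
        '</div>'
    )


print(product_microdata("Blue Widget & Stand", 4.4, 89))
```

Escaping the product name keeps characters such as `&` from breaking the surrounding HTML.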

PageSpeed Insights, found in the Other Resources menu, is another tool: it runs a quick audit of your page and gives you tips on how to improve its rendering. It only gives you insights for the parts of the page that are not network-related (since your own network connection can affect a page’s performance). You’ll see suggestions about HTML structure, CSS, JavaScript, and images, and each suggestion comes with a priority indicator, so you’ll know which matters are most pressing.

These features are meant to ease the jobs of webmasters and developers and give them some quick insights on how to optimize their sites, if needed. You’ll find these nifty features and many more in the Other Resources tab.

Check The Google+ Author Stats

First of all, let me say that this feature started out as an underdog and wasn’t a very popular tool. I feel I must start by explaining Google+ Authorship: people can link their Google+ profile picture to any content they have created, on any site. What are the benefits? For starters, when it’s displayed in search results, your article gains credibility and stands out. A second reason is that, by doing this, you’ll get a higher ranking. And last but not least, if someone sees an article written by you on another site, it will be easier for them to reach more of your content on your own website.

You’ll find Author Stats in GWT, as a Labs feature. Many users just happen to discover this by chance and they immediately get hooked on it. It’s only available to those that claimed their content through the help of Google Authorship, and it primarily quantifies and displays the times your content appeared in the SERPs and the number of times it’s clicked. With this tool you can actually see which content is attributed to you by Google, since you have all the data right there, for every single page!

You can access all the data about organic searches for the content you have authorship on, and toy around with all the results, metrics, and filters. The data can include YouTube videos, posts, articles, and images.

The data in this feature uses measurements and filters very similar to those on the Search Queries page: Impressions / Clicks / CTR / Average Position. There are also “Filters”, which display your reports based on your selections: web, image, mobile, video, location, and traffic.

Finally, it’s worth mentioning that Author Stats reports cover at most the last 90 days, and you can customize the period within that range.

How to Link GWT With Google Analytics And Get Better Insights

Since Google pulled the plug on providing keyword data through Google Analytics, people got creative and found another source of details about search behaviour. The query data GWT provides is limited, but if you import it into Google Analytics and sort it by the same metrics, you’ll get valuable information for keyword research.

To make this possible, the nice people at Google tweaked some parameters so that data from the two tools is easier to correlate. You just need to link your GWT site to your GA property and you’re ready to go. You can then see Webmaster Tools data in your Analytics reports.

If your GWT data doesn’t match your GA data, there are a few reasons why. The Webmaster Tools statistics differ a little because GWT has to account for duplicate content, which alters that information. Google Analytics, on the other hand, only receives information from users who have JavaScript enabled.

How to Setup Your Preferred Domain For The Google SERPs

When your site has two versions of its URLs (www and non-www), you may have links pointing to both. A dedicated feature lets you set the preferred version to be shown in search results. To see why this matters so much, you need to understand how your site is perceived: Google can treat each variation of the same URL as a separate site.

So, even if www.example.com and example.com are one and the same site, Google crawls them as if they were different. This can hurt your ranking because the traffic gets split between the two. It’s not only the ranking that suffers; the domain authority takes a hit too. Your backlinks will be split between the two versions of the URL, and your online marketing efforts will be much harder. You won’t have a data set that reflects the actual situation in the search engine, so your decisions will be flawed.

It’s best to set up a preferred domain right away, so Google knows what to index and which traffic to monitor. To set it up, you need to add and verify both versions of your site’s URL. After adding one version, say www.example.com, repeat the same easy process for the version without the www. Then all you have to do is assign the preferred domain under “Settings”. That way, you’ll be monitoring everything from a single Webmaster Tools account.
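The Settings option tells Google which version you prefer; many sites also send visitors and crawlers from the other version to the preferred one with a 301 redirect. Here is a minimal Python sketch of that rewrite rule, assuming www.example.com is the preferred host (both hostnames are placeholders).

```python
from urllib.parse import urlsplit, urlunsplit

PREFERRED_HOST = "www.example.com"  # placeholder preferred domain
ALTERNATE_HOSTS = {"example.com"}   # versions that should redirect to it


def preferred_url(url):
    """Return the preferred-domain version of url, or None if it is already preferred.

    A server would answer requests for alternate hosts with a 301 to this URL.
    Ports are dropped for simplicity.
    """
    parts = urlsplit(url)
    if parts.hostname not in ALTERNATE_HOSTS:  # hostname is lowercased by urlsplit
        return None
    return urlunsplit((parts.scheme, PREFERRED_HOST, parts.path, parts.query, parts.fragment))


print(preferred_url("http://example.com/blog?page=2"))
print(preferred_url("http://www.example.com/blog"))
```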

How to Speed up a Google Disavow Recovery Using The Remove URLs feature

You’re going to have a great time playing with Webmaster Tools, but sometimes you’re gonna have to do things that you don’t want to. Things like removing pages and sites from the SERPs. These acts of cruelty can only be done by a site’s webmaster or by Google itself.

As Matt Cutts explained when the Disavow feature was introduced, a webmaster should resort to it only after going down the normal path of removing the unnatural links that affected the site. Whether you have low-quality links because of poor judgement or because an SEO company didn’t know what it was doing, you can use the Google Disavow tool to disavow the links you weren’t able to remove.

Even if Google hasn’t taken any manual action to penalize your site, you can still review your backlink profile and disavow everything that seems suspicious. Feel free to use this feature as a precaution against suspicious links, even if they aren’t causing any issues yet.

How to Block or Remove Unwanted/Obsolete Pages from the Google Index

You can block pages from Google by using a robots.txt file. At the moment, Webmaster Tools still has a feature that generates such a file, but not for long: a message in Webmaster Tools Help states that this tool is going to be removed, and that users will have to create the file manually or generate it with specially designed tools.

What does a robots.txt file do? What is its purpose? By placing such a file on your site, you can restrict Googlebot from crawling certain pages. As you may know, these bots crawl pages automatically using an algorithm, but before anything else, they read the robots.txt file to check whether you have applied any page restrictions.

You only need this file if your site has content that you don’t want to appear in search engines. If you don’t want to apply any restrictions, there is no point in having the file.
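Before uploading a robots.txt file, you can check which URLs its rules block. The sketch below uses Python’s built-in `urllib.robotparser` on a hypothetical file. This parser follows the original robots.txt conventions and may not match every extension Googlebot supports (such as wildcards in paths), so treat it as a rough check.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for illustration.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Disallow: /checkout
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for url in [
    "https://www.example.com/blog/post-1",
    "https://www.example.com/private/report.html",
    "https://www.example.com/checkout?step=2",
]:
    verdict = "allowed" if parser.can_fetch("Googlebot", url) else "blocked"
    print(f"{verdict}: {url}")
```

Disallow rules match by path prefix, so `/checkout` also blocks `/checkout?step=2`.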

You might think: why would I bother doing this? There are a couple of reasons you’re going to want to take extra care using this feature:

- You want to discard information from Google’s search results

- You want to discard information that is placed on other sites, from Google’s search results

When you need something handled quickly, this tool is your biggest helper: Google will address your request right away and expedite the removal. Otherwise, pages that are updated or removed completely will drop out of search results automatically; Google completes these procedures on its own.

This tool is intended for people who need to take immediate action on their site, for example when a page displays confidential or sensitive information. It’s also worth mentioning that it is meant for a small number of pages, where it’s a pretty simple process. The process can become complicated when you’re dealing with thousands of URLs that you have to handle swiftly.

There are many ways to block Googlebot. A Disallow rule in the robots.txt file stops bots from crawling a page (although the page might still get indexed eventually), while a noindex meta tag lets bots crawl the page but prevents them from indexing it. The latter keeps the page out of the index even if other pages link to it. Keep in mind that Googlebot can only see a noindex tag on a page it is allowed to crawl, so don’t combine it with a Disallow rule for the same URL.
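To confirm what a page actually tells crawlers about indexing, you can look for the noindex instruction yourself. This hypothetical Python sketch searches for `noindex` in a robots meta tag (`name="robots"` or `name="googlebot"`) and in an `X-Robots-Tag` response header. It ignores user-agent-prefixed header values such as `googlebot: noindex`, so it is a simplified check.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        values = {name: (value or "") for name, value in attrs}
        if values.get("name", "").lower() in ("robots", "googlebot"):
            for directive in values.get("content", "").split(","):
                self.directives.add(directive.strip().lower())


def is_noindexed(html, headers=None):
    """True if the page's meta tags or X-Robots-Tag header contain noindex.

    headers is a plain dict here; real HTTP header names are case-insensitive.
    """
    parser = RobotsMetaParser()
    parser.feed(html)
    header = (headers or {}).get("X-Robots-Tag", "")
    header_directives = {d.strip().lower() for d in header.split(",")}
    return "noindex" in parser.directives or "noindex" in header_directives


print(is_noindexed('<head><meta name="robots" content="noindex, follow"></head>'))
print(is_noindexed("<p>Public page</p>", {"X-Robots-Tag": "noindex"}))
print(is_noindexed('<meta name="description" content="A normal page">'))
```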

There are many other methods out there, some of them quite obscure, that are inefficient or, in some cases, completely ineffective. So if you need to block content from being indexed or remove unwanted or obsolete pages from the index, we encourage you to use the methods described here.

How to Change a Site’s Domain And Maintain Its Google Rankings

There are a few hoops you need to jump through to keep a site’s ranking while moving it to another domain, and we’re going to explain the main points to watch in this process.

Let’s say you’ve decided to move to another domain for a fresh start, but you don’t want to destroy the ranking you’ve worked for since you launched the site on your old domain. Be really careful with what you acquire: you might buy a domain that has issues with the major search engines, which will make the transition harder. Since your first step is buying the domain, do a little background check on it: look for any record of its activity in search engines and see which of its pages are still indexed. You can also use the archive.org database to get a glimpse of how the site used to look.

We suggest making this transition with care. Consider moving your website in baby steps, because you don’t want to end up with significant SEO problems. Start by creating a custom home page that announces the transition, and leave it like that for a few weeks before you start moving content, so that Google crawls and indexes it. Don’t move everything at once: the new domain may still have unresolved issues, and you don’t want those problems to affect your already healthy and optimized site.

Another strong recommendation is to point pages on the old domain to the new one with 301 redirects. Use these redirects only if you plan to move to the new domain permanently. Finally, if you plan on changing web addresses and restructuring the layout, don’t do both at the same time. It just means more trouble, both for you and for the search engine, and if your rankings drop, you won’t know which change caused it.
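Permanent redirects are normally configured in your web server, but the logic is simple enough to show in a few lines. This hypothetical Python WSGI app answers every request on the old domain with a 301 pointing to the same path on the new domain, which keeps the URL structure unchanged as recommended above; `https://www.new-example.com` is a placeholder.

```python
from urllib.parse import quote

NEW_ORIGIN = "https://www.new-example.com"  # placeholder for the new domain


def redirect_app(environ, start_response):
    """WSGI app: 301-redirect every request to the same path on NEW_ORIGIN."""
    # PATH_INFO arrives decoded as latin-1 per WSGI; re-encode and percent-quote it.
    path = quote(environ.get("PATH_INFO", "").encode("latin-1")) or "/"
    query = environ.get("QUERY_STRING", "")
    location = NEW_ORIGIN + path + (f"?{query}" if query else "")
    start_response("301 Moved Permanently", [("Location", location), ("Content-Length", "0")])
    return [b""]


# Call the app directly with a fake start_response to see the redirect it issues.
captured = {}


def fake_start_response(status, headers):
    captured["status"], captured["headers"] = status, dict(headers)


redirect_app({"PATH_INFO": "/blog/post-1", "QUERY_STRING": "ref=news"}, fake_start_response)
print(captured["status"], captured["headers"]["Location"])
```

To try it against real requests locally, you could serve it with `wsgiref.simple_server.make_server("", 8000, redirect_app).serve_forever()`.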

Google Algorithm Changes
