Over the last week, there has been much speculation about what Panda 4.0 has really changed in terms of rankings and sites. Because Google representatives have said little about what this update actually does, the SEO world caught fire with suppositions and advice on how to stay safe from the big angry Panda. It is indeed a bit early to draw 100% guaranteed conclusions about what the fourth Google Panda affects. Yet we think the best way to lift the veil of mystery is through in-depth research backed by real examples and case studies. A list of the winners and losers of this Google update might not be very illuminating on its own. We need to figure out the patterns that lead to the rise of some sites and the fall of others, even if finding them takes a lot of work and coffee on our part, and the patience and time to read a long article on yours.

We don’t want to throw ourselves into the belly of the beast right from the beginning, but our first hypothesis is that Google Panda 4.0 does not penalize sites in the way we are used to (a total drop out of the top 100 SERP results); instead, it deranks or boosts sites’ rankings.

If you browse just a bit through the articles written on this issue, you will see how the latest Panda is “accused” of being more “soft and gentle” than its elder brothers. Maybe the Google engineers wanted to bring the Google Panda 4.0 Update closer to the origin of the “big furry mammal”. It is known that Pandas are a symbol of peace. For example, hundreds of years ago, warring tribes in China would raise a flag with a picture of a Panda on it to stop a battle or call a truce. Yet, given Google’s declared war against spam and thin content, we think the search engine copied a different characteristic from the Panda bear: unlike other bears, giant Pandas do not hibernate. They walk around all year long, looking for elevations suitable for each season, and they can be active at any time of the day or night.

This is a really long article, so if you do not have the time to read it all, just skip to the conclusions. Here is the short TL;DR conclusion.

Conclusion I. “Content Based Topical Authority Sites” are given more SERP Visibility compared to sites that only cover the topic briefly (even if the site covering the topic briefly has a lot of generic authority). More articles written on the same topic increase the chances for the site to be treated as a “Topical Authority Content Site” on that specific topic.

Google Panda 4.0 Winners – Quality is Not a One Time Act, it is a Habit …

More than once, Matt Cutts has said that we shouldn’t trust specialized information coming from sites like eHow or generic find-any-answer sites, but should look instead for specialized sites, the ones that really have related content and well-documented material. Moreover, in a video posted in April this year, Cutts stated that he is “looking forward those rolling out because a lot of people have worked hard so that you don’t just say oh, this is a well-known site therefore should match for this query, it’s this is a site that actually has some evidence that it should rank for something related to medical queries, and that’s something where we can improve the quality of the algorithms even more.”

Here we are, one month after this video, facing the reality of the SERPs, where it seems that “Topical Authority Content Sites” have indeed gained much more ground. Let’s confirm this with some examples that speak for themselves.

Winner – Topical Authority Content Focused Sites

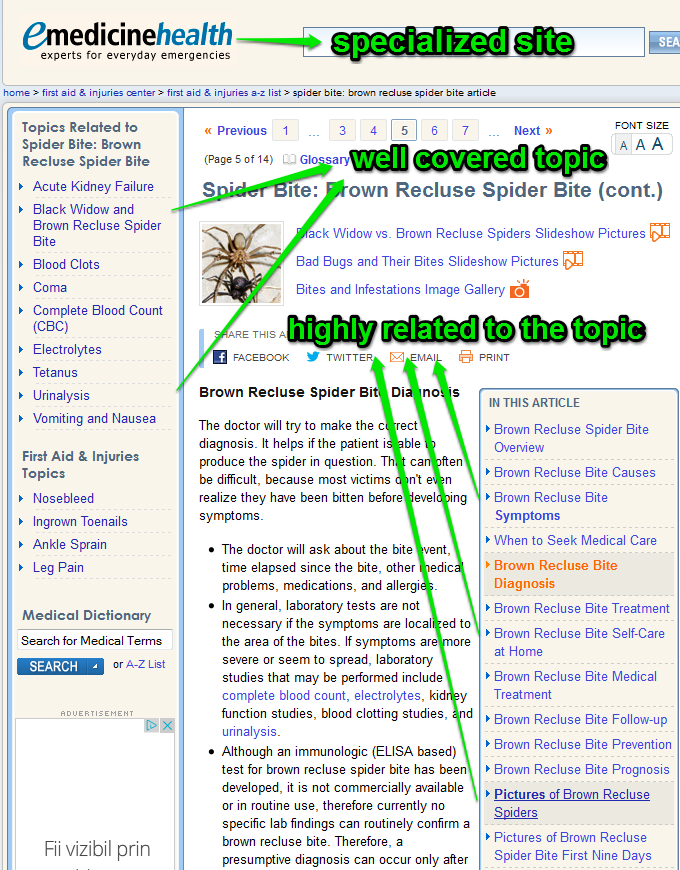

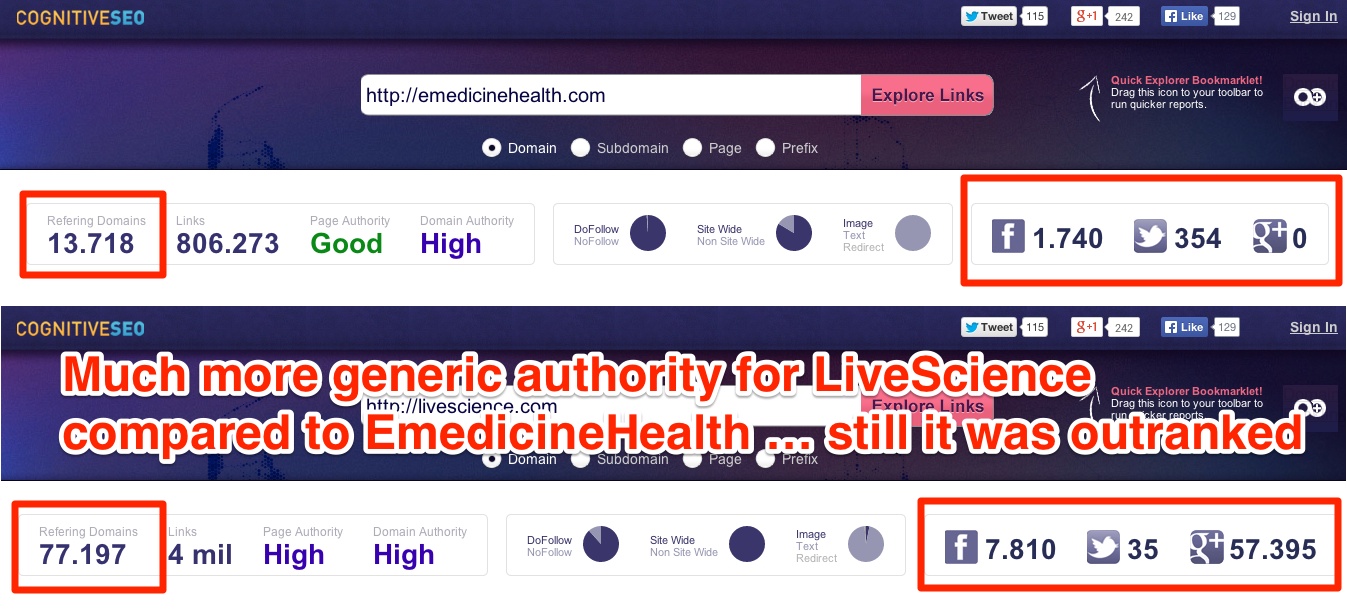

After the latest Panda update, EmedicineHealth seemed to have won important positions in ranking, as we can see in the screenshot below.

To figure out why this happened, we took the keyword “brown recluse”, for which they gained a lot of positions, and compared the content they offer on this subject with that of another site that lost 36 positions on the same keyword, LiveScience.

Winner

Loser

At first glance, both sites seem to offer good quality information, well written and logically structured. Moreover, livescience.com has great interactivity with its audience, judging by the high number of shares and comments. Nonetheless, emedicinehealth.com was the one that gained more than 99 positions on this keyword, not livescience.com. Why would this apparently paradoxical situation occur?

To start with, livescience.com is a site offering general information about a lot of things. The trending content on this site ranges from military and spy tech to the best fitness trackers and 3D printing. Emedicinehealth.com, on the other hand, offers articles closely related to the topic at hand, leaving the impression that the site really is specialized in medical emergencies and offers reliable content on this and related topics.

Also, in recent years, Google’s goal has been to return the best possible results based not just on the exact-match query but on the intent of the user making the query. It’s clear in our case that emedicinehealth.com covers the topic in more depth than the single article about the problem that livescience.com has.

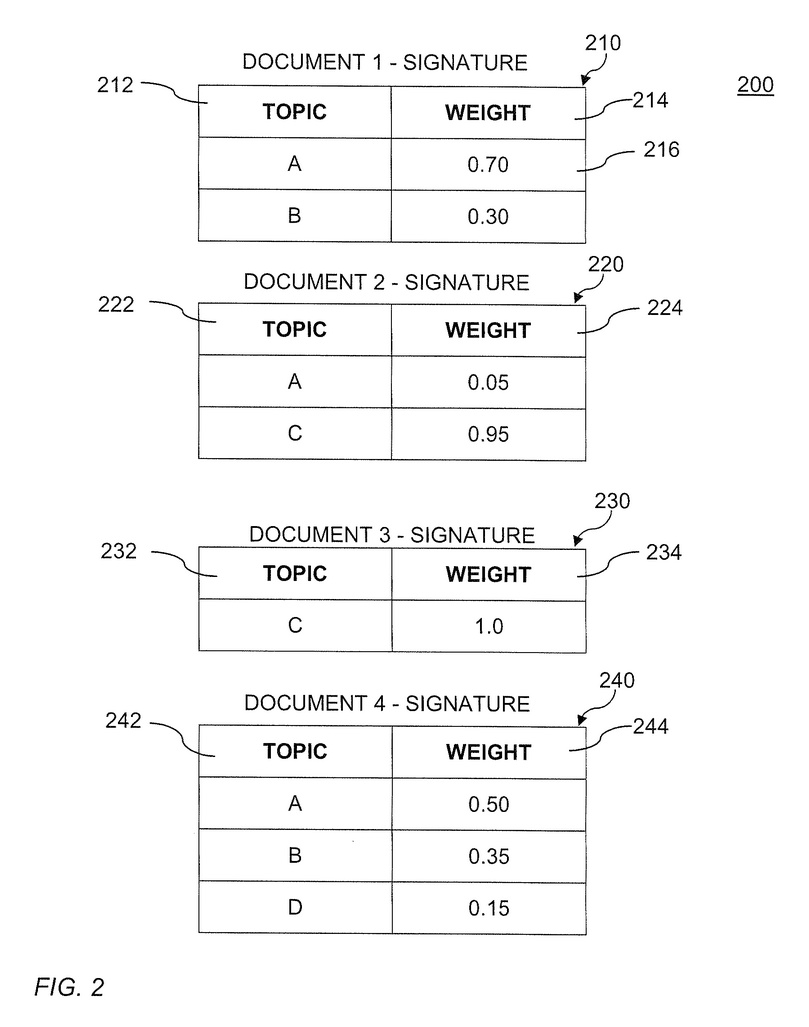

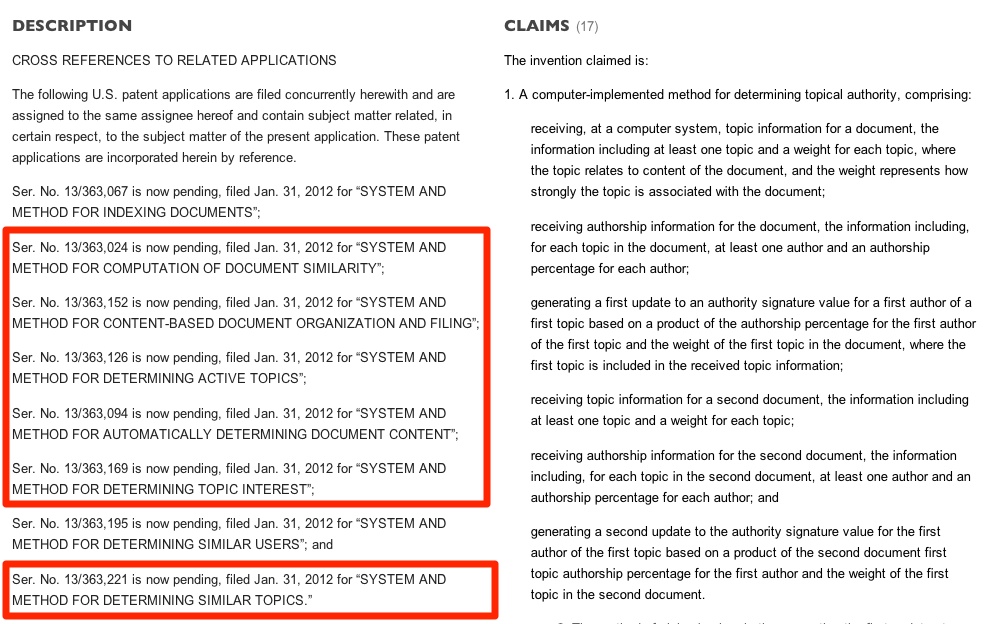

It is interesting to look at this patent, granted to Google in 2013, regarding the detection of topics in particular pages and documents. By applying this procedure to each individual page across the web, Google can map out the topics for each and every page and create buckets of pages from sites that talk about those specific topics. With this methodology it is rather easy for Google to understand the most discussed topics on individual sites and map them out based on the authority of each individual page relative to that topic.

The patent goes into more detail and outlines how it may be used by being cross-referenced to these related applications.

All of the listed references are practically exactly what they would need in order to map the Topical Authority of each site across their index.
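The bucketing idea described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the method from the patent or anything Google has confirmed: the site names, URLs and topic labels are invented, the topic of each page is assigned by hand rather than detected, and the "focus" score (the share of a site's pages that fall under one topic) is our own stand-in for topical authority.

```python
from collections import Counter, defaultdict

# Hypothetical data: (site, page URL, topic) triples. A real system would
# detect the topic of each page; here the labels are assigned by hand.
PAGES = [
    ("medical-site.example", "/brown-recluse-bite", "medical"),
    ("medical-site.example", "/spider-bite-first-aid", "medical"),
    ("medical-site.example", "/insect-stings", "medical"),
    ("general-site.example", "/brown-recluse-facts", "medical"),
    ("general-site.example", "/spy-tech", "military"),
    ("general-site.example", "/fitness-trackers", "gadgets"),
    ("general-site.example", "/3d-printing", "gadgets"),
]


def topic_buckets(pages):
    """Group pages by site and count how many pages each site has per topic."""
    buckets = defaultdict(Counter)
    for site, _url, topic in pages:
        buckets[site][topic] += 1
    return buckets


def topical_focus(buckets, site, topic):
    """Return the share of a site's pages that belong to the given topic."""
    counts = buckets.get(site)
    if not counts:
        return 0.0  # unknown site: no pages, so no focus on any topic
    return counts[topic] / sum(counts.values())


if __name__ == "__main__":
    buckets = topic_buckets(PAGES)
    for site in sorted(buckets):
        print(site, topical_focus(buckets, site, "medical"))
```

In this toy data set, the specialized site has all of its pages in the "medical" bucket while the general site has only one in four, which mirrors the emedicinehealth.com versus livescience.com comparison without claiming to reproduce how Google actually scores it.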

Let’s continue our Google Panda 4.0 case study with more examples that further underline the Topical Authority Content aspect of this Google update.

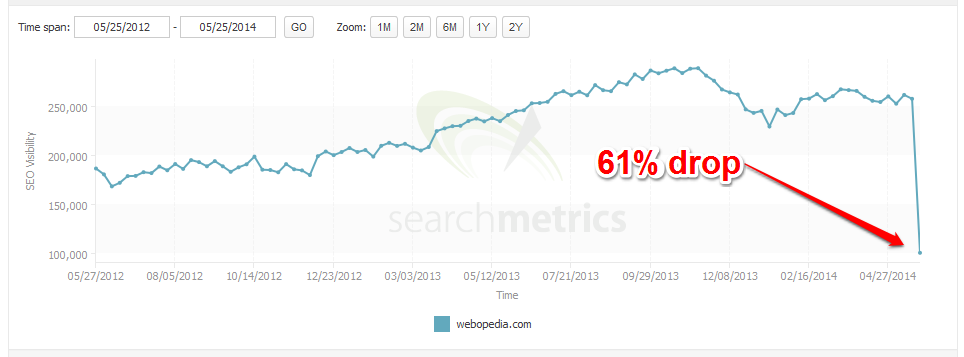

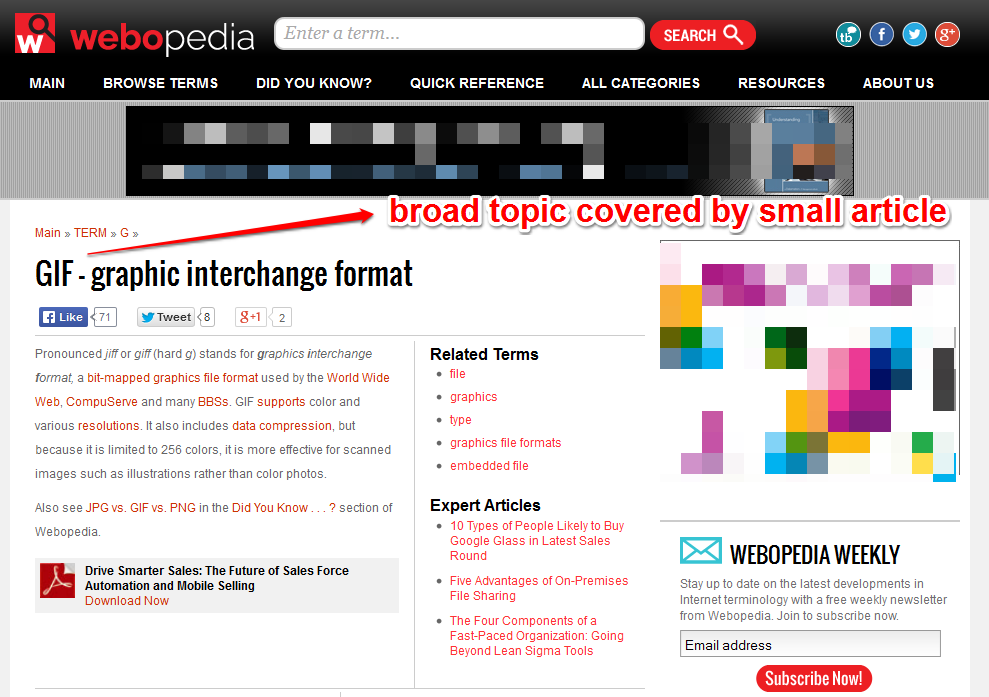

To support this claim, let’s take another example that has seen changes due to Google Panda 4.0. Webopedia is an online tech dictionary providing definitions related to computing and information technology. www.webopedia.com is among the sites that lost rankings for a lot of keywords, most likely because Google now looks for in-depth coverage of concepts and not just generic, simplistic content. Let’s take a look at one of Webopedia’s definition pages:

Here we find the definition of GIF, the Graphics Interchange Format. Although the definition seems correct, this is a broad topic that is covered only by a very small article. There are other publications that cover the concept of the GIF in much more detail, and those are the sites that now outperform Webopedia after the Google Panda 4.0 update.

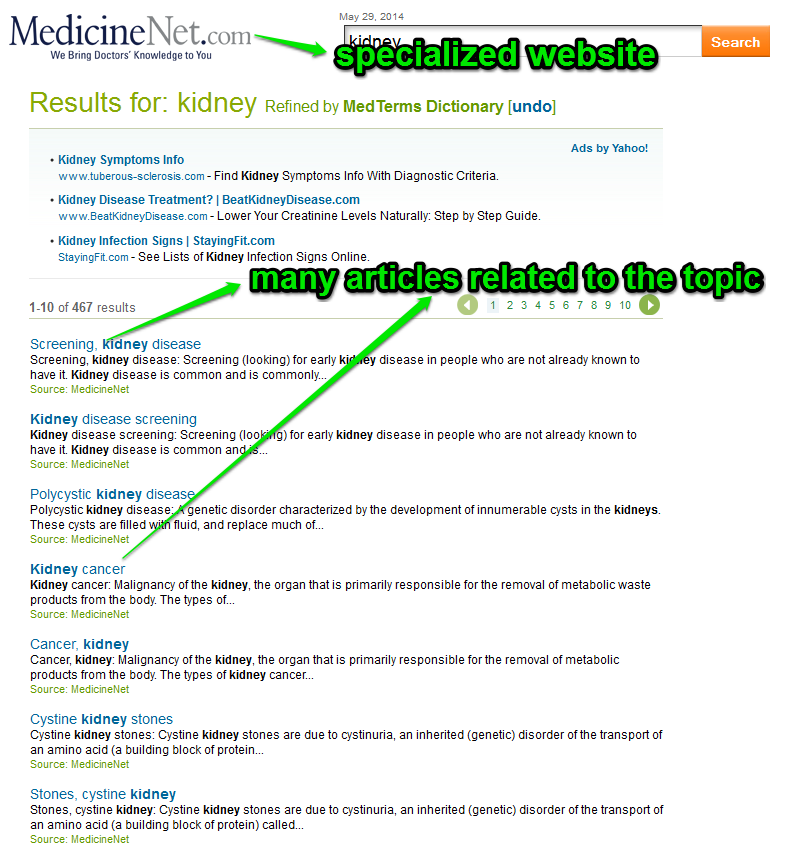

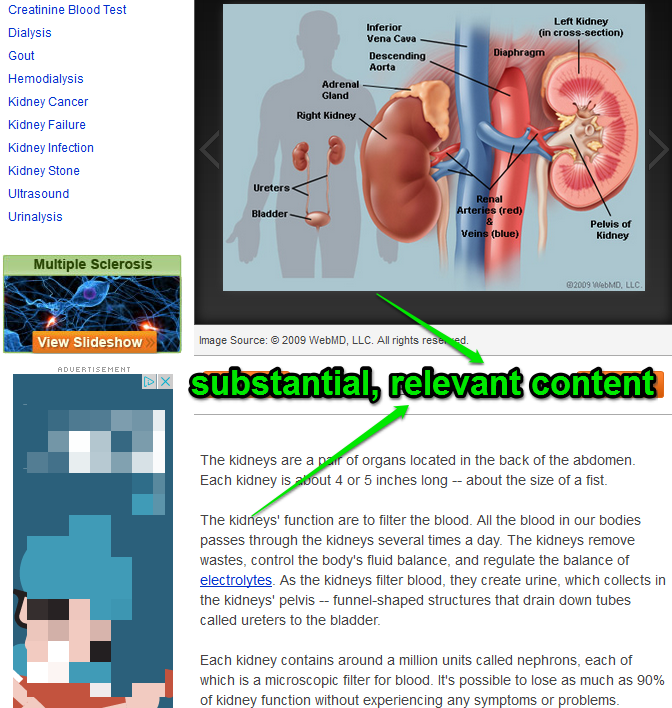

We shouldn’t draw a hasty conclusion from this and believe that dictionaries, by default, won’t be able to rank high because of Panda 4.0’s algorithmic improvements. Below, you can see two screenshots taken from MedTerms, an online dictionary specialized in medical terms but written, as they claim, with consumers and patients in mind.

When we searched for “kidney”, not only did we find many articles on kidney issues from which to choose the one that interests us most, but each of those articles has substantial and relevant content that covers the topic very well.

Winner – Great Site Structure + High Quality Content

Other sites that seem to have benefited from the Google Panda 4.0 update are the ones that focused on unique, high-quality and well-organized content. I know that this concept might seem a bit abstract, and deciding what “high-quality” really means is always a challenge. We cannot give you an exact, holistic answer on this issue, but we can try to figure out what really matters at the end of the day:

What does Google consider to be high-quality content? We are going to unravel the answer to this question by analyzing some sites that have been boosted by the Panda update, most likely on quality and structure basis.

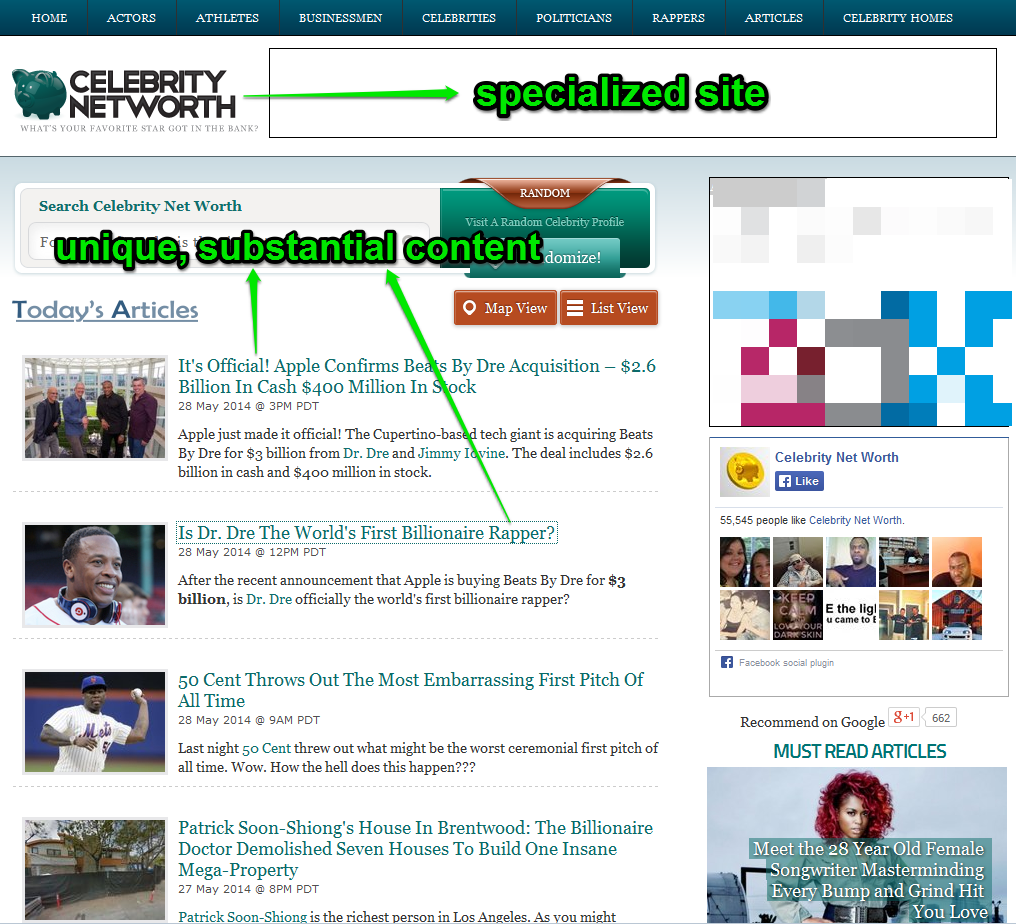

We will start our examples with some sites from quite a controversial area: the glamor and celebrity gossip field. Although a lot of skepticism is thrown up about the content quality of this area, there are sites that turn out to be very “Google Panda Friendly”.

As we can see from the screenshot above, the content generated on this site is really unique and substantial. It is neither regurgitated nor rephrased from other sites. Although the article titles are really flashy, the content matches those titles and responds adequately to the topic.

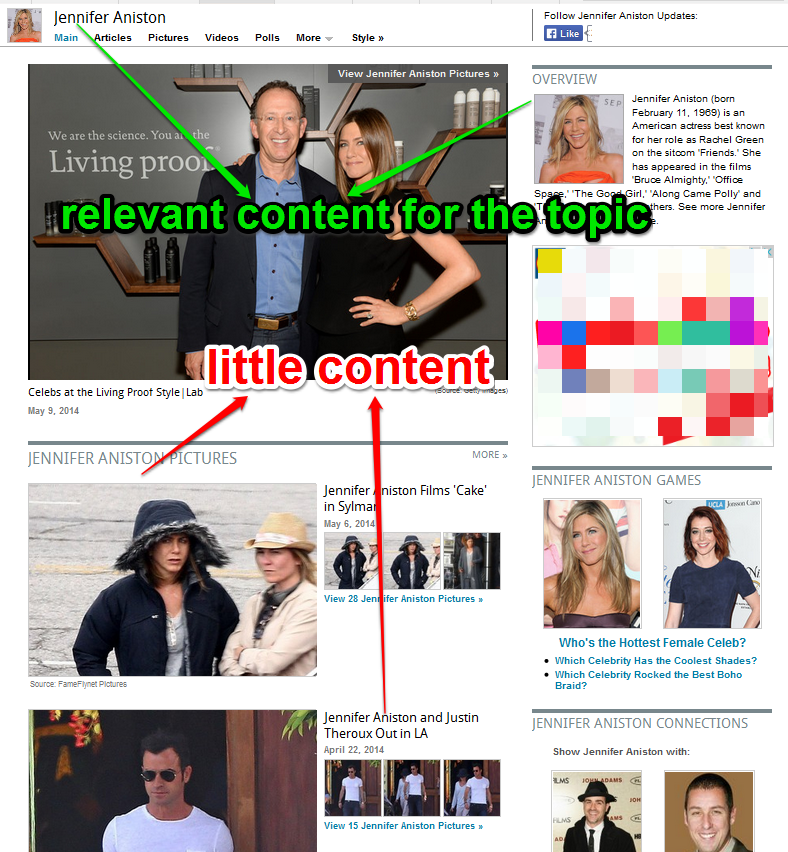

Another site from this area that saw a big increase is Zimbio.com. The site now ranks higher in the SERPs for celebrity names like Mila Kunis, Justin Bieber or Jennifer Aniston, and many others. Let’s take a peek at their site to try to figure out what Google found good enough to give them such a ranking boost.

In fairness, this site doesn’t seem to have much content at all, nothing beyond some headers and some pictures. So, did Google make a mistake in this case, or was the site ranked high for other reasons? Neither claim can be made with full confidence. Yet the content on this site is indeed relevant for the “Jennifer Aniston” topic and does bring a lot of information (even though in picture format) about the actress’s life, biography, etc. However, a lot of question marks hang over this website if we take into consideration not only the present Panda update but all the suggestions that Google has given us regarding the importance of the content on a website. Matt Cutts himself said in a video that

“If you don’t have the text, the words that will really match on the page, then it’s going to be hard for us to return that page to users. A lot of people get caught up in description, meta keywords, thinking about all those kinds of things, but don’t just think about the head, think about the body because the body matters as well.”

So in the end it might be that the site covered the topic of Jennifer Aniston and all the other celebrities much better than other sites in the same niche.

Could it also be about the Topical Authority of the site and how it built that authority using relevant internal linking structures based on the topics it covers in depth?

A site that Google Panda 4.0 has pushed to the front is myrecipes.com.

Not only do the recipes offered here seem to be original and really well written, but the site also has very well-organized content and structure. From its launch, the Google Panda algorithm focused on topical content segmentation and on site and article structure. It looks like Panda 4.0 still focuses heavily on good topical content segmentation.

Let’s take another example to reinforce this claim. The site we are talking about is Shopstyle.com.

In this case, we are dealing with an ecommerce site that, while presenting dozens of products, still manages to offer great user interaction thanks to its well-structured website. Due to its great customer-focused functionality, the site succeeded in winning a lot of SERP visibility for a bunch of important keywords.

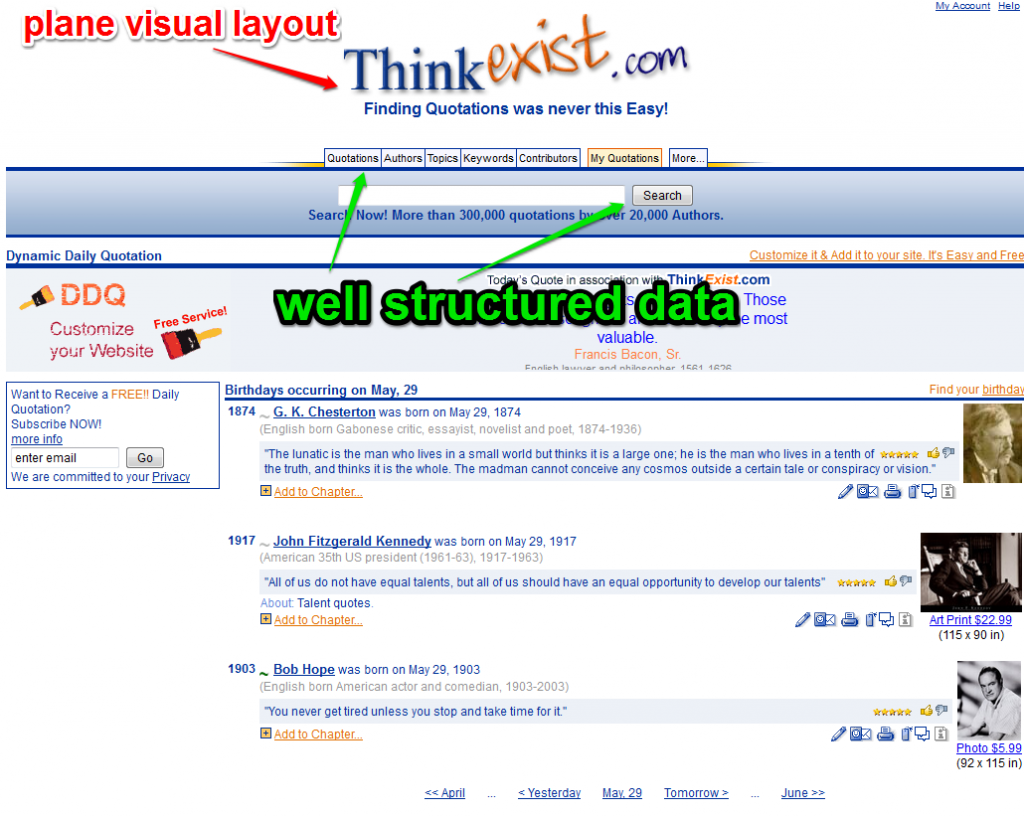

If you concluded from these examples of well-structured sites that your web page should be very flashy and conspicuous, allow me to give you an example of a site that is a “Google Organic Winner” of the latest updates with a simple and plain visual layout. The site is Thinkexist.com.

As you can see from the screenshot above, thinkexist.com is a site with a pretty plain visual aspect but with very well structured data. In fairness, in terms of graphic design this site does not excel but what is important (and what Google seems to appreciate) is that it is really well made and it makes it easier for users to interact with it also.

The Panda bear is mostly a solitary animal that meets others only occasionally for social feeding and mating. Did the Google Panda algorithm borrow this characteristic from its furry counterpart, or are social interaction, comments and sharing now more appreciated than before?

Asked at the beginning of this year whether Facebook and Twitter signals are part of the ranking algorithm, the short version of Matt Cutts’s answer was “No”. In this video, the Webspam Team leader said that Google does not give any special treatment to Facebook or Twitter pages. They are, in fact, currently treated like any other page. Google has also voiced concern about social media users’ privacy: if it crawled social media pages the way it crawls other sites, the snippets could end up containing information the user didn’t intend to show up in search results. Long story short, Google’s official position is that it doesn’t take social signals into consideration to rank a site.

Still, high user interaction, shown in comments and sharing on a website, seems to have boosted some websites after the latest update. Let’s put Ultimate-guitar.com, a site that brings together guitar tabs, music news, reviews, etc., under the magnifying glass.

When we look at the overall site, at first sight we find it hard to figure out what Google loves about it. What makes this site one of Panda 4.0’s favorites is the high interaction it has with its audience. On ultimate-guitar.com you can be a contributor (adding chords or tabs), you can request tabs that aren’t on the site already (and are highly likely to get a response in just a couple of days), you can be active on the forum and (maybe most important) there is a very strong “ultimate guitar tab community” in which you can easily connect with other people who share your passion.

So, although at first sight this website doesn’t appear to have obvious grounds to be boosted, its capacity to gather people who share the same passion and to sustain an active community is what Google wants to see.

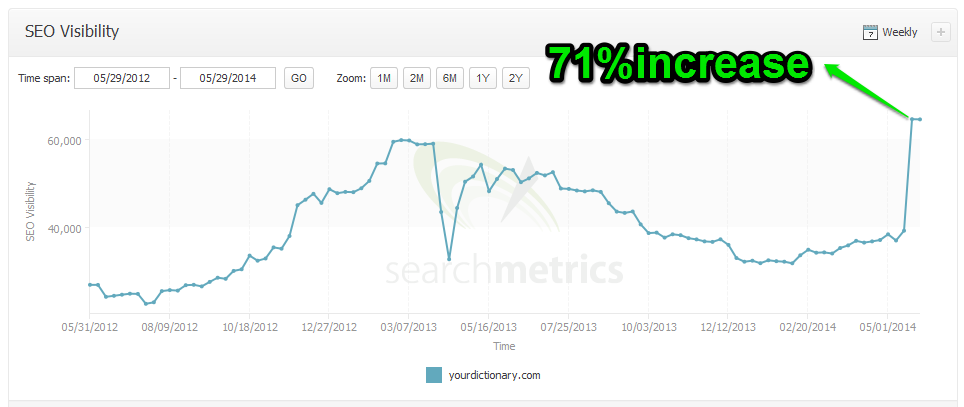

Allow me to give you another example of a site that has been doing better in rankings since the latest Google Panda update was rolled out on the 21st of May 2014. This is yourdictionary.com.

Above, we can see that at the very beginning of May, yourdictionary.com recorded a major increase. The reasons? Just as in the previous case, good interactivity and sharing.

Google Panda 4.0 Losers – Quality “Means” Doing it Right When no One is Looking

Since it was first released in February 2011, Google Panda has aimed to lower the rank of low-quality or thin sites and return higher-quality sites near the top of the search results. The fourth version of this algorithm keeps the focus on quality and continues the war against thin content and automatically generated content. As Matt Cutts mentioned in an interview:

“Content that is general, non-specific, and not substantially different from what is already out there should not be expected to rank well. Those other sites are not bringing additional value. While they’re not duplicates they bring nothing new to the table.”

Loser – Thin Content

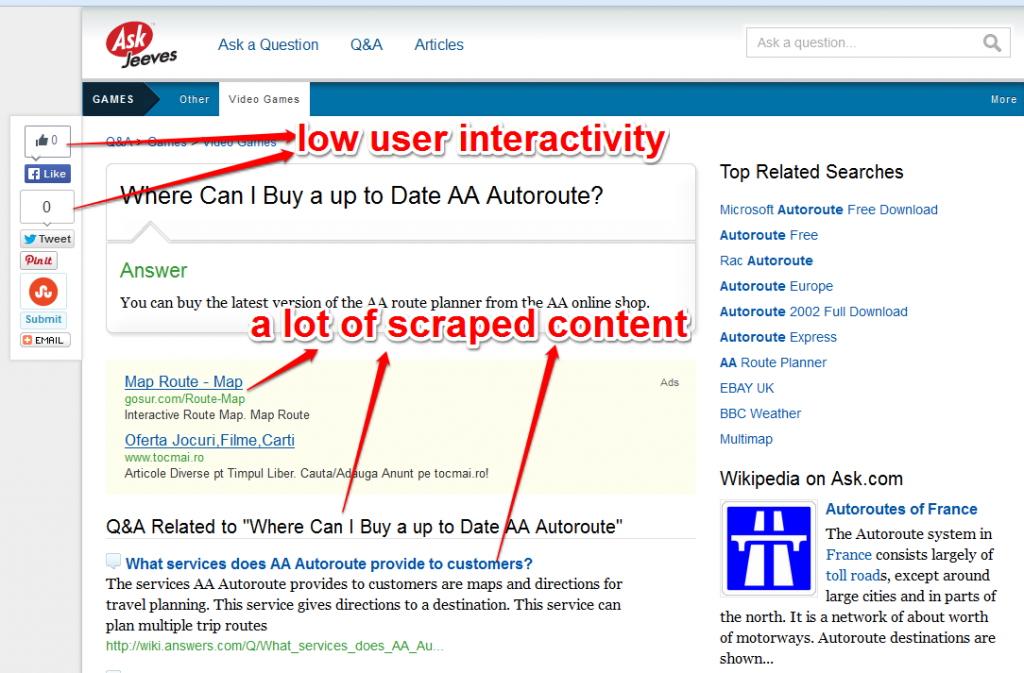

So, let’s see some examples that best illustrate the direction Google seems to be taking nowadays. I am sure you have seen the Ask.com site at least once in your life. It is, or was …, a big authority site that is mostly a Q&A community.

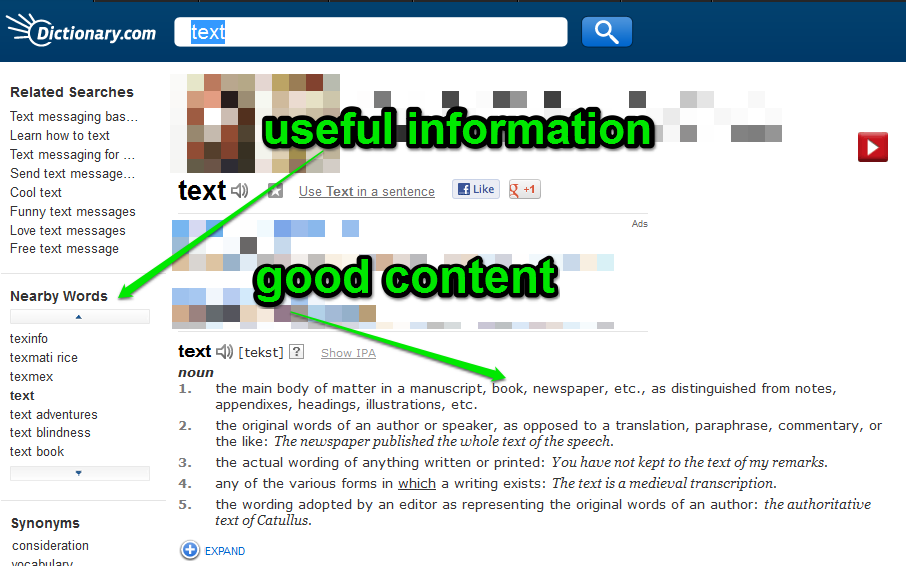

As we can see in the example above, Ask.com scraped a lot of content, and it seems that it did not pass the Google Panda 4.0 filter. They have a 72% drop. To draw an accurate conclusion, you can open a page from their site to see why it doesn’t line up with Google’s algorithm. If you look here, you will see that the user experience is not really the best. All we can see is a definition and then a lot of ads and scraped content. Even if they have some user interactivity, the thin content outweighs it. By comparison, to give you an idea of what Google considers adequate, take a look at a similar site, dictionary.com, which really provides a useful and pleasant user experience.

Loser – Automatically Generated Content

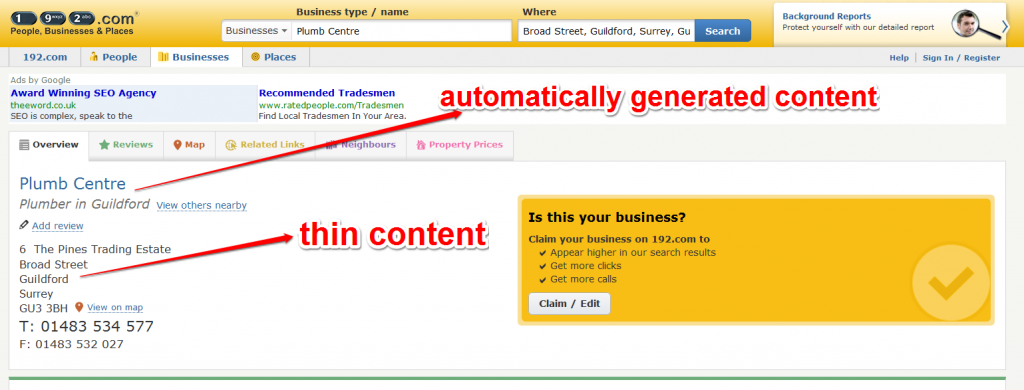

A lot of thin and automatically generated content can be seen on 192.com, a site heavily affected by Panda.

The page shown in the screenshot above is replicated across thousands of other pages. There is mostly no user-generated content, only a database built up without any real user interaction.

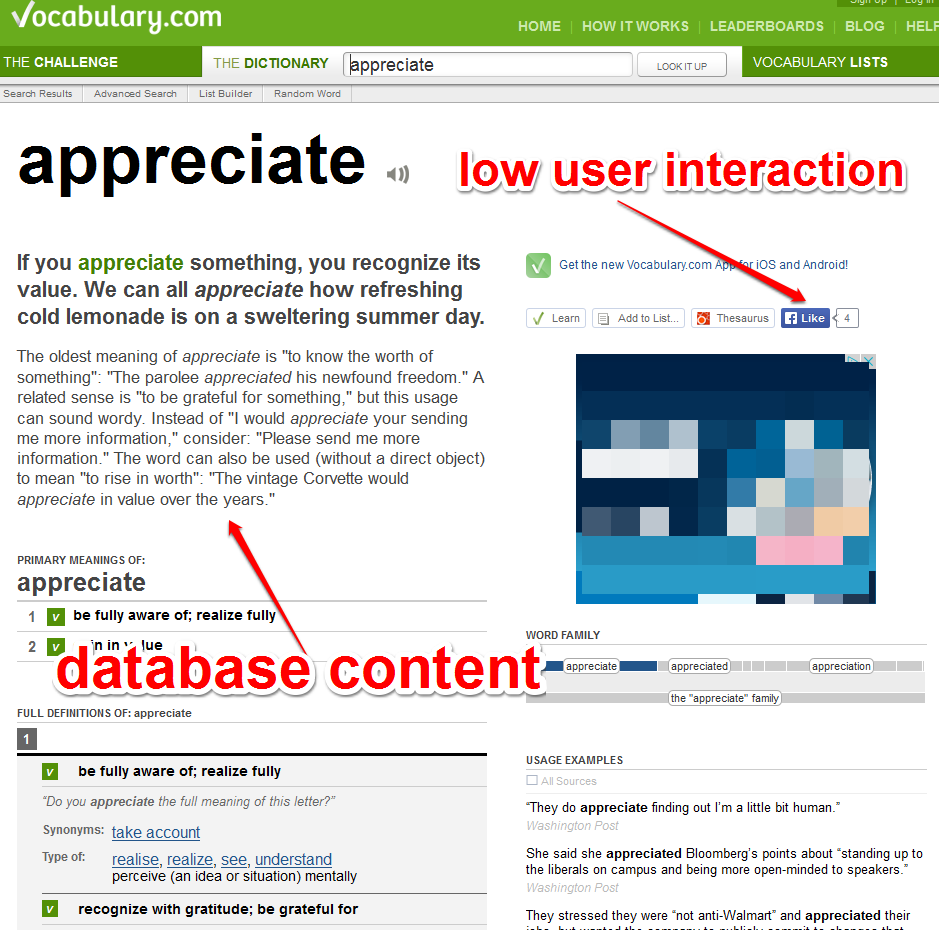

An intriguing situation can be spotted on Vocabulary.com, another site that has lost rankings due to the new update. Let’s take a sneak peek at their site to see what we’re facing here.

In this situation, the problem might be the database-style content, the lack of user interaction and the banners. But this kind of structure can be seen on other sites that are still ranking well and were not affected by Panda. We cannot say whether Google is making a mistake here or not but, hey, the algorithm “knows” better, right?

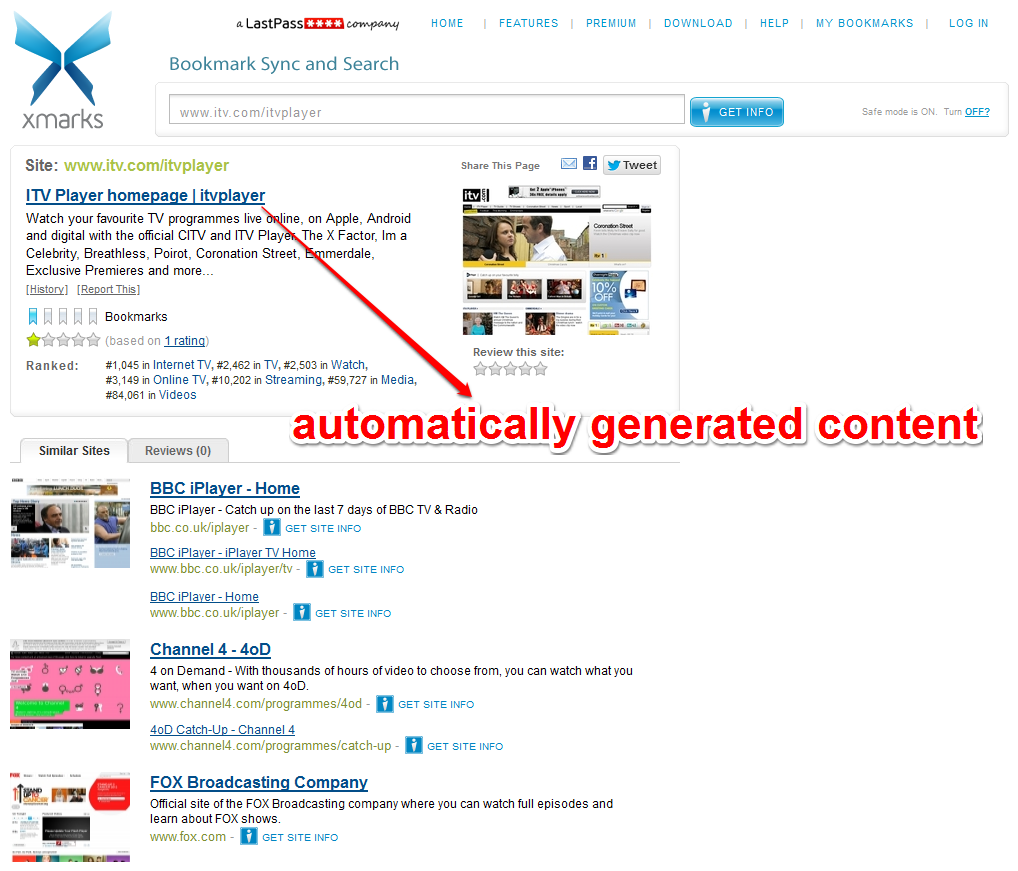

Below, there is another site, xmarks.com, that has been busted by Google Panda for having lots of Google Indexed pages with automatic content based on scraping other sites.

As can easily be seen in the snapshot above, taken from one of the xmarks pages, there is a lot of automatically generated content, which caused a massive drop in rankings.

Another case of automatic content generation caused a drop for isitdownrightnow.com. For each site it tracks, this site generates a distinct page showing (automatic) statistics about that site’s uptime. They have tens of thousands of pages, if not more, indexed this way. Yet we can also see some user interactivity in the form of comments and shares, as we can notice here.

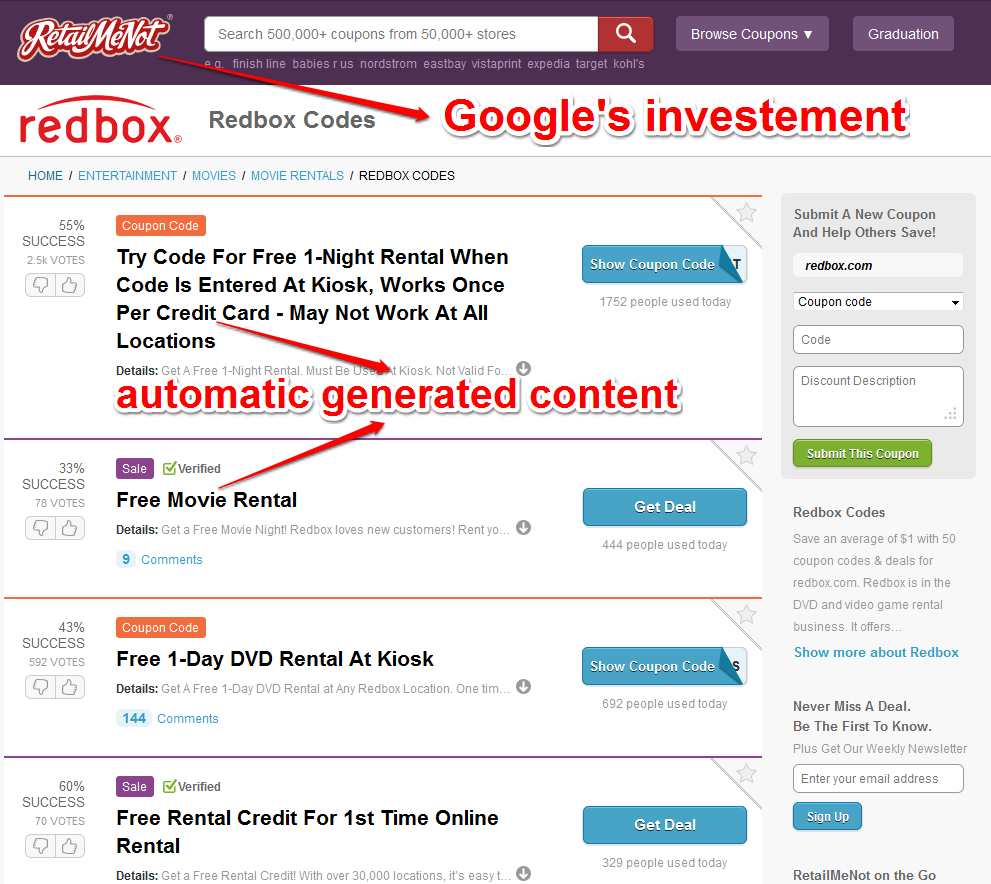

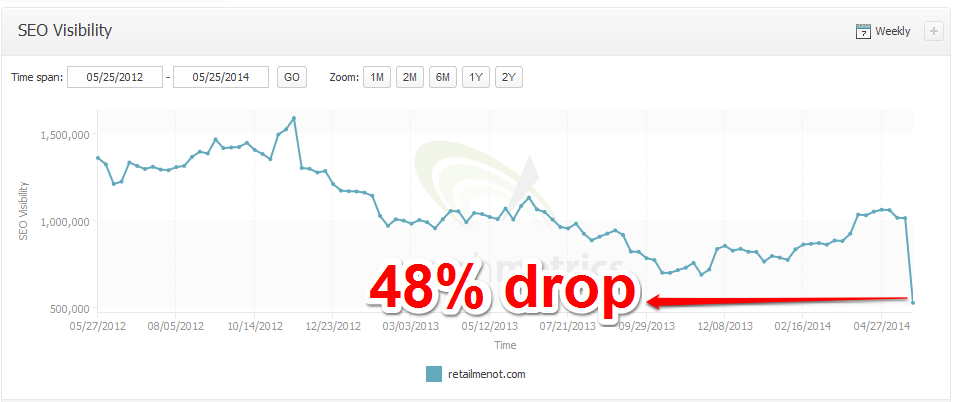

RetailMeNot was hit by Google Panda, probably because of its automatic content generation.

Pages such as the one illustrated above are full of automatically generated content without any user input, and these are scaled to thousands of pages. What is very interesting about this particular site is that much of RetailMeNot’s revenue used to come from its high rankings in Google. Given Google Ventures’ investment in RetailMeNot, there has been controversy as to whether these rankings were organically earned or not.

It looks like Google decided to put an end to all the speculation and, like a father who punishes his own kid for doing something wrong, penalized its own investment.

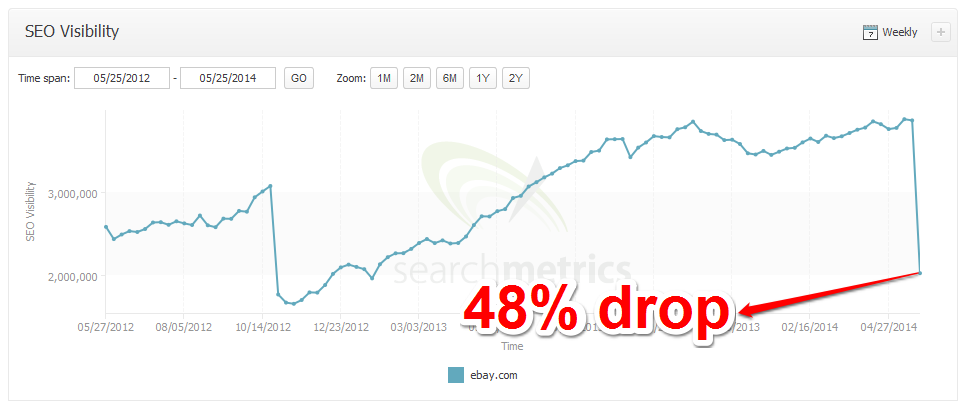

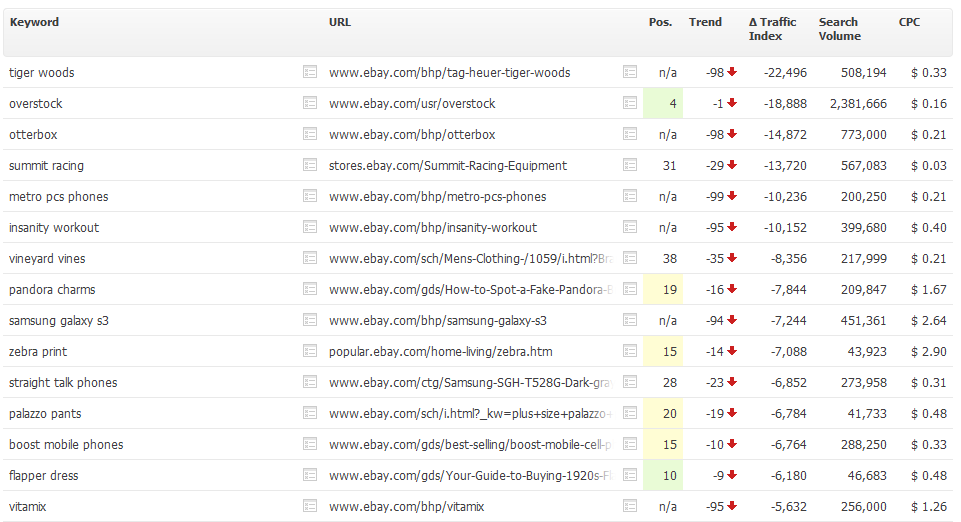

The Curious Case of the Ebay Ranking Drop

A lot of “SEO literature” has been written lately about the curious case of the Ebay Google drop. Some assumed that it was a duplicate content issue that pulled Ebay down from the high rankings it once had; others said that Ebay might have a structure issue. Aggressive internal linking on long-tail keywords has also been brought up. Yet other sites that have big ad banners above the fold are still OK. Although Panda had a problem with ads above the fold before, this does not seem to be an issue in this update.

As we stated at the beginning of the article, when it comes to the latest Google Panda, known as Google Panda 4.0, it is hard to talk in terms of penalties. Instead, we think that deranking is a more appropriate concept, and Ebay is a very good example of this. We are not saying that Ebay doesn’t have its problems, but in terms of content and user interactivity, they are doing pretty well. So what happened here? If you scroll up to the examples of sites that have grown in Google’s eyes, you will see that most of them are highly specialized in their own topic. A Topical Authority medical site talking about the “brown recluse” spider is doing far better than a generic one talking about science in general. Sites like 192.com or answers.com, which are not really specialized in anything but have a lot of universal content, have felt plenty of Google Panda’s anger. A similar pattern could apply to Ebay too. Smaller but more specialized sites in a certain area might have had a boost in ranking for some specific keywords, which automatically led to Ebay’s decrease. For example, Ebay lost 95 positions for “insanity workout”, while the sites now ranking at the top for this keyword are some “how to” YouTube videos and some fitness centers.

Conclusions

We tried to objectively analyze the consequences that Google Panda 4.0 had for several sites, in an attempt to find a pattern in how Panda 4.0 really works. Naturally, Google’s latest update brought joy to some websites and sorrow to others, but the most important thing is what we take away from all their stories. Many Chinese philosophers believe that the universe is made of two opposing forces, the Yin and the Yang. And it seems that the Panda, with its contrasting black-and-white fur, is one symbol of this philosophy: some websites went up, others went down.

Let’s quickly review the conclusions drawn from our analysis:

Conclusion I. “Content Based Topical Authority Sites” are given more SERP Visibility compared to sites that only cover the topic briefly (even if the site covering the topic briefly has a lot of generic authority). More articles written on the same topic increase the chances for the site to be treated as a “Topical Authority Content Site” on that specific topic.

Conclusion II. Sites with High User Interaction measured by shares and comments got a boost.

Conclusion III. Thin Content and Automatic content are deranked, even if they are relevant.

Conclusion IV. Sites with clear navigational structure and unique content got boosted.

Right from the launch of the first Panda, in February 2011, Google said it only takes a few pages of poor quality or duplicated content to hold down traffic on a solid site and recommends such pages to be removed, blocked from being indexed by the search engine, or rewritten. However, Matt Cutts warns that rewriting duplicate content so that it is original may not be enough to recover from Google Panda. The rewrites must be of sufficiently high quality, as such content brings “additional value” to the web.

A few important considerations:

- Take a look at the number of your indexed pages. If you have 1 million indexed pages with thin or duplicate content, you might have been deranked by the latest update. It’s better to reduce the number of indexed pages to only the pages that matter to your visitors.

- Be sure that your main pages, the ones that offer high-quality content, are indexed, and “no-index” the pages that don’t offer important content to the user.

- Investigate your site structure to make sure it links relevantly to the topical content on your site.

- Be less of a generic do-it-all site and more of a Topical Authority Content site in your niche.
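As a rough starting point for the first two considerations, the small Python sketch below flags pages that might be candidates for “no-index”. It rests on loud assumptions: the 300-word threshold is an arbitrary number of ours (Google has published no such limit), word count is only a crude proxy for thin content, and the URLs and helper names are hypothetical. The robots meta tag it prints is the standard way to ask search engines not to index a page.

```python
import re


def word_count(text):
    """Count word-like tokens in a page's visible body text."""
    return len(re.findall(r"\w+", text))


def pages_to_noindex(pages, min_words=300):
    """Return the sorted URLs whose body text is shorter than min_words.

    pages maps a URL to its body text. min_words is an arbitrary,
    assumed threshold, not a value published by Google.
    """
    return sorted(url for url, text in pages.items()
                  if word_count(text) < min_words)


def robots_meta(noindex):
    """Return the robots meta tag for a page, or an empty string."""
    return '<meta name="robots" content="noindex">' if noindex else ""


if __name__ == "__main__":
    # Hypothetical pages: one short stub and one long article.
    site = {
        "/definitions/gif": "GIF is an image format. " * 5,
        "/guides/gif-in-depth": "A long and detailed guide to GIF. " * 200,
    }
    for url in pages_to_noindex(site):
        print(url, robots_meta(True))
```

A list like this should be treated as a set of candidates to review by hand: a short page can still be the best answer for its query, and a long one can still be scraped or auto-generated.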

The Chinese philosophy says that the gentle nature of the Panda demonstrates how the Yin and Yang bring peace and harmony when they are balanced. It’s hard to tell whether Google Panda 4.0 brings any harmony at all but for sure it tries to bring balance in Google’s search results. At the end of the day, it is all about the user retention by providing a value added service or value added content for the user.

Nice break down of the latest Panda update. In terms of Retail Me Not and Ask Jeeves I am glad they got hit. Both of those sites are nothing but glorified scraper sites with little unique content. Ask Jeeves is really bad.

as you saw, automatic and thin content again got hit. the big difference now is the Expert Topical Content Authority in my opinion.

Yes!!! I agree with Jereme, both site are not relevant in contents. Thanks Razvan for post.

seems Google thinks the same.

sure!

Nice breakdown!

I’d sure like to see a whole lot more proof in the way of social activity before I EVER thought that played a role at all here. In every case where you show it is high, other factors also caused the site to do better. In every case where you show it is low, other factors caused the site to do poorly. In fact, if you look, there are a couple of instances shown where social engagement was high but the site did not do well because of other factors you cite.

I find it extremely unlikely that Google is using social interactions at all as part of its ranking algorithms – especially ones from Twitter and Facebook. If you thought backlinks were easy to manipulate, nothing is easier to fake than things like “likes.” If ( and that’s a big “IF”) Google ever does decide to weigh social interactions in any appreciable way, I’m sure they’ll only trust Google+.

It is just as likely that those social signals aren’t factors at all. Instead, they are a natural occurrence of a marketing approach and coincidentally match what is really causing websites to rise or fall. In other words, a website that is shared a lot socially probably has great content, site structure, etc. which causes more page views and lower bounce rates. The horse came first here and Google, in particular, may not be looking at the cart that trails behind the animal at all!

Google officially stated that they do not use the Twitter and Facebook social signals. Even so, those can be interpreted in various ways without calling them social signals directly. And with a smart algorithm that takes into account the strength of the “person” sharing or liking, it is harder to manipulate.

either way SEO is not an exact science and it is all about creating value in order to rank higher. At least this is what Google wants.

With this update they focus, in my opinion, on Expert sites that they could identify using various technique (check the patent listed there). These sites outranked Generic sites.

Content is becoming more and more important, it seems, and Google is able to “read” it until it “understands” it.

I have to disagree with this; “either way SEO is not an exact science” … it’s 100% mathematical = it’s 100% exact science … except of course that google won’t tell us exactly what’s going on, so we have to guess, but enough people guessing and testing has allowed the seo community to get it right, again and again … it might take a while, but … it’s 100% an exact science, it can’t be more exact; it’s 100% algorithms … there’s no grey area in seo, it’s 1 or 0 and that’s it.

tks for the comment Michiel.

what I meant was that SEO is not an exact science because the search engines obfuscate it. It is not a science at all. “Old-hat” SEO was practically finding ways to understand how the search engines work and using that information to improve your rankings. This is done by reverse-engineering the results. You can only draw conclusions based on a limited amount of research, and you cannot say for sure that your conclusion is the correct one, only that you have a high chance of having found the correct answer.

yes it is 1 and 0, but neither you nor I know the algorithms, so we cannot know whether, if we give it 1, it will output 1 or 0.

my 2c 😉

I enjoyed this post – thanks for the effort.

I agree that some of your examples put too fine a point on the factors contributing to rank gain or loss, but overall this effectively illustrates SEO’s increasing influence over marketing strategy.

I do want to push back on your comment about the presence of an algorithmic understanding of social influence. This doesn’t exist at scale, and has been accomplished by a very scant few.

Little Bird is an example of a good approach, inasmuch as it’s akin to semantic search. Having used their tool, it produces good results, but needs to be better. Results still need to be assessed with a fine-toothed comb, but what you weed through is far more relevant in terms of finding “authority” than any other tool I’ve used.

Social authority is an inordinately complex nut to crack. Authority is simply too easy to game, and ultimately any algorithm worth its salt also needs to consider contribution (author rank, anyone?) to really assess authority.

Thanks again for the post.

I totally agree the Social Authority is a “complex nut to crack” :). but like any complex problem it will finally be cracked :). and Google is best at cracking complex stuff. I am sure they can model authority under Google+ (same for links). But for Twitter and Facebook, theoretically they do not have access to their feeds, even though I am sure they crawl a lot or have third-party data that they could use at some point.

Scott, I agree that social signals are likely aligned with strong rankings, rather than a cause of those rankings (the ‘causation versus correlation’ argument that Moz have championed).

But I disagree that social signals are easier to spam.

The raw numbers are, absolutely. Which is why some short-sighted site owners will buy 10,000 followers and such. But it would not be difficult, access permitting, to assess how genuine those social profiles are. The work required to fake the kind of network / engagement / sharing activity that you get with a genuine account means that fake accounts will always stand out like a sore thumb.

Although I suspect they are not quite there yet, I have no doubt the search engines are building algorithms to identify and reward genuine (and authoritative) social sharing.

this is the same as link counts. Google does not care about the Link Numbers but about the Quality of the Links. The same will be applied to social. They will not care about the raw number of shares but about the quality of the sharers.

my 2c 😉

Thank you, for the article.

I have a question. Do you have any data about the effect of Panda for non-english queries?

Here in Hungary (Europe) we hardly noticed the effect of Panda 4.0, although previous Panda updates had major effects.

Thank you for your reply.

Hi Attila, for the moment only English queries were analyzed in this case study. More will probably come soon for local markets.

That was very informative!

It almost seems like Google is favoring a visually heavy version of Wikipedia, at least, that’s how I understand it.

Have you tested local search? We have not seen much of a difference from this update in our clients’ localized rankings.

it is about sites that are defined as Experts in their niches. Not necessarily about how the site looks like, but how it interacts with the user and how it affects the users’ experience.

Very well written, with each aspect of Panda 4.0 analyzed. Although it doesn’t do as much harm as the previous updates, it is still important to be aware of Panda 4.0 while creating content.

Thanks for this case study.

exactly my POV. it is more of a deranking update than a Penalty update from how I saw it.

I agree, Razvan. It affects the rankings more than the site’s PR or other things. But yes, it is a kind of red alert for content marketers not to curate or copy content from any other site/blog.

I think this

“Sites with High User Interaction measured by shares and comments got a boost.”

is a bit of a red herring. It seems more likely other metrics are being used to measure engagement than raw social share numbers and comments that can be so easily manipulated. Info around “long clicks”, query refinements on bounce back, etc. would seem more logical to me.

I also think it’s interesting on the ebay example that many of the terms are generic and it would be difficult to determine intent on them without utilizing user data and playing the odds.

I wonder if Google has shifted some of these SERPs to more well-rounded or informational pages over ecommerce/purchase pages.

Some interesting examples though thanks for the work!

As an algorithm, yes, they probably use other metrics which are harder for a human looking at them to quantify, but they probably try to emulate those “share numbers” somehow.

Shareability and virality data can also be extracted from external sources where they can see the traffic going through and estimate the approximate traffic of a site (not to mention Analytics).

In very spammy industries this was the way they did it a while ago. Commercial sites were mostly replaced with informational ones (loans and others).

glad you found it interesting 😉 and tks for the comment.

I’d like to see more case studies across industries with high cost PPC to see if they follow the same patterns.

Great breakdown, exactly the kind of post that will rank well 🙂

will look into that Gene 😉

Well, a very well written article for SEO enthusiasts like me. For me SEO is getting harder day by day. Link building and other old school methods don’t work anymore. As the author said, “Quality” and “Authority” are the only things that could actually make a huge difference in your SEO job.

Good luck guys.

What about the free encyclopedia, Wikipedia? Its strength was offering millions of pages about everything. Might this encyclopedia be a loser in the future in terms of Panda?

Wikipedia was always a “favorite” of Google. It is a generic site but it may also be seen as an expert in individual fields based on the strict editorial guidelines they have.

Interesting that emedicinehealth, medterms and medicinenet.com all suffered MAJOR traffic drops over the last few years at the hands of Panda, so it looks like they are just returning to their previous traffic levels. Also, checking a few pages in archive.org from 2012, medterms has made zero changes, so it’s all about the algo, not what they have done to improve the site.

will look more into this. nice spot Jan 😉

Indeed, I do not see any major modifications done to the site either. It seems this gives even more strength to my theory on the topical trust stuff.

Yes — I also noticed that, in the majority of the graphs shown, the increase or decrease seems to be more of a correction to pre-October 2012 levels. In contrast, the Retail Me Not graph looks to be a genuine reduction in overall visibility. From this, it would seem Panda 4.0 may indeed be about being gentler and fuzzier to sites that it was too rough with initially.

it is a change of the algorithm. it may be that they could not detect all this info accurately in the past and now they can and are able to segment it.

I have observed a few things.

Keyword: Vitamix

Website: http://www.ebay.com/ctg/Vitamix-5000-Blender-/62660126

Rank: 50

Keyword: Vitamix

Website: http://www.amazon.co.uk/Vitamix-TNC-Black-010231-Blender/dp/B005KQ2TYO

Rank: 4

Keyword: samsung galaxy s3

Website: http://www.ebay.co.uk/ctg/SAMSUNG-GALAXY-S3-MINI-SAMSUNG-GALAXY-SIII-MINI-UNLOCKED-/141738147

Rank: 26

Keyword: samsung galaxy s3

Website: http://www.amazon.co.uk/Samsung-Galaxy-SIII-Smartphone-Unlocked-UK-Sim-Free-Pebble-Blue/dp/B0080DJ6C2

Rank: 6

Conclusion I. “Content Based Topical Authority Sites” are given more SERP Visibility compared to sites that only cover the topic briefly.(even if the site covering the topic briefly has a lot of generic authority). More articles written on the same topic increase the chances for the site to be treated as a “Topical Authority Content Site” on that specific topic.

Conclusion I Thought: This could be a reason but then it should affect both ebay and amazon; as both sites don’t cover the topic briefly in spite of having high generic authority.

Conclusion II. Sites with High User Interaction measured by shares and comments got a boost.

Conclusion II thought: Both ebay & amazon have a high user interaction but why only ebay again?

Conclusion III. Thin Content and Automatic content is deranked, even if it is relevant.

Conclusion IV. Sites with clear navigational structure and unique content got boosted.

Conclusion III. & IV. Thought: Again both have relevant but thin content but only ebay is penalized. Both have clear navigational structure but they can’t have unique content as the product specifications would remain same. Both sites have almost similar content which shouldn’t be the only differentiator!

Hi Razvan, the analysis and your effort are brilliant and take us close to the reality of what Google could have had in the update. However, I was just trying to understand the algo’s discrimination against eBay and not Amazon.

Cheers!

Nice Post

Google Panda 4.0 is confusing, but we still have to fight.

fight to the top 🙂 think about possible future algorithm changes that could affect you 😉

So interesting to see all these stats

glad you found it worthwhile 😉

Well researched post, thanks. This is very helpful.

With eBay loss, could it be related to the fact that shopping on eBay is in direct competition to Google Shopping?

Seems rather obvious to me.

it could be anything. we are only doing reverse engineering 🙂 . no one will ever know for sure (except Google)

I was the lead writer on Ultimate Guitar for three years, so I feel I have some insight here.

Here’s what Ultimate Guitar has:

– Millions of guitarists using the website repeatedly to learn new songs (consistent and growing repeat visits over time)

– Lots of daily original content. The first thing I changed three years ago was to stop pasting news content, and to write everything original. We added social sharing, and made efforts to stir comment and debate with several articles per week earning hundreds of comments and thousands of shares.

– Constant linking out to other high quality sites, like Rolling Stone etc – I don’t know if this is a boost, but to be honest, if we’re rewriting their content then it seems fair to link back once. We would add original comment to every post too, linking back to previous relevant articles (“it was only last month that ozzy osbourne did… etc”)

– Targeted top 10 articles around keywords, like ‘top 10 best drummers’, which would ALWAYS spur a tonne of debate with people disagreeing and sharing.

These actions helped the site get into the alexa top 1000, though it seems to have wavered out since I left. Still doing great in the USA and UK, which were the main audience targets, though they get a lot of global traffic.

This is all just on the news side of the site. The site does have many weaknesses in its content, but the scale of original and community content is pretty huge. I can’t comment on how the SEO was run elsewhere on the site, and there was probably some smart stuff going on.

My takeaway with this in mind and your post:

1. Lots of well-segmented content, with decent fresh content in every section and community engagement

2. Repeat visits from people who clearly identify with your site, and probably browse similar content elsewhere

3. Massive community engagement

Well damn, that sounds like a good quality online resource if I ever saw it. Who’d have thought Google would reward a site like that?

tks for the inside input Tom. very valuable. the secret recipe seems to be the user generated content that is editorially validated and a good linking structure for the site. and they found a way to scale it 🙂

Great article, makes a lot of sense about precise content and expertise being king. But that does not seem to be the case at least in our field where the big box stores that offer little in content or expertise all got big boosts and all of the sites that offer higher quality products and much more expertise and information ALL got hammered.

Maybe we got it all wrong but do you really need to go to Google for something you need to buy or find at Home Depot?? If I want to know if Home Depot has my item or I want to order it online I simply go to the Home Depot website. Most likely you already know if they have what you want or not since most people have been in a Home depot a thousand times. If Google wants a better user experience I would think it would rank sites that offer alternate product options to the thousands of chain retailers that everyone knows about already. Google is for helping you find what you don’t know exists.

I think!

To me it’s like if I google hamburgers, do I really need Google to put McDonald’s at position 1 (not saying they do, just an example)? If I’m googling hamburgers it means I’m looking for alternatives to McDonald’s, Burger King etc.

I think that’s why eBay got hammered. Do I need Google for eBay items? No, I go to eBay directly. I need Google to find stuff that is maybe not on eBay.

Just my confused Panda 2 cents. Again great article and thanks

Google changes all the time. And this is the perfect example. Sites that were de-ranked in the past were boosted now. They evolve and based on their analysis they try to offer the best user experience possible and at the same time cash in based on that. With the latest Hummingbird update this is exactly what they are trying to do: understand the searchers’ intent. It is not simple but it is doable to some extent. So we should watch and see how Google changes over time.

Hi Jim, I agree with your point about eBay. People buying on eBay would never need Google to search but if that was the case then why not Amazon also? If you could please see I have made a point about this earlier in the conversation. For the same set of keywords that eBay has lost positions Amazon hasn’t. And when I did a detailed page to page comparison there isn’t a real differentiating factor. Thanks

I have seen many sites in my own portfolio that validate many of your observations here. In managing dozens of local lead gen sites, I have sites on either side of the “topical authority” spectrum.

The sites that were thin on content, with just one page for each targeted keyword (I’ve always called them “one page wonders”), suffered an average ranking drop of around 15 positions.

Those that had significant content and site structure (silo architecture with several supporting articles in each silo), however, either stayed strong or improved in rank position.

In fact, I have taken a couple of the sites that took a hit and added additional supporting articles, categories, etc and seen a positive movement already.

So while I’m not saying this is 100% definitive, I have strong evidence to support this observation.

Nicely done case study, Razvan.

tks for the comment Bradley. glad to see another confirmation on my research here. surely this is not 100% exact. But as I said before I see a lot of sites with the same pattern that led me to conclude this. glad to see you have already seen improvements 😉

Hi Razvan, thanks again for this helpful analysis to get through Google Panda 4.

I’ve written a little excerpt of this in Italian: hope you’ll enjoy it! Here’s the link:

http://goo.gl/3GQev9

glad you found it interesting Marco. super 🙂 tks for translating part of it to Italian. The research will reach more people now and show them what should be done.

Brilliant synopsis of Panda 4.0. Thanks for these case studies Razvan!

tks for the appreciation Bruce.

Good findings, Razvan. They help a lot and give a clear idea about Panda 4.0. I guess the same goes for job board sites. Most job sites only scrape jobs from other sites and post them on their own site. But if these sites are compared to Glassdoor then there’s a huge difference. GD provides way more information about the company, work culture, interviews, pay scale etc. compared to other job sites, which only give job listings. This is why GD is among the winners in the Panda 4.0 update. People want more information when it comes to any product or service, and a business, whether it is online or local, needs to understand this and research its target audience in order to give them ‘MORE’ information.

Google improves their algorithms in order to better analyze and segment content and authority. sometimes it may not be what we expect and it may be addressed in a future update. it is a continuous evolving process.

It is difficult then, isn’t it, in the light of this information, to know how to handle sites that sell a product as the retail agencies for a company, especially if they are in an industry where very specific wording is critical in order to prevent misleading the public because the product is very technical. From the parent company’s point of view, they need the agent’s site to duplicate their authorised version of the content, yet the parent company needs the local agents to rank. Will location-based SEO trump a couple of duplication issues in this scenario?

A rel=canonical tag would probably be required here. This kind of depends on the specific scenario.
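
For readers unfamiliar with the tag mentioned in this reply, here is a minimal sketch of how a canonical link element could be generated for an agent page. The helper name `canonical_link` and the example.com URL are hypothetical, made up purely for illustration; whether pointing an agent page at the parent company's version is the right call depends on the scenario, since the canonical target is the page that search engines are asked to prefer.

```python
from html import escape


def canonical_link(preferred_url: str) -> str:
    """Build a <link rel="canonical"> element for the page's <head>.

    preferred_url is the version of the content that should be treated
    as the original (hypothetical example URLs are used below).
    """
    # Escape the URL so characters such as & and " stay valid inside the attribute.
    return f'<link rel="canonical" href="{escape(preferred_url, quote=True)}">'


if __name__ == "__main__":
    # Hypothetical parent-company page that an agent site duplicates.
    print(canonical_link("https://example.com/products/widget?lang=en&region=uk"))
```

The resulting element goes in the head of the duplicating page, not the original one.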

Hi Razvan – You provide a lot of useful examples here about what might be driving Panda 4.0. Thanks for sharing your insights.

Another site that seems to have benefited significantly from this latest algo update is AllBusiness.com. I think it fits with your conclusions #2 and #4. What’s interesting about their content is that much of the most highly ranked seems to be author-less reference information rather than how-to or expert opinions. Example: a glossary of business terms or a list of “top business ideas” (but without an author).

This goes against the idea that associating an author (person with “authority”) benefits the content’s visibility. (Reading through the patent you mention, I thought it might add more weight to authorship.)

But it does support your idea of “Topical Authority” for the site. In this case, AllBusiness does have a long history of relevance and authority in their space of small business information. And they cover this topic extensively. So it seems their overall authority for this topic space has been recognized better by Panda 4.0 and thus boosted their visibility.

tks for the detailed comment Kevin. Author Rank may or may not be used by Google yet. I did not see any relation to this in this Panda update though. Google may use this as another factor about the content quality to give it an initial boost but that would be it I think if the content does not perform well over time.

Topical Authority is mostly about the content on the entire site. And here I was referring to the entire site as being an authority in a specific niche or niches.

Razvan,

Great post. I’ve been referring back to it for weeks.

What if you don’t have 1 million pages of thin or duplicate content but you do have some? I own several sites in the web design space. Clearly Google sees the content as thin because they took up to 50% of the traffic on various sites with Panda 4.0. It’s hard for me to know which posts to remove.

Over the past year I have noticed there is so much duplication of content going on in the space and I have been trying to fight against it. For example, for one keyword I found that over a 4 year period I had 7 posts that were basically saying the same thing with different players mentioned etc. Since it was just curated content with no real differentiation in the posts, I had my writers post a new, larger and more authoritative post and then 301 redirected the old posts to that one. The results were very good. It became my top post and is still top today.

However, the “deranking” of panda 4.0 still took away 50% of the traffic on that post. I have about 7k pages indexed. If I go and start removing duplicate content (I assume 301 redirecting to the best version on my site is ok) and deleting stuff that is very thin are you saying that at some point I might see a lift everywhere?

Are any expert SEOs reviewing sites for this very thing and making recommendations about what to delete, redirect, etc?
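
The consolidation described in this comment (several near-duplicate posts 301 redirected to one stronger post) can be sketched roughly as follows. This is only an illustration under stated assumptions: the function names and the example.com URLs are hypothetical, and the output uses the Apache mod_alias `Redirect 301` form, which may not match the server actually in use.

```python
from urllib.parse import urlsplit


def build_redirect_map(old_urls, target_url):
    """Map the path of each old post to the path of the consolidated post."""
    target_path = urlsplit(target_url).path or "/"
    redirects = {}
    for url in old_urls:
        path = urlsplit(url).path or "/"
        if path != target_path:  # never redirect the target page to itself
            redirects[path] = target_path
    return redirects


def to_apache_rules(redirects):
    """Render the map as Apache 'Redirect 301' lines (assumes mod_alias is available)."""
    return [f"Redirect 301 {old} {new}" for old, new in sorted(redirects.items())]


if __name__ == "__main__":
    # Hypothetical near-duplicate posts and the new authoritative post.
    old_posts = [
        "https://example.com/2011/best-tools",
        "https://example.com/2012/best-tools-updated",
        "https://example.com/best-tools-guide",
    ]
    rules = to_apache_rules(
        build_redirect_map(old_posts, "https://example.com/best-tools-guide")
    )
    print("\n".join(rules))
```

Keeping the mapping in one place makes it easier to review which thin or duplicate pages are being folded into which target before anything is removed.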

Brad, Glad you found it interesting and worthwhile 😉

glad to hear about the content curation experiment and that it worked so well. I think that the days when you would give all you had on the site for Google to index are kind of dying. Nowadays probably it is better to curate the content that you may be interested in ranking and not having all irrelevant content pages indexed in Google in order to keep their index clean and the best picture about you in Google.

Great post, Razvan sir. It’s always a pleasure reading your informative and in-depth articles that really get into the heart of what’s going around in SEO.

This article will surely be featured in my next post.

Regards,

Nauf Sid

Great article, Razvan.

I enjoyed it very much.

Do you have an explanation for Indeed.com’s overwhelming presence in Google’s SERPs? It seems that this ‘ask.com of jobs’ has found the right mixture of scraped content, user generated content, reviewing and internal link structure. But when one uses Google for finding a job it looks like Indeed is practising reverse engineering of the results. Some cached queries even show a low match on keyword density level, compared to other more relevant sites. So when and how does relevance become a decisive factor for Google? Do you think that keyword stemming is of more influence now, since the latest Panda update?

Hi Ernst, I have not checked the Indeed case so can not say too much about it. regarding keyword stemming I would recommend another study that I wrote. https://cognitiveseo.com/blog/5370/941-traffic-increase-exploiting-the-synonyms-seo-ranking-technique/

Great article, Razvan. As SEO task levels increase, it is challenging to see how Google Panda negatively impacted auto-generated web content, but it makes sense. I would love to see an update on the topic as we complete quarter 1, 2016. Thanks also for your recent post titled: “39 Rarely Used SEO Techniques That Will Double Your Traffic”. What a long list – “The Comprehensive List of Amazing SEO Techniques”.

Thanks, Razvan, for sharing this article. It is really nice how you visually explained everything; very informative and helpful.