Image reading and object recognition in images is an important task and challenge in image processing and computer vision. A simple search for “automatic object recognition” on Google Scholar returns a long list of articles mixing all sorts of sophisticated equations and algorithms, dating from the early ’90s until the present. The subject has intrigued researchers in the field from the very beginning, yet it still seems to be work in progress.

Not long ago we took you into the world of Google Image Search with an in-depth case study. We tried to figure out whether Google can read text from images and what the implications are for the SEO world. We are now back with new research in the same “image search” field, which tries to shed light on Google’s progress and on how an SEO professional should keep up in order to rank better in the future.

The future belongs to those who are prepared for it today! In the near future, Google will probably change the way it ranks images, and those changes could dramatically affect search and, with it, the SEO world.

We are not talking about a minor algorithm change, one of those that affect only a small percentage of searches. It is the next generation of image search we are about to face. It will undoubtedly be a great step forward for the search industry, but the true success will belong to those who were one step ahead and already prepared for these big changes.

Why is object detection in images important to the digital marketing community?

At the end of the day, it all comes down to rankings.

Object Detection in Images will add an extra layer of ranking signals that cannot be easily altered.

An image with a blue dog will rank for a keyword related to blue dogs and not for a keyword related to red dogs. This has two important implications for the SEO industry:

1. Searches for a particular keyword will return fewer false positives, because results are matched against what the image actually contains.

2. It can also be used to relate a page’s content to the actual image without any other external factors. If a page has a lot of photos of blue dogs and various other dog-related items, then that automatically strengthens the signal that the page is about dogs.

Yet, a question may come from this:

Could this be a new era for Object Stuffing in Images as a shady SEO technique?

I do not think so, as the algorithms are advanced enough nowadays to detect this kind of spam intent. However, it’s definitely a new generation of search we are talking about, one that may come with big changes and challenges for the SEO world.

Google, Artificial Intelligence & Image Understanding

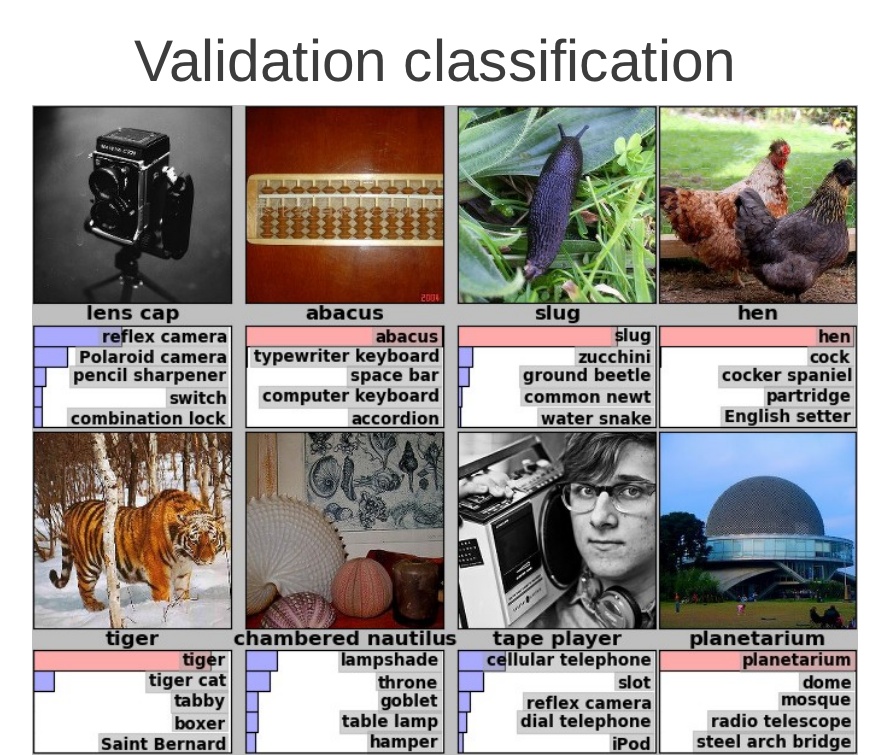

Image understanding is a pretty big deal for everyone, which is why a visual recognition challenge has existed since 2010. It is called the ImageNet Large Scale Visual Recognition Challenge (or ILSVRC) and it is a fine example of how competition fosters progress. There are three main tracks in ILSVRC: classification, classification with localization, and detection. This means algorithms entered in this challenge are tested both on how well they recognize objects in a particular picture and on whether they “understand” where each object is located in the picture.

This level of superior performance in the detection challenge requires the industry to push beyond annotating an image with a “bag of labels” in hopes that something will stick.

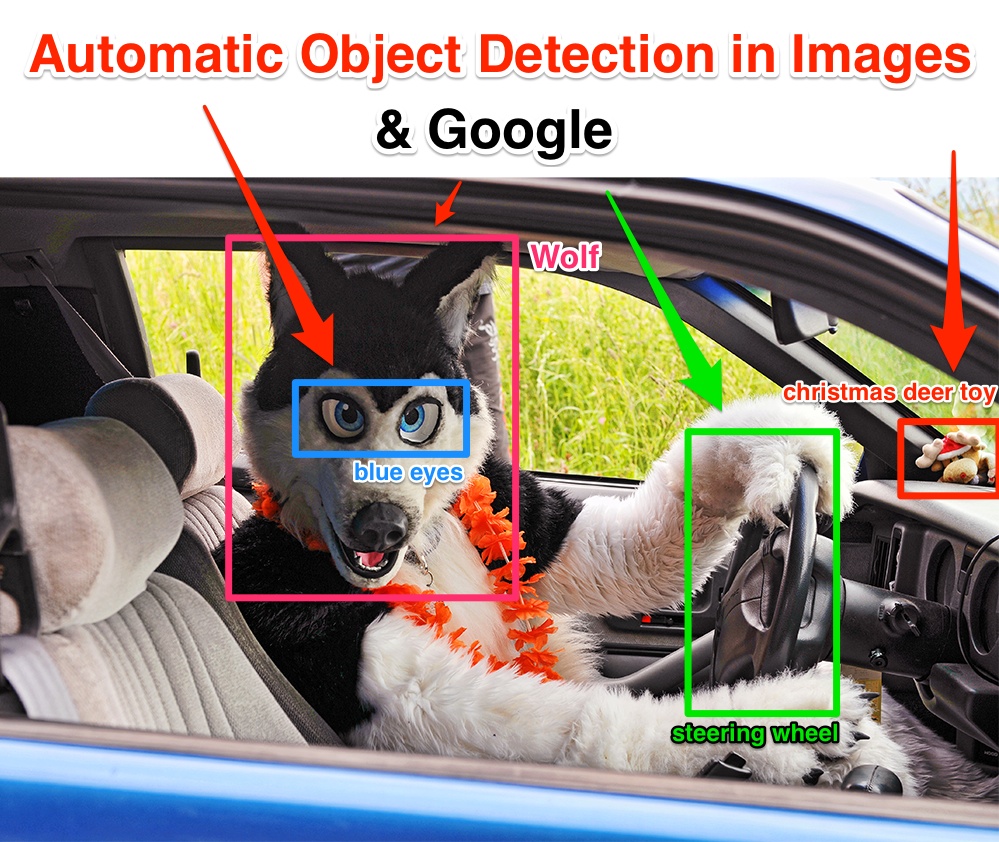

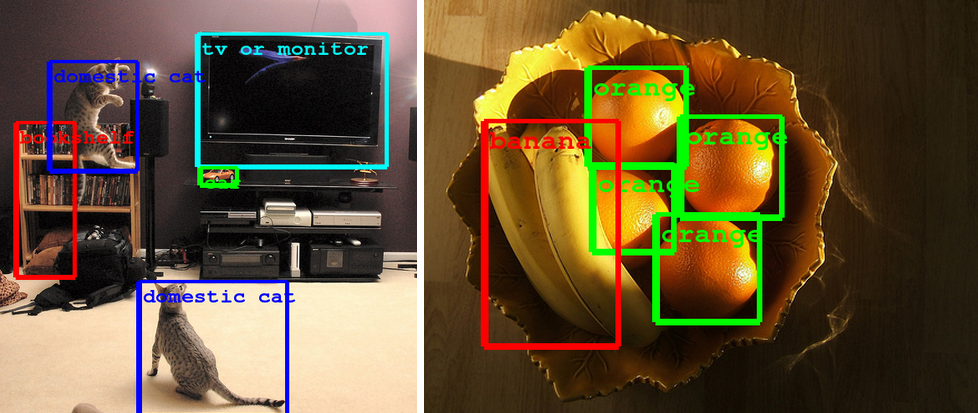

In order for an algorithm to succeed in this challenge, it must be able to describe a complex scene by accurately locating and identifying many objects in it. This means that, given a picture of someone riding a moped, the software should be able not only to distinguish between several separate objects (moped, person and helmet, for instance), but also to place them correctly in space and classify them correctly. As we can see in the image below, separate items are correctly identified and classified.

Screenshot taken from http://googleresearch.blogspot.ro/2014/09/building-deeper-understanding-of-images.html
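To make “locating and identifying” a bit more concrete, here is a minimal Python sketch of how a single detection could be scored. It assumes a detection is a label plus a bounding box, and it counts the detection as correct when the label matches and the predicted box overlaps the annotated box by at least half (intersection over union of 0.5, a commonly used overlap criterion). The `Box` class, the function names and the coordinates are all made up for illustration; this is not the challenge’s actual evaluation code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Axis-aligned bounding box in pixel coordinates (hypothetical format)."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def area(self) -> float:
        # A box with non-positive width or height has zero area.
        return max(0.0, self.x_max - self.x_min) * max(0.0, self.y_max - self.y_min)


def iou(a: Box, b: Box) -> float:
    """Intersection over union: 1.0 for identical boxes, 0.0 for disjoint ones."""
    overlap = Box(
        max(a.x_min, b.x_min),
        max(a.y_min, b.y_min),
        min(a.x_max, b.x_max),
        min(a.y_max, b.y_max),
    )
    intersection = overlap.area()
    union = a.area() + b.area() - intersection
    return intersection / union if union > 0 else 0.0


def is_correct(pred_label, pred_box, true_label, true_box, threshold=0.5):
    """A detection counts only if the class AND the location are right."""
    return pred_label == true_label and iou(pred_box, true_box) >= threshold


# Illustrative values only: a predicted "moped" box against its annotation.
annotated = Box(0, 0, 10, 10)
predicted = Box(1, 1, 10, 10)
print(is_correct("moped", predicted, "moped", annotated))  # label and box both match
print(is_correct("car", predicted, "moped", annotated))    # right place, wrong class
```

The point of the sketch is that getting the label right is not enough: a “moped” label attached to the wrong region of the picture would still be counted as a miss.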

Any search engine with this capability would make it extremely difficult for anyone to try and pass pictures of people riding mopeds as “race drivers driving Porsche” pictures by stuffing them with metadata that simply says so. As you can see in the examples below, the technology is pretty advanced and any misleading scheme could be easily exposed.

Screenshot taken from http://googleresearch.blogspot.ro/2014/09/building-deeper-understanding-of-images.html
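As a rough illustration of how such a mismatch could be exposed, the sketch below compares the keywords claimed in an image’s metadata with the labels a detector reports. Everything here is hypothetical: the function name, the `(label, score)` detection format, the confidence scores and the 0.5 cut-off are assumptions for the example, not a description of how Google actually does it.

```python
def unsupported_claims(claimed_keywords, detections, min_score=0.5):
    """Return the claimed keywords that no confident detection backs up.

    `detections` is a list of (label, score) pairs, a made-up format
    standing in for whatever an object detector would output.
    """
    confident_labels = {label.lower() for label, score in detections if score >= min_score}
    claimed = {keyword.lower() for keyword in claimed_keywords}
    return sorted(keyword for keyword in claimed if keyword not in confident_labels)


# Hypothetical detector output for the moped picture discussed above.
detections = [("moped", 0.93), ("person", 0.88), ("helmet", 0.71)]

# Metadata stuffed with misleading keywords: none of them is supported.
print(unsupported_claims(["Porsche", "race driver"], detections))

# Honest metadata: nothing to flag.
print(unsupported_claims(["moped", "helmet"], detections))
```

A real system would need synonyms and related concepts rather than exact string matches, but even this naive check shows why metadata alone stops being enough once the image content itself can be read.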

Google took part in this year’s ILSVRC, won with its team GoogLeNet, and made the code open source in order to share it with the community and help the technology advance faster. This has tremendous significance in terms of progress, since ILSVRC 2014 is already hundreds and even thousands of times more complex than the similar object detection challenge of just two years ago. Even within the span of a single year the advance is significant: compared to 2013, 60,658 new images were collected and fully annotated with 200 object categories, yielding 132,953 new bounding box annotations. Hoping I am not bringing too much data into the equation, this means that the number of images grew from about 395,000 in 2013 to about 457,000 only one year later. And yes, this sounds as twisted and impressive as Google’s data center, which you can see in the image below.

Google Data Center

The winning algorithm this year uses the DistBelief infrastructure, which not only looks at images in a very complex manner and can identify objects regardless of their size and position within the picture, but is also capable of learning. This is neither the first nor the only time Google has focused on machine-learning technologies to make things better. Last year, Andrew Ng, director of Stanford University’s Artificial Intelligence Lab and former visiting scholar at Google’s research group “Google X”, put forward an architecture that is able to learn and grow:

“Our system is able to train 1 billion parameter networks on just 3 machines in a couple of days, and we show that it can scale to networks with over 11 billion parameters using just 16 machines.”

Google+ Already Uses Object Detection in Images. Google Search Next?

In fact, a smart image detection algorithm based on a convolutional neural network architecture has already been in use at Google+ for more than a year. Part of the code presented at the ImageNet challenge has been used to improve the search engine’s algorithms when it comes to searching for specific (types of) photos, even when they were not properly labeled.

Neural network architectures have been around since the 1990s, but it is only recently that both the models and the machines have become efficient enough to make the algorithms work for billions of images at a time. The advances could be quantified in any number of ways, but in terms of user experience, there are several things to keep in mind:

1. For one, Google’s algorithm has proven itself to be able to match objects from web images (close-up, artificial light, detailed) with objects from unstaged photos (middle-ground, natural light with shadows, varying levels of detail). A flower seemed just as much a flower by any other resolution or lighting conditions.

2. Moreover, the big G managed to identify some very specific visual classes beyond the general ones. Not only did it identify most flowers as flowers, it also identified certain specific flowers (such as hibiscus or dahlia) as such.

3. Google’s algorithms also managed to do well with more abstract categories of objects, by recognizing a fair and varied number of pictures that could, for instance, be categorized as “dance” or “meal”, or “kiss”. This takes a lot more than simply detecting an orange as an orange.

Screenshots taken from http://googleresearch.blogspot.ro/2013/06/improving-photo-search-step-across.html

4. Classes with multi-modal appearance were also handled well. “Car” is not necessarily an abstract concept, but it can be a little tricky. Is it a picture of a car only if we can see the whole car? Is the inside of a car still a picture of a car? We would say yes, and so does Google’s new algorithm, it seems.

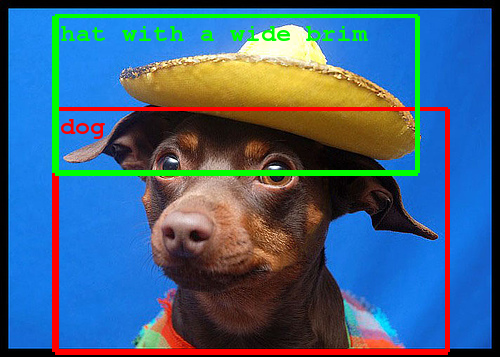

5. The new model is not without sin. It makes mistakes. But it is important to note that even when talking about mistakes, those show progress. Given a certain context, it is reasonable to mistake a donkey head for a dog head. Or a slug for a snake. Even in error, Google’s current algorithm is head and shoulders above previous algorithms.

Screenshots taken from http://googleresearch.blogspot.ro/2013/06/improving-photo-search-step-across.html

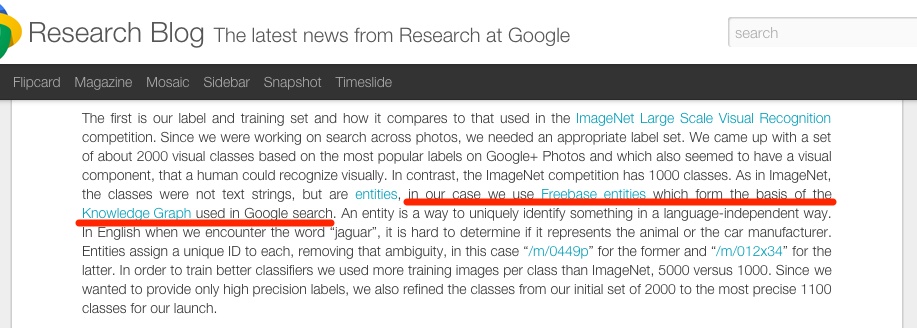

Is a Google Knowledge Graph & Image Detection “Marriage” Possible?

As impressive as Google’s new model is, it is even more impressive that it is just one part of a larger picture of learning machines, perfectly integrated with the Knowledge Graph, which is already impressive on its own. The “entities” which form the basis of the latter also help shape the classification and detection capabilities of the image detection algorithm. Objects and classes of objects are each given a unique code (so, for instance, a jaguar, the animal, could never be mistaken for a Jaguar, the car) and then used to help the algorithm learn by providing it with a knowledge base against which to test its attempts.
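The jaguar example can be sketched in a few lines of Python. The idea is that the same word maps to several entities, each with its own unique code, and the other objects detected in the picture decide which entity is meant. The entity codes, the `related` word lists and the scoring by simple overlap are all invented for this illustration; they are not real Knowledge Graph identifiers or Google’s actual method.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Entity:
    entity_id: str        # made-up unique code, not a real Knowledge Graph ID
    name: str
    kind: str
    related: frozenset    # objects that tend to appear alongside this entity


ENTITIES = [
    Entity("ent:0001", "jaguar", "animal", frozenset({"jungle", "fur", "spots", "tree"})),
    Entity("ent:0002", "Jaguar", "car", frozenset({"wheel", "road", "headlight", "driver"})),
]


def resolve(word, detected_objects):
    """Pick the entity named `word` that best fits the objects seen in the image."""
    candidates = [e for e in ENTITIES if e.name.lower() == word.lower()]
    if not candidates:
        return None
    detected = set(detected_objects)
    # Score each candidate by how many of its related objects were detected.
    return max(candidates, key=lambda e: len(e.related & detected))


print(resolve("jaguar", ["road", "wheel"]).entity_id)    # context points to the car
print(resolve("jaguar", ["jungle", "spots"]).entity_id)  # context points to the animal
```

Once a picture is tied to a code rather than to a string, the keyword “jaguar” in a file name no longer decides which searches the image is relevant for.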

Google is turning search into something that understands and translates your words and images into the real-world entities you’re talking about.

That’s right! It doesn’t just generate results based on specific words or images; it really “understands” you.

Screenshot taken from http://googleresearch.blogspot.ro/2013/06/improving-photo-search-step-across.html

How Object Detection in Images Might Affect Your SEO

These technological advances will enable even better image understanding on our side and progress is directly transferable to Google products such as photo search, image search, YouTube, self-driving cars, and any place where it is useful to understand what is in an image as well as where things are. @GoogleResearch

Screenshot taken from http://googleresearch.blogspot.ro/2014/09/building-deeper-understanding-of-images.html

In terms of plain SEO, this move is tremendous and it will help foster Google’s vision of quality-content driven SEO. Just as it has become increasingly hard to trick the search engine through various linking schemes, it might become comparably difficult to trick it with mislabeled photos or the sheer amount of them. Good content (i.e. quality pictures, clearly identified objects, topical pictures) is likely to become central soon enough when it comes to visual objects as well.

Tagging is also likely to become much more about photographic composition rather than manual or artificial labeling. If you want your picture to show up when people are looking for “yellow dog” images, your SEO will have to start with how you take the picture and what you put in that picture.

How Object Detection in Images Really Works

If you haven’t already, it’s time to grab a cup of coffee and bear with me while we make a short journey into the “geeks’ territory”.

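So what is so particular about the DistBelief infrastructure? The straight answer is that it makes it possible to train neural networks in a distributed manner, across many machines. The GoogLeNet architecture trained on it is, according to its authors, based on the Hebbian principle and on the principle of scale invariance (processing an image at several scales).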

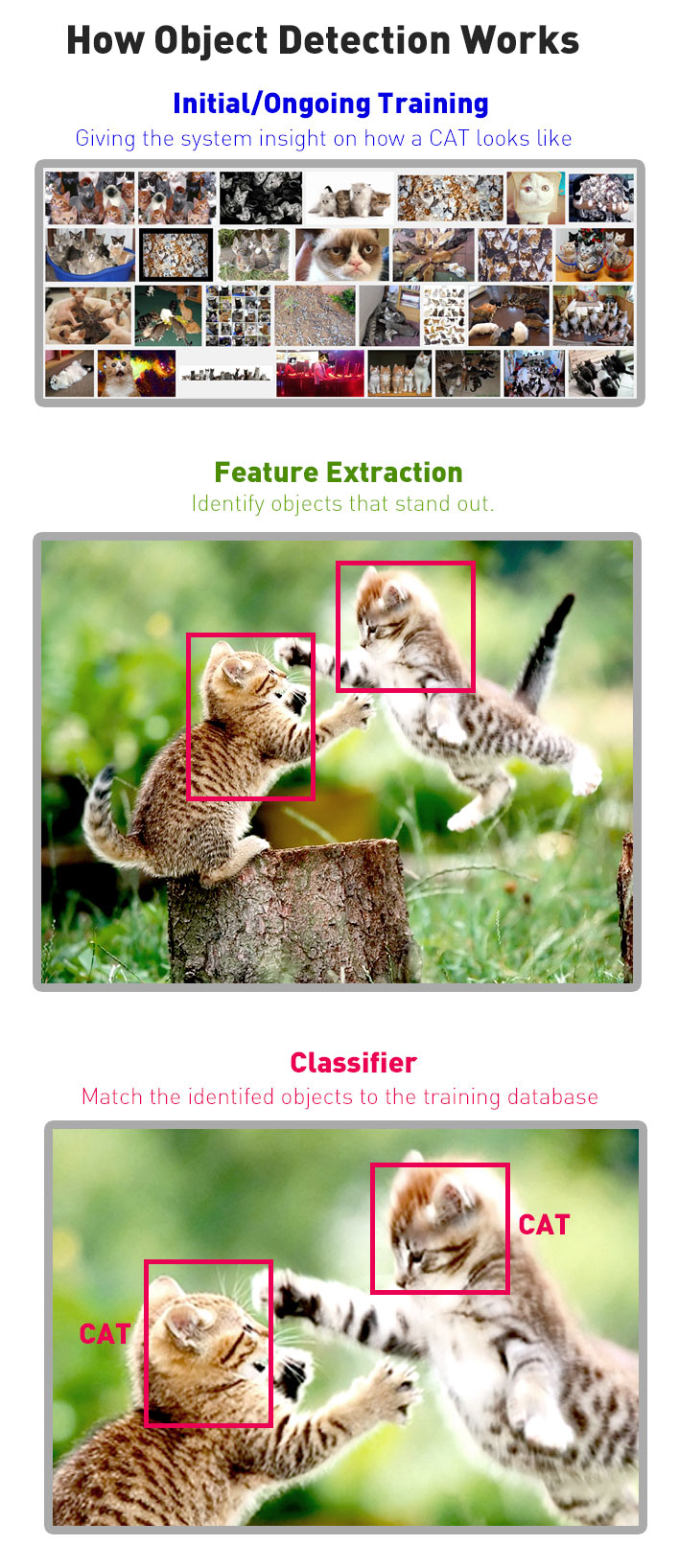

Still confused? Yes, there is a lot of math packed in there, but it can be unpacked at a more basic level. The term “neural networks” refers to what you would expect: how the neurons inside our brains are wired. So when we talk about them here, we actually mean artificial neural networks (ANNs), which are computational models based on the ideas of learning and pattern recognition. That makes sense in the context we are talking about, right? The example below of how object detection works might shed some light on this pretty-hard-to-understand field.

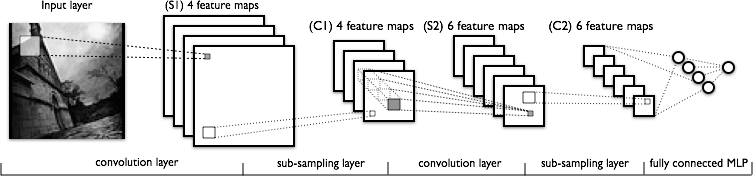

GoogLeNet used a particular type of ANN called a convolutional neural network, which is based on the idea that individual neurons respond to different (but overlapping) regions in the visual field and that it is possible to tile them to get a more complex image. To grossly simplify, it’s a little bit like working with layers. One of the perks of a convolutional neural network is that it handles translation very well. In mathematics, translation means moving an object from one place to another without rotating or resizing it. So if we put together all we know so far, a network like GoogLeNet is pretty good at recognizing an object no matter where it is placed in a certain picture. It can also do more than that. Scale invariance is also a mathematical principle, and it basically states that the properties of objects do not change if scales of length are multiplied by a common factor. This means that the network should be pretty good at recognizing an orange regardless of whether it is as big as your screen or as tiny as an icon: it will still be an orange and recognized as such (yay for oranges!).

Screenshot taken from http://deeplearning.net/tutorial/lenet.html
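The translation property can be demonstrated with a toy example in plain Python. The sketch below slides one small filter over a tiny “image” (the basic operation of a convolutional layer) and then keeps only the strongest response. The 2x2 pattern, the 6x6 canvas and the helper names are assumptions chosen for the illustration; a real network learns many filters over many layers, and this sketch covers only position, not scale.

```python
def correlate2d(image, kernel):
    """Slide `kernel` over `image` and record the response at every position."""
    k_rows, k_cols = len(kernel), len(kernel[0])
    out = []
    for r in range(len(image) - k_rows + 1):
        row = []
        for c in range(len(image[0]) - k_cols + 1):
            row.append(sum(image[r + i][c + j] * kernel[i][j]
                           for i in range(k_rows) for j in range(k_cols)))
        out.append(row)
    return out


def strongest_response(feature_map):
    """Return (value, row, col) of the largest response in the feature map."""
    return max((value, r, c)
               for r, row in enumerate(feature_map)
               for c, value in enumerate(row))


def place(pattern, size, top, left):
    """Draw `pattern` on an empty size x size canvas at the given offset."""
    canvas = [[0] * size for _ in range(size)]
    for i, row in enumerate(pattern):
        for j, value in enumerate(row):
            canvas[top + i][left + j] = value
    return canvas


PATTERN = [[1, 0],
           [0, 1]]  # a tiny diagonal stroke standing in for "the object"

top_left = strongest_response(correlate2d(place(PATTERN, 6, 0, 0), PATTERN))
shifted = strongest_response(correlate2d(place(PATTERN, 6, 3, 2), PATTERN))

print(top_left)  # strongest response and where it occurred
print(shifted)   # same strength, different location
```

The filter responds just as strongly wherever the pattern sits; only the location of the peak changes. Keeping the peak and discarding its position is, very roughly, what lets a network say “there is an orange” without caring where in the frame it is.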

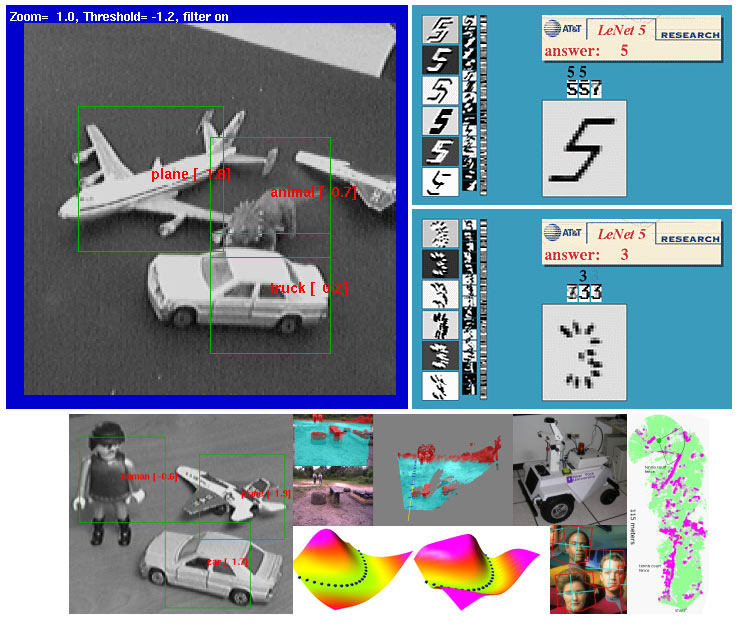

The Hebbian principle has less to do with object recognition and more to do with the learning, or training, of neural networks. In neuroscience this principle is often summarized as “Cells that fire together, wire together”. The cells being, of course, neurons. Applied to artificial neural networks, this principle basically means that software built on it would be able to teach itself and get better over time.

Screenshot taken from http://yann.lecun.com/
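For the curious, “fire together, wire together” can be written as a very small update rule: the connection between an input and a neuron is strengthened in proportion to how active both are at the same time. The sketch below is the textbook form of that rule in plain Python, with a made-up learning rate and made-up starting weights. It only illustrates the principle and is not a description of how GoogLeNet itself is trained.

```python
def hebbian_step(weights, inputs, rate=0.1):
    """One Hebbian update: each weight grows by rate * input * output."""
    output = sum(w * x for w, x in zip(weights, inputs))
    return [w + rate * x * output for w, x in zip(weights, inputs)]


# Three input connections, all starting equally weak (illustrative values).
weights = [0.1, 0.1, 0.1]

# The first two inputs are repeatedly active together; the third stays silent.
pattern = [1.0, 1.0, 0.0]

for _ in range(5):
    weights = hebbian_step(weights, pattern)

print(weights)  # the two co-active connections grew, the silent one did not
```

Nobody tells the neuron which connections matter. The ones that are repeatedly active together simply get stronger, which is the sense in which such a system “teaches itself”.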

Google’s Acquisitions Regarding Artificial Intelligence and Image Understanding

Google has already developed some of these technologies on its own; others, such as Andrew Ng’s architecture, it acquired. It is also worth noting Google’s strategy of growing while letting the market grow, by leaving many of its solutions open source for others to improve on (why stifle the competition when you can afford to buy out the competition?).

One of the most interesting acquisitions, though, is DeepMind, a $400 million investment. Why would Google make such a big purchase, you might ask?

It’s very likely that this particular acquisition, aimed at adding skilled experts rather than specific products, marks an acceleration in efforts by Google, Facebook, and other Internet firms to monopolize the biggest brains in artificial intelligence research. Of course, this is just a supposition. Yet, for a better understanding of this matter, we highly recommend that you watch the video below, which gives a very well illustrated picture of how big Google actually is and how it impacts our everyday life.

Conclusion

The ability of humans to recognize thousands of object categories in cluttered scenes, despite variability in pose, changes in illumination and occlusions, is one of the most surprising capabilities of visual perception, still unmatched by computer vision algorithms. Or at least this is what an article from 2007 stated. Here we are, a few years later, facing a situation where search engines are about to use automatic object recognition on a daily basis. What is more, Google is already taking steps forward: it has held a patent for automatic large-scale video object recognition since 2012.

Organic results might not look the way they do today, and important improvements will surely be made soon. Google is switching “from strings to things” as the Knowledge Graph becomes fully integrated in the search landscape. Algorithms will change too, and they will probably be more closely tied to the actual entities in the content and to how these entities are linked together.

It’s true that only time and context will prove us wrong or right. Things can only be understood backwards, but they must be lived forward.

Photos 1, 2


Hi Razvan, it is a pleasure to follow and read your articles. I was also wondering: won’t Google (if it hasn’t already) add an OCR routine to better interpret the content of some images?

the technology exists. it is being used in other Google services but in Google Search for now it seems that it is not used. Though they use other interesting stuff. Here is another indepth research about this stuff https://cognitiveseo.com/blog/5909/did-google-read-text-image-can-affect-my-rankings/ that I wrote a while ago.

Very well written and explained post. A real good insight of future ranking by Google and maybe other search engines. Razvan, you have done a fabulous job on this.

tks for the appreciation Fredrick. To be prepared for the future you have to at least think about the evolution. So knowing this stuff would help everyone to optimize on what probably will follow 🙂

Because Google has cut off direct traffic for image viewing so effectively in image search I’m not sure putting any effort into appeasing Google image search has a ROI. For instance, in Webmaster Tools I show a large number of hits on my images in search queries, but receive almost none of that traffic to my site (in Google Analytics). I cannot optimize that traffic so why would I really care what Google does for image search?

Interested in your opinion.

You could watermark your images (if it makes sense) or add a logo at the bottom. There are also other techniques that would help you tell viewers to visit your site if they see the image in the Google results.

Also, this might affect your content. Think about the big picture. The image might also influence your page ranking, as the image might contain a “blue cat” even though you did not mention the string “blue cat” in your content 😉

here is more info on Google Images redirects and stuff http://stackoverflow.com/questions/14796003/prevent-image-hotlinking-in-google-image-search

hope it helps.

Really well written.

We have been renaming images to keywords for some time to gain the added benefit of SEO, but never did I think we would be controlling the image itself to match KWs.

Such an E ticket ride Google!

and the image might even control the ranking of the site/page as I was mentioning in a comment above 😉

when will be this ready in google

there is nothing certain about this. this is a hypothesis on what Google could do, based on the interest they have shown in the field of object detection in images 😉

Really impressive article Razvan.

I’ve often wondered when indexing & analysing images in the way you have now described would eventually occur. It’s pretty impressive to see just how far down the path Google already are with using this technology.

A few questions:

Let’s say we have a photo of a Promotional Hat and in that image, it’s pretty obvious it’s a hat, it’s a blue hat and it has the word Promotional Hat, do you think adding keywords to images, such as ‘Blue Promotional Hat’ could also further help to rank better?

– Paul.

tks Paul.

I am sure it will. And regarding OCR I wrote another article and did some very interesting tests a while ago. https://cognitiveseo.com/blog/5909/did-google-read-text-image-can-affect-my-rankings/

Thank you Razvan! Truly a in-depth article. On my experience image optimization instructions traditionally just state descriptive name and alt tag, but there really is more into it.

tks Ari.indeed it is. and if it isn’t now. it will be in the future.

Everytime I read one of your articles I am like WTF… how deep does this guy dive into the topic 🙂 Great work!

Still, I believe Google is far less smart than we all think. Looking at their moves and strategies it is clear that they are still human… They might be ahead but not that far. I think most of it is smoke & mirrors.

This technology sounds amazing and would change search engine optimization forever but I just can’t imagine them doing this effectively at the moment. The internet is a big place after all 😀

It would solve a lot of issues though. Images talk louder than words. Right now they are very dependent on text which this would solve.

Anyways, thanks again!

Greetings from Innsbruck,

Alex

tks for the appreciation Alex 😉

the technology exists. it is being fine-tuned.

the other problem is that they should be processing very large chunks of big data. And with big data big problems appear. Such as the time required to process the data. This is where they are innovating also with the “Distbelief Infrastructure”:

“Our system is able to train 1 billion parameter networks on just 3 machines in a couple of days, and we show that it can scale to networks with over 11 billion parameters using just 16 machines.”

So yes. It may be smoke and mirrors now. But I do not think in the future it will be. And I am referring to the near future. 1-3 years.

They are progressing but everything can be exploited and BH is doing that now. It still works but is not as efficient as it was.

Hi Razvan!

Appreciate the well written post. You’re like Bill Slawski 2.0 or somethin’ 😀

I certainly can see this happening soon for search since they are already using these elsewhere. I can’t wait. The future of search definitely looks bright and it’ll keep our work more interesting.

Have a great weekend man!

lol. funny comparison.

Too many people are stuck in Search 1.0 (aka old hat exact match anchor text, web directory linking stuff). We are already experiencing Search 2.0, and Search 3.0 is just around the corner. So be prepared: the “best” is yet to come.

great weekend!

This is a really value content 🙂

Hi Razvan,

thanks for your well and deeply researched article. I’m a little bit afraid, what is possible for google with the power of their machines and algorithms. Not for image optimization to understand websites, more to recognize people on images. Especially since “don’t be evil” is no longer a slogan for google…

Does or will the IPTC meta data in a JPEG photo influence the what Google sees in the photo?

Well explanation about google image index procedure, I didn’t know indeed about lot of image boot system, by the way your content writing skill is well and understandable. Sometimes I feel irritated when I read everything and don’t get anything what exactly they want to say.

Awesome post. you can rank the image by giving the main key words in alter tag, title tag and head of your page. so that when people search the name of the image or the web site, you have a chance to rank your image in search result.

this technology is possible but it creates much data. Will see how the computer technology evolves.

Does it make any sense to add my url in the description of an image? Also, curious to know if it will affect job postings or not if I were to attache company logos as part of posts.

Well written and already shared. Thank you

Facebook showed something similar last year at their F5. That’s not really SEO relevant, but they showed and recognized a lot of details.

If they can, i could imagine what Google can…