Yesterday at SMX, Matt Cutts tweeted about a new update that targets highly spammed keywords such as:

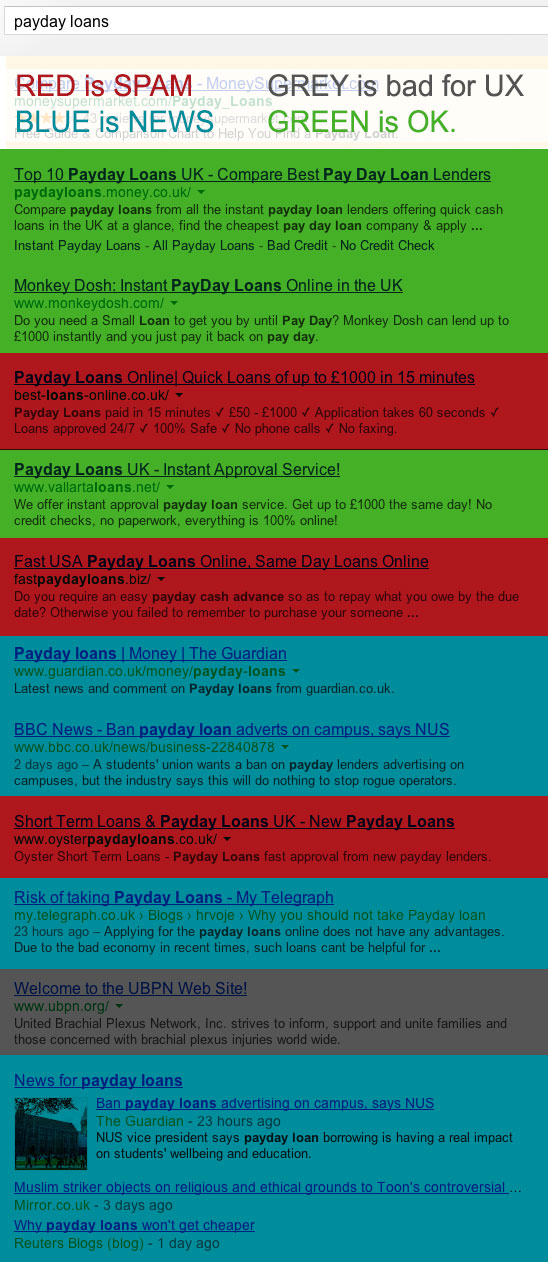

- Payday loans

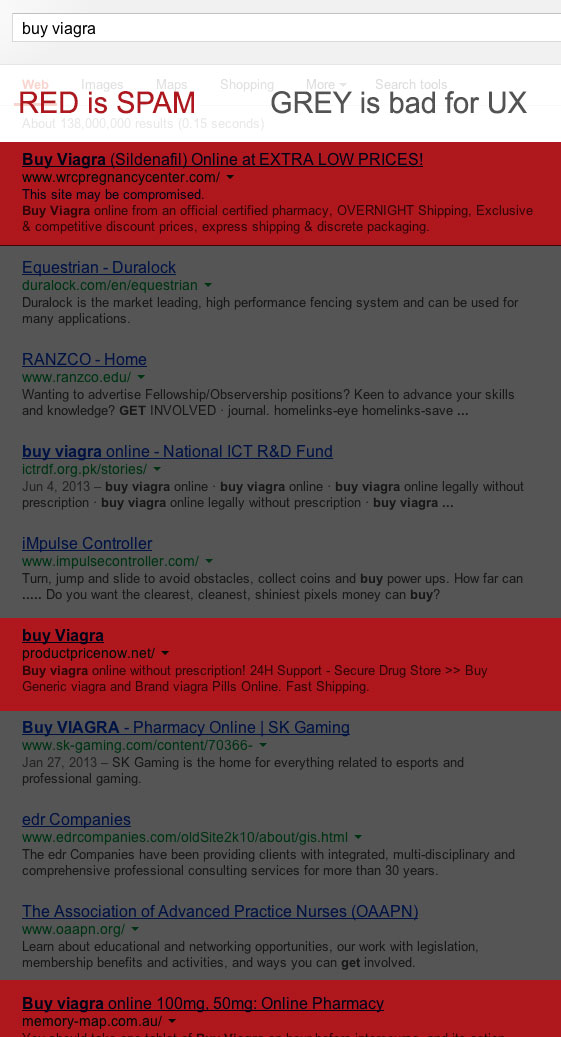

- Buy Viagra

- Adult stuff

- Other highly competitive keywords (practically everything that makes tons of money…)

I am glad that Google has finally addressed the problem that I publicly mentioned a year ago. (It is really funny that the post was published on June 13, 2012 and the “response” came on June 12, 2013.)

This is a very important update because it tries to penalize highly aggressive spam sites faster.

Looking at some of the queries affected by this update, I can see a trend in the returned results across all of them. It is a mix of:

- “Authority” sites (more or less)

- Newspapers & Informational sites

- Webspam (yes it is still here)

- Unrelated sites (due to massive spam)

Results after the Update

After a quick study of the results, it seems that, depending on the SPAM level of each keyword, Google now has a higher chance of showing irrelevant content or too much informational content. In the “buy Viagra” case, which is a commercial keyword with immediate user intent, I can only see unrelated content and spam sites. The SPAM has been reduced, but now Google might need to work on the relevancy of the results.

Here are a few hypothetical questions:

What if in a specific niche no sites “play” by Google’s rules?

What sites would Google choose to rank?

Policing these keywords is really tough because of the “eco-system” that was formed around them. For years, almost anyone trying to rank on these keywords did a lot of shady stuff. Even the legit companies did.

The exaggerated competition and the “holes” in the system led to a never-ending spamming activity, practiced by almost everyone in an attempt to trick the system. Out of the top 10 results, you could easily see around 7 to 10 spammy results.

My personal theory on why these keywords are so tough to police algorithmically is that the entire “eco-system” around them is made up of spammy sites. Let’s give it a hypothetical number of 95%. We have another 3% informational sites and maybe another 2% legit companies in a particular super highly competitive niche.

If all the algorithm’s rules were applied “à la carte”, we might end up with no commercial sites ranking on those keywords, and instead have a lot of informational content for a set of commercial keywords. Google ends up with cleaner results, in terms of sites that play by its rules, but this compromises the user experience.
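To make the hypothetical split above concrete, here is a minimal Python sketch of what the result mix could look like once the spammy share is filtered out. The 95/3/2 numbers are the illustrative guesses from this post, not measurements, and the function and category names are made up for the example.

```python
def remaining_mix(shares, removed):
    """Return the percentage mix of what is left after dropping `removed` categories."""
    kept = {name: share for name, share in shares.items() if name not in removed}
    total = sum(kept.values())
    if total == 0:
        return {}  # nothing left to rank
    return {name: 100 * share / total for name, share in kept.items()}


# Hypothetical shares from the post (illustrative only, not measured data).
ecosystem = {"spam": 95, "informational": 3, "legit_commercial": 2}

mix = remaining_mix(ecosystem, removed={"spam"})
print(mix)  # {'informational': 60.0, 'legit_commercial': 40.0}
```

Under these assumed numbers, informational pages would already outnumber commercial ones among the survivors, and if the few legit companies also bent the rules at some point, the commercial share would drop to zero.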

That is why it might be so hard to police these keywords from a conceptual point of view and not from a technical point of view.

The user doesn’t know and doesn’t care what’s a spam site. He expects to find a site that solves his personal problems when he does a specific search.

The policing problem that Google has is finding the fine line between user experience and ranking relevant sites (with or without shady techniques).

From an algorithmic point of view, here is something that still works.

An Active Google Algorithm “Hole”

There are still “holes” in the Google Algorithm, and one of them is Google’s inability to visually crawl pages quickly. One of the latest black hat techniques applied on some of the highly competitive keywords is to get hidden links that a normal crawler cannot detect, but a visual one can. Google catches these spammy link profiles, but not as fast as the sites are spamming the results. This leads to sites that stay in the results for a few days and then get dropped. The spam process is recurrent and repeats over and over at a very high speed.

Here is a post I wrote half a year ago that addresses this “hole”.

I end my post with the desire to learn more from your experiences. Looking forward to your comments.
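As a rough illustration of why this is hard, here is a minimal Python sketch that flags links hidden with inline CSS. It is a simplified assumption of how a check could look, not how Google does it: the `HIDING_STYLES` list, the class, and the function name are hypothetical, and it only inspects the `style` attribute on the link itself.

```python
from html.parser import HTMLParser

# Hypothetical, deliberately short list of inline-style rules that hide an element.
HIDING_STYLES = ("display:none", "visibility:hidden")


class HiddenLinkFinder(HTMLParser):
    """Collects hrefs of <a> tags whose own inline style hides them."""

    def __init__(self):
        super().__init__()
        self.hidden_links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attributes = dict(attrs)
        # Normalize the inline style so "display: none" matches "display:none".
        style = (attributes.get("style") or "").lower().replace(" ", "")
        href = attributes.get("href")
        if href and any(rule in style for rule in HIDING_STYLES):
            self.hidden_links.append(href)


def find_hidden_links(html):
    finder = HiddenLinkFinder()
    finder.feed(html)
    return finder.hidden_links


sample = (
    '<p>Read our <a href="https://example.com/guide">guide</a>.</p>'
    '<a href="https://example.com/loans" style="display: none">payday loans</a>'
)
print(find_hidden_links(sample))  # ['https://example.com/loans']
```

A text-only check like this misses links hidden through external stylesheets, JavaScript, or off-screen positioning. Catching those requires actually rendering the page, which is the slow “visual” crawling step described above.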


Interesting analysis and it certainly is vital to look at the fact Google are ranking news sites. I’ve also spotted this in other niches for commercial terms and although it does ensure good sites it isn’t always relevant as you suggest.

I do however think it’s a myth that some industries are only spammy. For example the Payday loan industry does have many sites who play by the rules and deserve to rank but simply don’t.

One thing I spotted when I checked the results was some hacked sites were showing for “payday loans”. Their only mention of the terms was the word hidden once in the source code but Google ranked them because the sites themselves were strong. Further proof they are prioritising the site strength over relevance to the term. Sadly that’s going to be easy to game if unrelated hacked sites can rank.

It’ll be interesting to see this change.

Mike,

Indeed, there is no industry that is SPAM only, but it can be 98% or 99% SPAM. The rest are “OK” sites that got “KO” by the SPAM. They can’t compete simply because the trend is set by the 99%.

If you look at the buy viagra search, you will see that the majority of the grey sites are in the situation you describe, and that is because they were once hacked and got a lot of links pointing at them with commercial anchor text for the targeted keywords (like a Google Bomb).

Unfortunately, as you say, they tend to rank higher. Again, it is another delay in the Google algo until it figures out what is going on.

my 2c 🙂