Google continues to make algorithm updates and change the search results to please the user. Similar to the saying “the client is always right”, here the saying becomes “the user is always pleased”. Or at least, most of the time, that’s what Google is trying to achieve.

To meet that need, deliver good-quality content and improve search, Google officials announce the major algorithm updates they roll out from time to time. This way, webmasters, content writers, SEO analysts and other SEO professionals can take action and update their websites accordingly.

Most of the time, it is hard to understand what has changed, what we should do or how our site should comply with the requirements. With BERT, Google’s latest algorithm update, things can get even more confusing.

We wanted to make it easier for you to understand the evolution of Google’s algorithm updates, what Google focuses on, the important role the user plays in search, what a page needs in order to rank, what types of pages Google disapproves of and other interesting findings from the quality rater guidelines.

The Search Quality Rating Guidelines are the standard all websites should comply with. After a thorough analysis, here’s what you should know about Google’s algorithm updates in order to deliver the right content:

- Google’s Algorithm Updates Purpose – Improve the Search

- Google’s Main Focus – Quality Content

- Google’s Most Important Ranking Factor – The User

- Google Search Quality Rating Guidelines Utility – Improve Google’s Search Algorithm

1. Google’s Algorithm Updates Purpose – Improve the Search

Times change and users’ needs change, so it is only natural that search should improve accordingly. Google’s algorithm updates do just that. What was a top priority a few years ago might carry a different weight now. Google wants to keep results relevant as content on the web changes.

Google has mentioned that it makes thousands of updates every year, but only a few major ones are officially announced. Below is an example of an official announcement from Danny Sullivan, Google’s Search Liaison, about the last confirmed broad core update.

This week, we released a broad core algorithm update, as we do several times per year. Our guidance about such updates remains as we’ve covered before. Please see these tweets for more about that: https://t.co/uPlEdSLHoX https://t.co/tmfQkhdjPL

— Google SearchLiaison (@searchliaison) March 13, 2019

First things first. Google started a new era of SEO back in 2003, when the Florida Update set out to get rid of over-optimized content (keyword stuffing, invisible or hidden text and so on). The updates that followed continued in the same direction, getting rid of manipulative link building and black hat techniques that didn’t serve the user’s best interest but were rather gimmicks to fool Google and rank higher.

Then, Google looked at the main content of websites and targeted pages that had lots of ads above the fold blocking the actual content. The focus then shifted further toward the user: algorithm updates were meant to deliver more localized results and detect whether a query or webpage had local intent or relevance.

Many Google Panda updates followed, plus the Payday Loans update, which targeted spammy queries mostly associated with shady industries (payday loans, porn, casinos, debt consolidation, pharma) and included better protection against negative SEO.

The following updates targeted conversational search, such as voice search, with improvements meant to offer more relevant results for these more complex queries. After voice search, another big change was the Mobile-Friendly Update, which rewarded websites with a user-friendly mobile version that delivered quality content on mobile devices too. Later, through mobile-first indexing, Google started to predominantly use the mobile version of content for indexing and ranking.

The next big thing was RankBrain, which Google acknowledged at the time as one of the three main ranking factors, alongside content and links. RankBrain is a machine-learning algorithm that filters search results to offer users the best answer to their query.

Next, Google released a real-time version of Penguin. Google said Penguin now devalues links rather than downgrading the rankings of pages. What followed were many algorithm updates targeting low-quality content, deceptive advertising and UX issues, making search a better place for the user. Google’s constant advice was to “continue building great content.”

In the last year, Google’s algorithms targeted content relevance, rewarding websites that follow Google’s advice to offer the best content on a topic. Google has released many broad core updates and says it will continue to do so. Pages that perform worse than they used to, or that see slight ranking fluctuations, aren’t necessarily penalized or hit by a quality update for violating guidelines; rather, other pages were found to deliver better content and were rewarded with higher positions. This is also the case for Google’s latest update, BERT, which has been described as the biggest step forward for search in the past five years and one of the biggest steps forward in the history of search altogether.

2. Google’s Main Focus – Quality Content

Google Search has changed tremendously over the past few years, including its appearance, how it analyzes the intent behind searches and how results are retrieved.

To borrow Google’s own analogy, imagine that in 2015 you made a list of the top 100 movies to see. If you looked at it now, the list might need some changes. There are lots of reasons: new movies have come out, and others might have changed position because your preferences changed. So the list you made in 2015 looks slightly different from the one you’d make today.

Google’s algorithm updates work similarly: over time, Google started to evaluate other factors. Just think of the battle between content and links. Backlinks had been the king and queen of ranking factors until Google started to steer the ship toward content, and more specifically, quality content.

| “Sometimes, the web just evolved. Sometimes, what users expect evolves and similarly, sometimes our algorithms are, the way that we try to determine relevance, they evolve as well.” |
| --- |
| JOHN MUELLER, Webmaster Trends Analyst at Google |

Google started to focus more on content. Google officials consistently advise building quality content, often referencing a blog post written by Amit Singhal. John Mueller keeps recommending to people who join the Webmaster Hangouts or ask questions on Twitter that they follow the Quality Guidelines and create quality content.

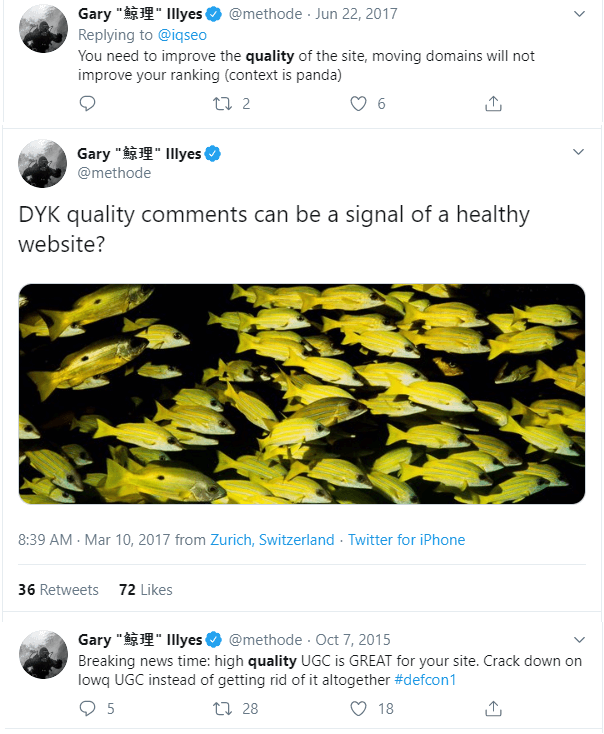

Gary Illyes, on the other hand, has tweeted multiple times about how important it is to have quality content.

3. Google’s Most Important Ranking Factor – The User

Yes, site quality is important, and Google wants to deliver it to serve users’ needs. So isn’t it really the user who dictates how pages are valued in search?

Let’s say a user searches for a tutorial on how to remove wine stains. Which pages they decide to read is influenced by what Google surfaces in search, through algorithms that take into account context from previous searches. The user then opens the pages they want based only on the title and meta description. In the end, it is the user who rewards specific pages.

Moreover, the results shown in the SERP are influenced by location, interests, previous searches and language. So isn’t it really the users who generate a set of results, based on their history?

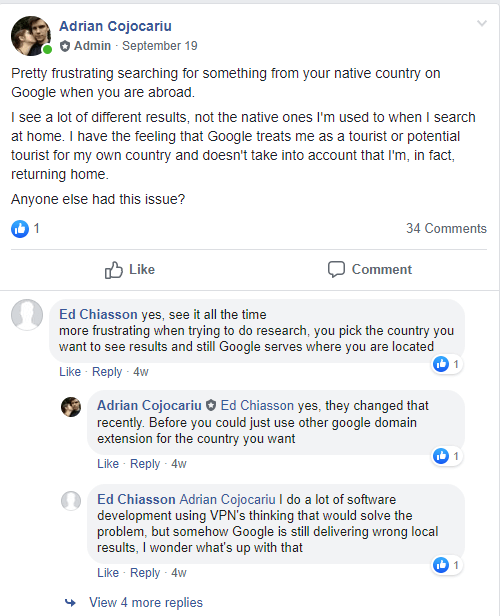

Have you ever wanted to see results for a particular country, only to get results based on your current location? That’s because Google now automatically detects your location and offers localized results in the local language. This is especially frustrating if you’re on vacation and want to search for something in your home country. If you think incognito mode is the answer, you’re wrong: it doesn’t always work, even if you change the language and country manually.

One of our colleagues discussed this topic in the SEO Growth Hacks Facebook group.

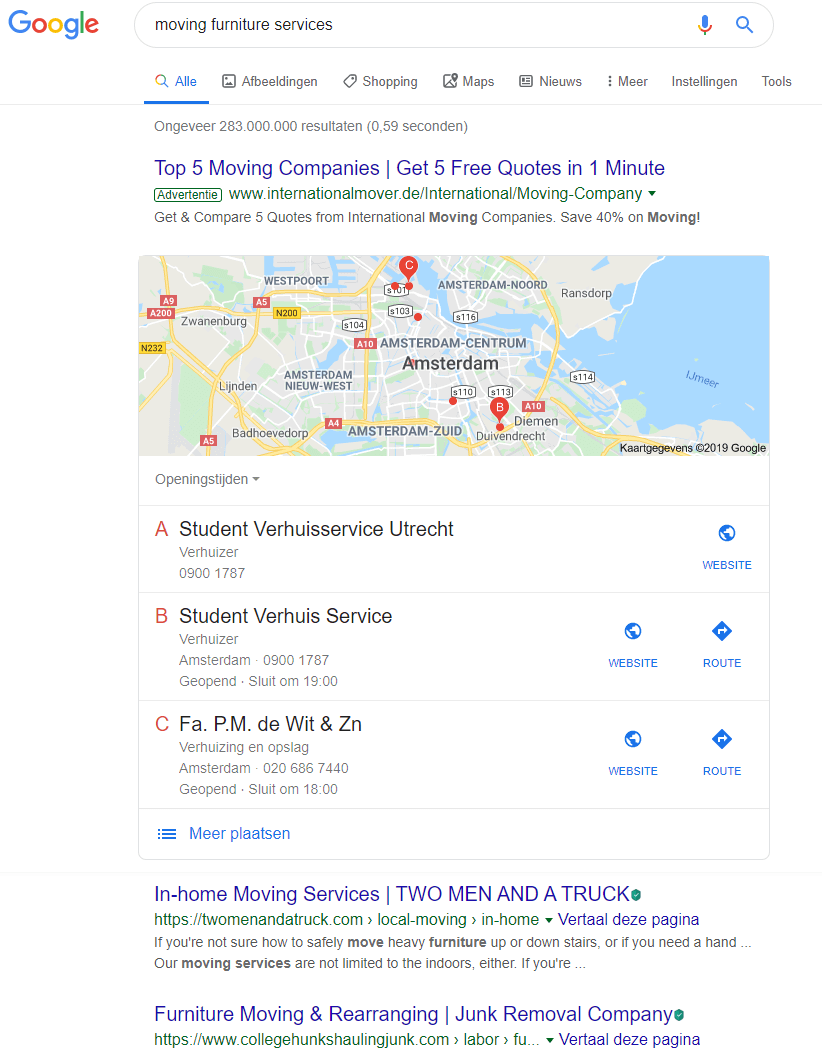

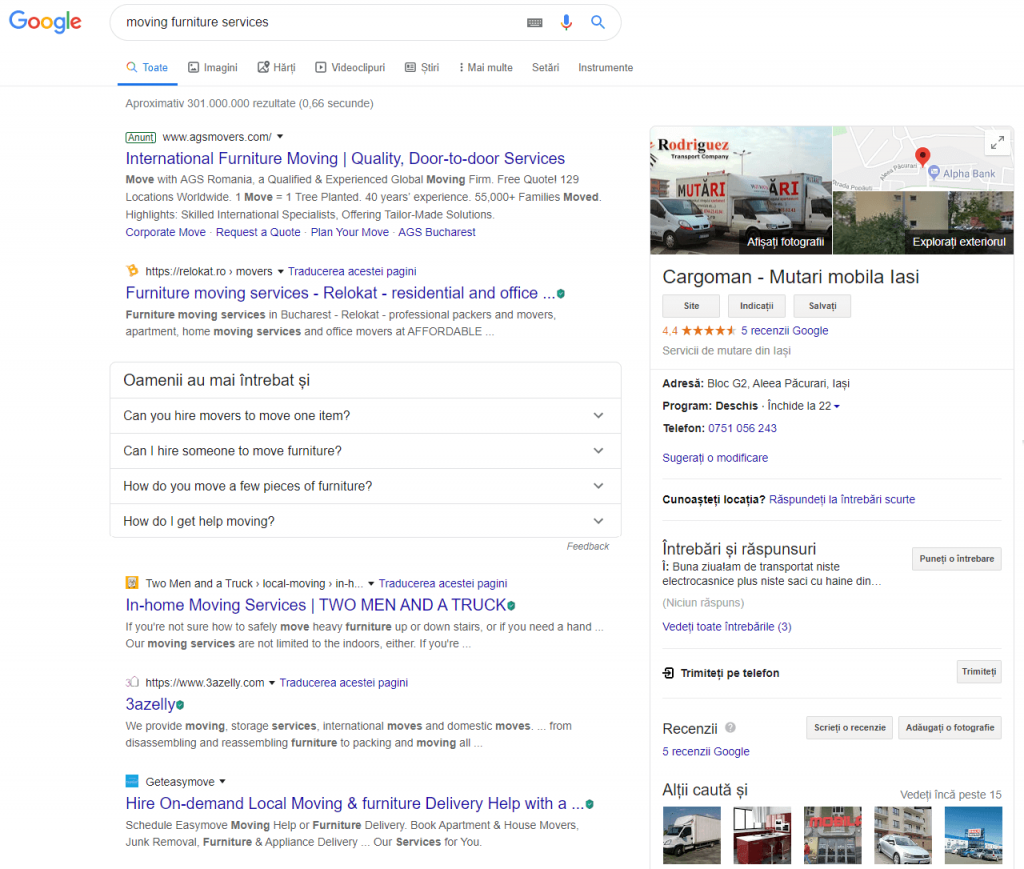

Searches are also influenced by the device you’re using and the websites you’ve previously visited. Take the example below: I asked someone in Germany to search for “moving furniture services” and then performed the same search in Romania. Even though the query is in the same language, the results are different.

Searching for moving furniture services in Germany

Searching for moving furniture services in Romania

It’s understandable that Google wants to offer more relevant results, and location is an important trigger. Google works to deliver information that is findable, accessible, relevant and usable.

Understanding Google and serving the user is a winning recipe.

A good user experience helps sites gain traffic and popularity and, ultimately, start ranking. John Mueller confirmed this in one of his Google Webmaster Hangouts. UX is an indirect factor that marks a high-quality website.

This year at BrightonSEO 2019, where John Mueller and Hannah Smith held a Q&A keynote, John mentioned that their job is to “measure how happy people are with regards to Google in the whole kind of content ecosystem.”

Michelle Wilding Baker, Head of SEO & Content at The Telegraph, who also spoke at BrightonSEO 2019, talked more about the connection between SEO and UX:

| SEO’s change in direction has meant that the user is now first and foremost. Google cares deeply about providing quality experiences for searchers. Therefore UX is more important than ever – and so is collaborating with your UX/CRO department. |
| --- |
| Michelle Wilding Baker, Head of SEO & Content at The Telegraph |

To design a great user experience, we need to follow the quality guidelines, understand how to please Google and make users happy. There’s one question Google asks you to consider when creating content that puts the user at the center:

Does the content seem to be serving the genuine interests of visitors to the site or does it seem to exist solely by someone attempting to guess what might rank well in search engines?

4. Google Search Quality Rating Guidelines Utility – Improve Google’s Search Algorithm

Google’s Page Quality Rating FAQs point out some important characteristics of high-quality content, such as:

- deliver original information;

- describe the topic thoroughly;

- offer insights and additional interesting findings based on studies or personal research;

- write a descriptive title that represents the content well;

- avoid exaggerated titles and fake news;

- build trust and show your content is a reliable source of information, using expert opinions;

- fact-check your content to make sure it doesn’t share misleading information;

- check spelling and correct language style;

- make sure ads don’t interfere with the main content and interstitials don’t block users from seeing it;

- don’t mass-produce content;

- make it mobile-friendly.

Checking all of these elements is the closest thing to a bulletproof vest against Google’s algorithm updates.

A more comprehensive source of information about quality content is the Quality Rating Guidelines, where you can find the questions along with descriptive explanations. Based on these guidelines, Google’s quality raters assess content by analyzing E-A-T (Expertise, Authoritativeness and Trustworthiness). Google created E-A-T to translate algorithm concepts into more user-friendly terms. There is no E-A-T algorithm or E-A-T score, but rather documentation for those eager to better understand what Google looks for when deciding which pages to reward and rank.

“EAT and YMYL are concepts introduced for Quality Raters to dumb down algorithm concepts. They are not ‘scores’ used by Google internally.“ #Pubcon @methode Followup: There is no EAT algorithm.

— Grant Simmons (@simmonet) October 10, 2019

A core algorithm is not like one if statement. "It's a collection of millions of tiny algorithms that work in unison to spit out a ranking score. Many of those baby algorithms look for signals in pages or content. When you put them together in certain ways, they can be…"

— Marie Haynes (@Marie_Haynes) October 10, 2019
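To make the idea of “millions of tiny algorithms” producing one score a little more concrete, here is a deliberately simplified toy sketch in Python. It assumes that each small signal looks at one aspect of a page and returns a value between 0 and 1, and that the signals are combined as a weighted average. Every signal, weight and threshold below is hypothetical, chosen only to echo a few items from the quality checklist in this article; it is not how Google’s ranking systems work, and Google has not published its signals or weights.

```python
from typing import Callable, Dict, List, Tuple

# Toy model only: the signals, weights and thresholds below are
# hypothetical and do NOT reflect Google's real ranking systems.
Page = Dict[str, object]
Signal = Callable[[Page], float]  # each signal returns a value in [0.0, 1.0]


def descriptive_title(page: Page) -> float:
    """1.0 if the title has at least four words (hypothetical threshold)."""
    return 1.0 if len(str(page.get("title", "")).split()) >= 4 else 0.0


def content_depth(page: Page) -> float:
    """Grows with word count, capped at 1.0 for 1,000+ words (hypothetical)."""
    words = len(str(page.get("body", "")).split())
    return min(words / 1000, 1.0)


def mobile_friendly(page: Page) -> float:
    """1.0 if the page is flagged as mobile-friendly."""
    return 1.0 if page.get("mobile_friendly") else 0.0


def ads_do_not_block_content(page: Page) -> float:
    """1.0 unless ads above the fold block the main content."""
    return 0.0 if page.get("ads_block_content") else 1.0


# Each "tiny algorithm" paired with a hypothetical weight.
SIGNALS: List[Tuple[Signal, float]] = [
    (descriptive_title, 1.0),
    (content_depth, 3.0),
    (mobile_friendly, 2.0),
    (ads_do_not_block_content, 2.0),
]


def ranking_score(page: Page) -> float:
    """Weighted average of all signals, so the result stays in [0.0, 1.0]."""
    total_weight = sum(weight for _, weight in SIGNALS)
    return sum(signal(page) * weight for signal, weight in SIGNALS) / total_weight


def rank(pages: List[Page]) -> List[Page]:
    """Order pages from highest to lowest toy score."""
    return sorted(pages, key=ranking_score, reverse=True)


if __name__ == "__main__":
    detailed = {
        "title": "How to remove red wine stains",
        "body": "word " * 1200,
        "mobile_friendly": True,
        "ads_block_content": False,
    }
    thin = {
        "title": "Wine stains",
        "body": "word " * 200,
        "mobile_friendly": False,
        "ads_block_content": True,
    }
    for page in rank([thin, detailed]):
        print(page["title"], round(ranking_score(page), 3))
```

In this toy run, the detailed wine-stain tutorial scores 1.0 and the thin page scores about 0.075, so the detailed page ranks first. Because the score is a weighted average, no single signal decides the outcome on its own, which loosely mirrors the point that a core algorithm is not “one if statement.”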

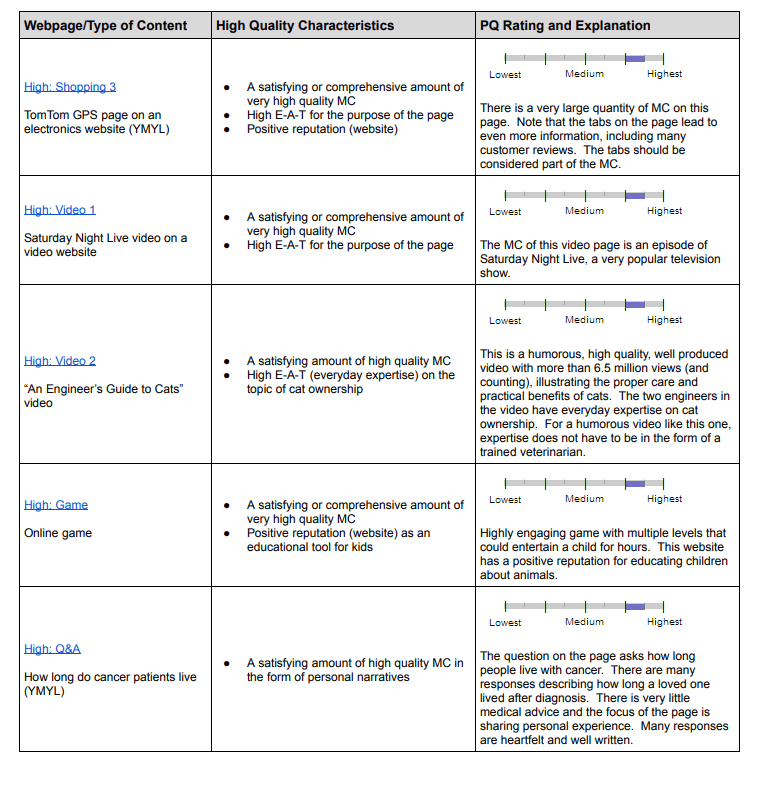

Depending on the industry, high E-A-T means that the content is written by an expert or an organization that is trusted and has authority in that niche. Some topics require less formal expertise; for them, high E-A-T can come from extremely detailed, helpful product reviews and information on that particular topic. Many content writers offer valuable information based on their life experience and can be considered experts on the matter. High page quality means a positive author reputation and a positive brand reputation (recognized through user reviews), a high standard of accuracy and a focus on helping users. Below you can see some examples of pages with a high page quality rating:

Don’t bury the real meat of the content. Make it understandable, add visuals, reference studies and research, add personal opinions based on your own experience, use lists to make the topic easier to follow, look at it from all possible angles and make it the best version of what’s already published. Something worth remembering every time before you start writing a new piece of content:

Don’t write something that’s been written before, without bringing any additional value or new information.

If you do, you’re thinking not of the user’s best interest but of your own, and the result is selfish, irrelevant and useless.

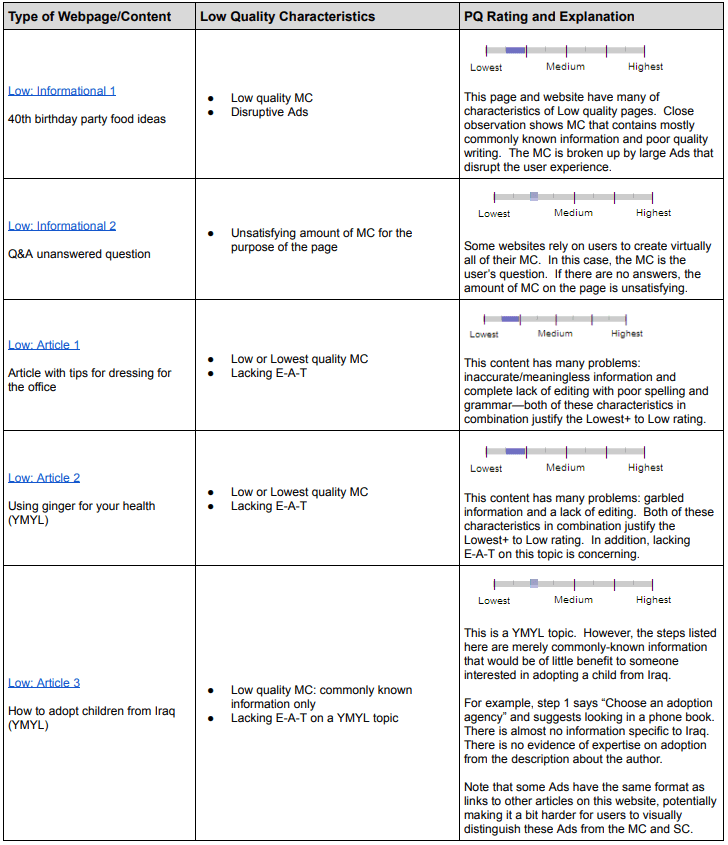

Since we’re talking about things you shouldn’t do, let’s look more closely at what Google disapproves of and which types of pages get pushed out of search. As Google describes it, low-quality content has the following characteristics:

- it is created without adequate time, effort, expertise, or talent/skill;

- the content doesn’t correspond to the title and does not achieve the purpose;

- the title has no call to action, nor does it represent the main content;

- it has irrelevant, exaggerated or shocking titles;

- it has only a small amount of content with no additional value, such as just a few paragraphs on a broad topic;

- interstitials and ads block users from seeing the content they came for;

- it contains disturbing images, such as sexually suggestive images on non-porn related pages;

- pages with mixed content that are not safe for the user;

- malicious or financially fraudulent pages that shatter the trust of users and are harmful;

- the company doesn’t provide contact information, an email address or social media accounts, or share anything else that would make it a trusted company;

- pages that potentially spread hate;

- pages that misinform users;

- content that is inaccessible on hacked websites and spammy pages;

- auto-generated or scraped content and other forms of content generated through black hat techniques.

Below you can see some examples of low-quality content:

If you avoid all of the characteristics above and focus on what matters to create the best content on the web, you’ll be able to make both Google and users happy. And even if your page is outranked by other pages after a Google algorithm update, that doesn’t necessarily mean you were penalized; rather, other pages offer more value. That brings us back to the elements mentioned above, which you should always stick to.

Don’t forget that search engines do not understand content the way humans do. Instead, Google looks for signals and correlates them with what humans perceive as relevant. Surfacing the perfect content is hard, even for Google. That’s why it rolls out broad core updates from time to time: to curate content and keep improving its ranking signals and systems.

Think of it this way: to return results for a query, Google analyzes and organizes the information on the internet to give you the most useful and relevant search results in a fraction of a second. Sometimes, it even suggests the correct form of misspelled or mistyped queries.
