Today we got tons of articles on the Penguin 2.0 update. Sorry, I meant to say tons of disinformation about the Penguin 2.0 update.

Here are some of the Penguin 2.0 myths:

- “Penguin 1 targets homepages, 2 goes ‘much deeper.’”

- “Penguin 2 had a high impact on cleaning up the SERPS”

- “Penguin 2 spots unnatural link velocity trends and penalizes them quickly”

And now I will debunk each one of them.

1. “Penguin 1 targets homepages, 2 goes ‘much deeper.’”

FALSE.

Remember the Firefox penalty, where Google confirmed that only one page of the Firefox site was affected by the unnatural link warning? It was a deep page. Case closed.

2. “Penguin 2 had a high impact on cleaning up the SERPS”

FALSE.

Looking at the SERP “weather” reports from various sources, I see nothing spectacular in the fluctuations. Matt Cutts said Penguin 2.0 would affect 2.3% of English-US search queries. We can only believe that :).

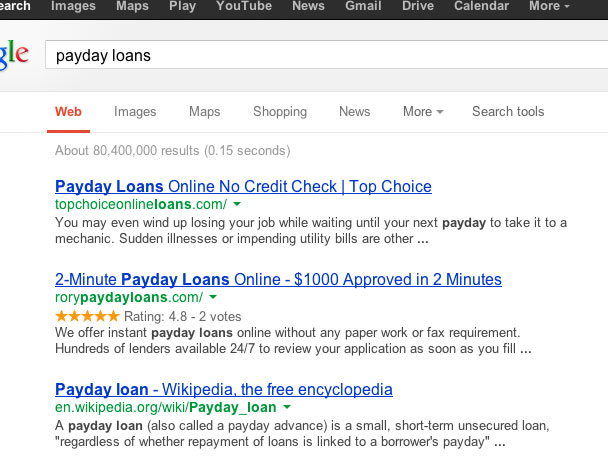

Either way, I always check which black hat techniques still work by looking at some of the most spammed keywords.

Both are full of spam, an indication that Google still has a lot of work to do on automatic unnatural link discovery.

Let’s be frank and open-minded for a moment about all this.

All these Penguin updates demonstrate that links are still the core of the Google algorithm and that there is still a big battle to fight between shady SEOs and Google. If Google wants to model the real world using links, among other signals, it must be able to interpret the link, the page and their context the way a human does. Artificial intelligence is still in its infancy, and Google still has a lot to upgrade before its system can simulate real-world events.

Penguin 2.0 is not the “BIG LINK UPDATE” many expected. It is just one more incremental update in the long list of incremental updates Google is making in order to clean the SERPS of shady SEO strategies.

3. “Penguin 2 spots unnatural link velocity trends and penalizes them quickly”

FALSE.

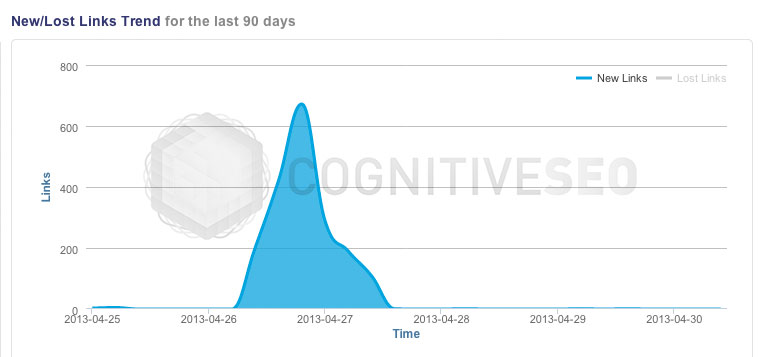

Based on my own research and on what various other people report, Google seems to apply updates in batches. To penalize link velocity, Google needs to be able to differentiate virality from unnaturally high link velocity. It can do it, but it does not do it fast enough. Remember when Google updated its SERPS about once a month (a few years back)? I think updating the rating of a URL/domain works in a similar way. It is not done instantly but in a batch procedure that runs from time to time. When Google runs the batch job and updates the rating of the URL/domain, the SERP can change instantly. This is a theory, not internal Google info that I ran into :), but judging by how things seem to be working, it is a plausible description of how it is done today.
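To make the batch theory concrete, here is a minimal sketch in Python. It is a toy model of my guess, not Google's actual system: the `Site` class, the `flagged` field, the `run_batch` function, the penalty factor and the example domains are all hypothetical names and numbers made up for illustration. The point it shows is that a flagged spam site keeps its ranking until the next batch run, and only then drops.

```python
from dataclasses import dataclass


@dataclass
class Site:
    name: str
    published_score: float  # the score the live ranking currently uses
    flagged: bool = False   # hypothetical: set when links look unnatural


def run_batch(sites, penalty=0.1):
    """Apply all pending penalties in one pass (the assumed batch job)."""
    changed = []
    for site in sites:
        if site.flagged:
            site.published_score *= penalty
            site.flagged = False
            changed.append(site.name)
    return changed


def ranking(sites):
    """Order sites by the published score, best first."""
    ordered = sorted(sites, key=lambda s: s.published_score, reverse=True)
    return [s.name for s in ordered]


# Made-up example data, not real measurements.
sites = [
    Site("spam-loans.example", 9.0),
    Site("honest-lender.example", 6.0),
]

# The spam site gets flagged between batch runs...
sites[0].flagged = True
before = ranking(sites)  # ...but the live ranking has not changed yet

# Only the batch job moves the ranking.
changed = run_batch(sites)
after = ranking(sites)

print(before)
print(changed)
print(after)
```

In this toy model, the gap between flagging and the batch run is exactly the window in which brute force ranking keeps working.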

Here is the number 1 site (SPAM) for “payday loans” on the 24th of May (after the Penguin 2.0 update of the 22nd of May):

Link Velocity Trend

Ranking Trend

Brute force ranking still works and it works big time. Maybe sometime in the future we will get clean SERPS, but not with this update.

Overall, from what I can see so far, nothing fundamental has changed in unnatural link detection. Google is a bit better at detecting unnatural links, but that was to be expected.

General rules of thumb:

- If your links are unnatural, Google aims to penalize you.

- If you clean up your links, Google might forgive you (some people report that with Penguin 2.0 they see old sites with cleaned-up link profiles getting better rankings).

So what do you think about Penguin 2.0?


Actually for #1, Matt Cutts mentioned in a video (this one: http://www.youtube.com/watch?v=nNbWw2OUUAc, see around the 1:30 mark) that Ver 1 did only look at the homepage, while Ver 2 “looks deeper”. It could be misinformation, of course, but something to note anyway.

I know. But how do you explain the incident a month ago (confirmed by Google) about the deep page penalty?

I think Google has fun trying to send out the most confusing message possible, all to keep people guessing.

I’m not sure which penalty you are referring to, but is it possible that *that* penalty was manual action, vs algorithmic…?

You know Google applied algorithmic link-based penalties long before Penguin 1 even arrived?

A lot of penalties are also applied manually, but are generally triggered algorithmically.

Penguin 2 has a new engine and is just in first gear, just slightly more effective than Penguin 1 so far.

But just wait until they press the throttle and switch it up some gears !!!

A separate new algo will deal better with the Virality vs Unnatural High Speed Link Velocity issue, specifically for spammy niches, such as ‘payday loans’.

Obviously. As I said, these are more like incremental updates.

Manual is a different story.

It will do another update. The point is that there was a lot of fuss about Penguin 2.0 being some kind of spam killer, and from my data I see it only as an incremental update that applied some more penalties and lifted some good sites.

Payday loans has already made history among spammed keywords. From my POV it is a PR issue for Google, and if Google could have dealt with it by now, I am sure it would have. The problem, I believe, is that the technology is not advanced enough to deal with it. Maybe in the future (tomorrow, or in a year or 2 …)

Unnatural links warning is different than Penguin. One is manual, the other is algorithmic. See seomoz.org/blog/the-difference-between-penguin-and-an-unnatural-links-penalty-and-some-info-on-panda-too

Yes, they are, but they are both still about links.

Penguin, though, is automatic, and I was referring to the automatic classification of links.

An important distinction has to be made between looking at links to a page and analyzing a page’s backlink profile. While 1.0 looked at the backlink profile of just the homepage, 2.0 goes “deeper” by analyzing the backlink profile of a set of pages.

Read more here: http://www.seroundtable.com/google-penguin-deep-16837.html

Razvan, watch this: https://www.youtube.com/watch?feature=player_detailpage&v=nNbWw2OUUAc#t=85s

I know it. That is exactly what the link above goes into. Here is a bit from it:

“Update: I see now where the confusion comes from, via TWIG, right over here where Matt said Penguin looks at the home page of the site. Matt must mean Penguin only analyzed the links to the home page. But anyone who had a site impacted by Penguin noticed not just their home page ranking suffer. So I think that is the distinction.”

The general consensus since the update rolled out is that it won’t have nearly the effect people were preparing for. I think the first couple of rounds of updates last year shook everyone up a bit, so now people expect every update to knock them off the radar. What seems more accurate is that this is just a minor tweak to the major update that was already done. They should have called this one Penguin 1.1, but then nobody would have panicked, and I’m sure Google is trying to keep people on their toes.

With all the spammy, low-quality link wheels on Fiverr and elsewhere, there is an endless number of sites out there with pure garbage that are getting all kinds of Google love, like the payday loan site you mentioned above. Sites like that will eventually lose their 2 seconds of fame and come crashing down. It’s only a matter of time.

A general rule of thumb whenever a new algorithm update comes out is to keep your eyes on your analytics. There are plenty of factors that can lead to a decrease in traffic, many not related to the update at all. Monitor your sites, look for fluctuations in the data, and then investigate whether the change in traffic was caused by the algorithm or by other factors.

Agree, Nick. Analytics and rank positions are always important.

This update is 90% PR, 10% filters.

So much fluff from Google.

This is fixed with Penguin 3.0. You won’t see the same sites on the first two pages.