The SEO landscape has changed enormously in recent years. Organic traffic comes and goes, website performance seems to be as volatile as it gets and, at the end of the day, you might ask yourself: why did my organic traffic drop? Did Google change its algorithm again? Was it some sort of SEO attack on my site or was it something that I did? And while you keep searching for the reasons your hard-earned ranks and traffic went down the drain, your frustration grows as the solution to your problem seems increasingly far away.

We have been in the SEO industry for a while now, wearing many hats: digital marketers, tool developers, advisers, researchers, copywriters, etc. But first of all, we are, just like you, site owners. We’ve been through ups and downs regarding organic traffic, and we, too, have been in the situation of trying to understand why our Google traffic dropped dramatically at some point. With all this in mind, we’ve tried to ease your work by putting together a list of the top 16 reasons that can cause a sudden traffic drop, unrelated to Google algorithm changes or penalties.

Without further introduction, below you can find the top 16 causes that can lead to a massive organic traffic drop:

- Changing Your Domain Name and Not Telling Google

- Indexing Everything Without Pruning Your Content

- Getting Your Pages De-indexed by Google Through robots.txt

- Redesigning Your Website & Not Doing 301 Redirects to Old Pages

- Moving From a Subdirectory to a Subdomain

- Giving Up Creating Great Content or Creating Content at All

- Counting Your Links and Not Your Referring Domains

- Ignoring Mobile First Indexing

- Moving Your Site to a Slow Host

- Ignoring Your Broken Pages and Links

- Hiring an SEO Company That Will Guarantee You Instant Traffic

- Dropping Lots of Shady Links on Other Sites

- Not Using Alt and Title Tags

- Duplicating Your Content – Internally & Externally

- Ignoring Canonical Tags

- Using the Same Commercial Anchor Text

1. Changing Your Domain Name and Not Telling Google

You might remember “Face/Off”, a movie from ‘97 where Nicolas Cage is a terrorist and John Travolta is an FBI agent. And, as happens in movies, they become obsessed with one another. Travolta needs information from Cage’s brother, so he undergoes an operation in which he gets Cage’s face; a few plot twists later, Cage has Travolta’s. They continue to pursue each other with new identities, the bad guy as the good guy, the good guy as the bad guy and so on, and (almost) no one knows who is who. Dizzy yet?

Well, the situation is similar if you decide to change your domain name and not tell anyone about it, especially the search engines. Just with less drama and action scenes.

Whatever reasons you might have for changing your domain name, if you want to be sure that your organic traffic drops, do not tell anyone about the change. Especially Google.

Not even a word. This way, you’ll make sure that your new name, as well as your search engine traffic, will be like they don’t even exist in the digital world.

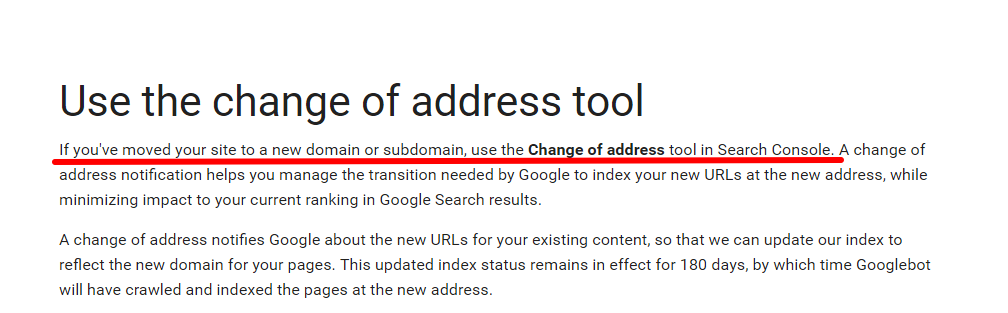

Yet, if you do want to keep your organic search traffic and ranks, once you make a domain name change, let Google know by using the Change of Address tool in your Search Console.

2. Indexing Everything Without Pruning Your Content

One good way of causing a drop in your organic search traffic, just as the title of the chapter says, is to index each and every single page from your website and not prune your content.

Google makes constant efforts to improve its search algorithm and detect quality content, and that is why it rolled out the Google Panda 4.0 update. What is this update really doing? It demotes low quality content and boosts high quality content, according to Google’s idea of valuable content. In the screenshot below you can see what kind of content you should be producing if you are really determined to make a mess of your organic traffic.

Every site has its evergreen content, which attracts organic traffic, and some pages that are deadwood.

If you don’t want your search traffic dropped, in the Google Index you should only have pages that you’re interested in ranking.

Otherwise you might end up polluting your website’s traffic. The pages filled with obsolete or low quality content aren’t useful or interesting to the visitor. Thus, they should be pruned for the sake of your website’s health. Low quality pages may affect the performance of the whole site. Even if the website itself plays by the rules, low-quality indexed content may ruin the organic traffic of the whole batch.

If you don’t want your Google traffic to drop dramatically due to indexing and content pruning issues, below we list the steps you need to take in order to successfully prune your own content. We’ve developed this subject in a previous blog post. Before doing so, we want to stress that removing indexed pages from the Google Index is not an easy decision and, if handled improperly, it may go very wrong. Still, at the end of the day, you should keep the Google Index fresh with info that is worth ranking and that helps your users.

Step 1. Identify Your Site’s Google Indexed Pages

There are various methods to identify your indexed pages. You can find several methods here, and choose the one which is most suitable for your website.

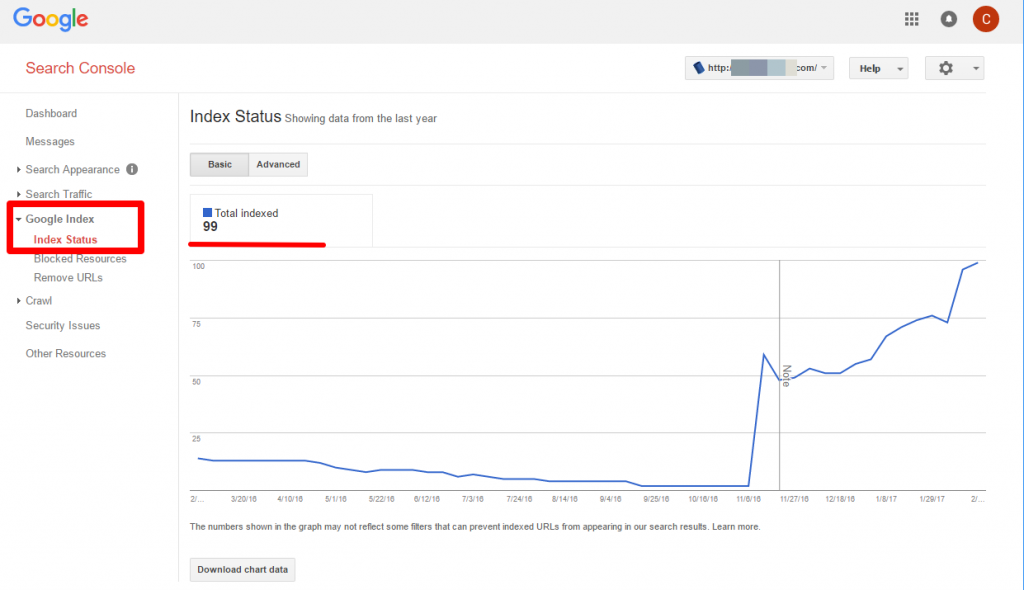

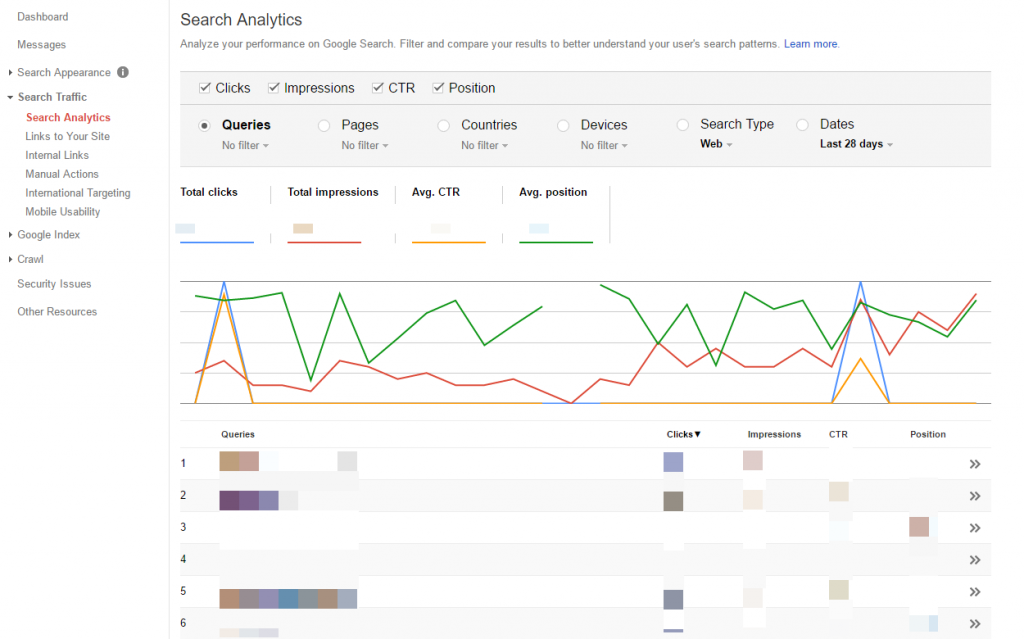

One way to do this is through your Search Console account, just like in the screenshot below. There you’ll find the total number of your indexed pages along with a graphic display of its evolution in the Index Status.

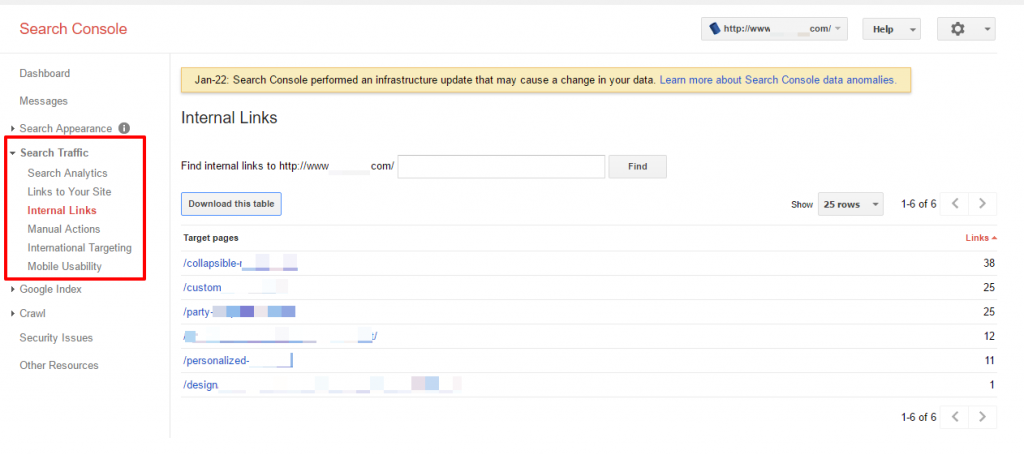

In order to get the whole bundle of internal links for your website, in the same Search Console, you need to go to the Search Traffic > Internal Links category. This way you’ll have a list with all the pages from your website (indexed or not) and also the number of links pointing to each. This can be a great incentive for discovering which pages should be subject to content pruning.

Step 2. Identify Your Site’s Low Traffic and Underperforming Pages

Of course all your pages matter but some matter more than others. That is why, in the pruning process it’s highly important to identify data regarding the number of impressions, clicks, click-through-rate and average position.
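As a rough illustration of this step, here is a minimal Python sketch that flags pages with few clicks and a low click-through rate. The `PageStats` class, the sample figures and the thresholds (`min_clicks`, `min_ctr`) are all assumptions made for the example; pick values that make sense for your own site and data export.

```python
from dataclasses import dataclass


@dataclass
class PageStats:
    url: str
    impressions: int
    clicks: int
    avg_position: float

    @property
    def ctr(self) -> float:
        # Click-through rate = clicks / impressions (0 when the page was never shown).
        return self.clicks / self.impressions if self.impressions else 0.0


def underperforming(pages, min_clicks=10, min_ctr=0.01):
    """Return pages whose clicks AND CTR both fall below the assumed thresholds."""
    return [p for p in pages if p.clicks < min_clicks and p.ctr < min_ctr]


# Hypothetical sample data, standing in for a Search Console export.
pages = [
    PageStats("/evergreen-guide", 12000, 900, 3.2),
    PageStats("/old-press-release", 400, 2, 48.0),
    PageStats("/tag/misc", 0, 0, 0.0),
]

for page in underperforming(pages):
    print(page.url, f"{page.ctr:.2%}")
```

The flagged pages are only candidates; whether to prune them remains an editorial decision.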

Step 3. No-Index the Underperforming Pages

Once you know the pages that pull you down, as painful as it might be sometimes, you need to start to no-index those pages. As hard as it was to create them and get them noticed by Google, getting them off the search engine’s radar is a bit less complicated. Below you can find two ways to do it:

- Disallow those pages in the robots.txt file to tell Google not to crawl that part of your website. Keep in mind that a disallow rule blocks crawling rather than indexing, so do this carefully, as you do not want to hurt your search rankings.

- Apply the meta no-index tag to the underperforming pages. Add the <META NAME="ROBOTS" CONTENT="NOINDEX, FOLLOW"> tag. That will tell Google not to index the page but at the same time to follow the links on it and crawl the pages it links to. Google has to be able to crawl the page to see this tag, so don’t also disallow the same page in robots.txt.
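To double-check that a page really carries the tag, something like the following Python sketch could be used. It relies only on the standard library; the `robots_directives` helper and the sample HTML are hypothetical, and fetching the page is left out.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects the directives found in <meta name="robots"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").lower() == "robots":
            for directive in attr.get("content", "").split(","):
                directive = directive.strip().lower()
                if directive:
                    self.directives.add(directive)


def robots_directives(html):
    parser = RobotsMetaParser()
    parser.feed(html)
    return parser.directives


# Hypothetical page source for illustration.
sample = '<html><head><META NAME="ROBOTS" CONTENT="NOINDEX, FOLLOW"></head></html>'
print("noindex" in robots_directives(sample))
```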

3. Getting Your Pages De-indexed by Google Through robots.txt

A great way to break your web traffic without even noticing is through robots.txt. Indeed, the robots.txt file has long been debated among webmasters and it can be a strong tool when it is well written. Yet, the same robots.txt can really be a tool you can shoot yourself in the foot with. We’ve written an in-depth article on the critical mistakes that can ruin your traffic in another post.

Before developing this subject further, indulge me to remind you that according to Google a robots.txt file is a file at the root of your site that indicates those parts of your site you don’t want accessed by search engine crawlers. And although there is plenty of documentation on this subject, there are still many ways you can suffer an organic search traffic drop. Below we are going to list two common yet critical mistakes when it comes to robots.txt.

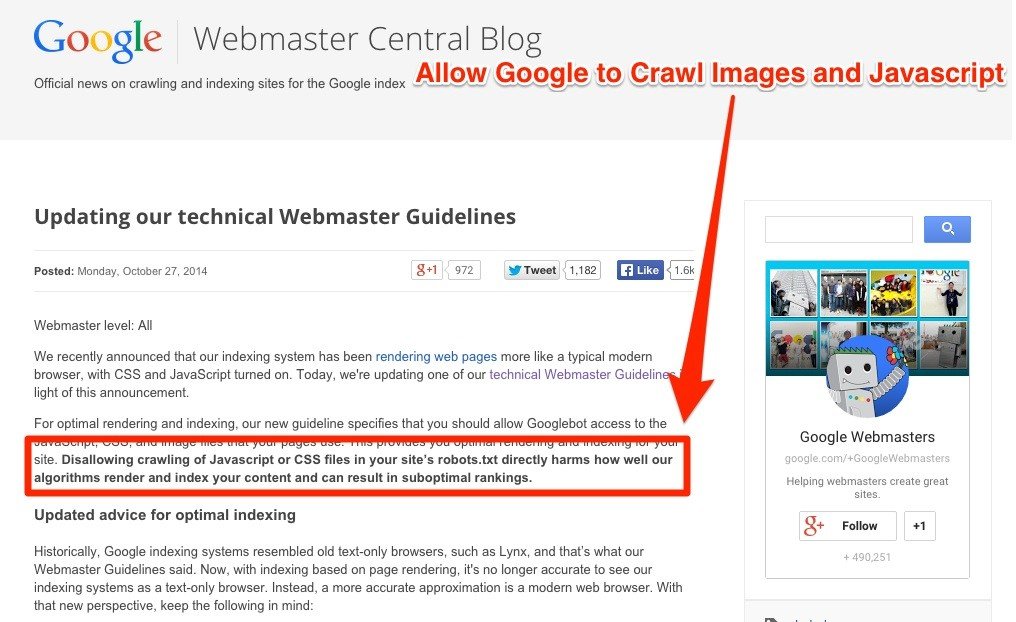

1. Blocking CSS or Image Files from Google Crawling

Google’s algorithm keeps getting better and is now able to read your website’s CSS and JS code and draw conclusions about how useful the content is for the user. As Google itself stated, disallowing CSS, JavaScript and even image files can harm how your pages are rendered and indexed, and with that your website’s overall search traffic.
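A quick way to spot this mistake is to test your asset URLs against your robots.txt rules. The sketch below uses Python’s standard `urllib.robotparser`; the rules and URLs are made up for the example, and note that this standard-library parser only does simple prefix matching, so it will not evaluate wildcard rules the way Googlebot does.

```python
from urllib.robotparser import RobotFileParser


def blocked_assets(robots_txt, asset_urls, user_agent="Googlebot"):
    """Return the asset URLs that the given robots.txt text disallows."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in asset_urls if not parser.can_fetch(user_agent, url)]


# Hypothetical robots.txt content and asset URLs.
robots_txt = """\
User-agent: *
Disallow: /assets/css/
Disallow: /images/
"""

assets = [
    "https://example.com/assets/css/main.css",
    "https://example.com/assets/js/app.js",
    "https://example.com/images/hero.png",
]

for url in blocked_assets(robots_txt, assets):
    print("blocked:", url)
```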

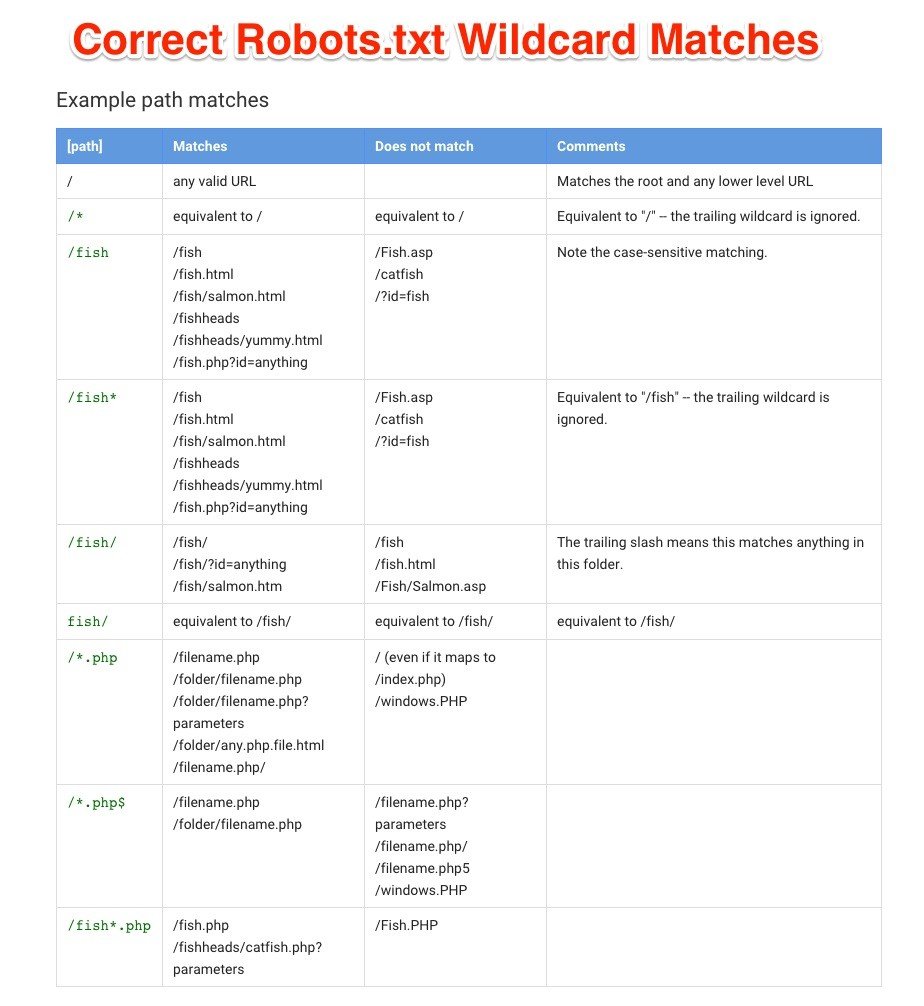

2. Wrong Use of Wildcards

A wildcard is a symbol used to replace or represent one or more characters. In general, wildcards are either an asterisk (*), which stands for any sequence of characters, or a question mark (?), which stands for a single character. In robots.txt, the two pattern symbols Google documents are the asterisk (*) and the dollar sign ($), which marks the end of a URL.

By now, you must be asking yourself how an * can cause a sudden drop in organic traffic.

Well, when it comes to robots.txt, it can.

Let’s say you wish to block all URLs that have the .pdf extension. You would write two lines in your robots.txt: “User-agent: Googlebot” followed by “Disallow: /*.pdf$”. The “$” sign at the end tells bots that only URLs ending in .pdf shouldn’t be crawled, while any other URL containing “.pdf” (for instance, one followed by a query string) may be. I know it might sound complicated, yet the moral of this story is that a simple misused symbol can break your marketing strategy along with your organic traffic. Below you can find a list of the correct robots.txt wildcard matches and, as long as you keep it in mind, you should be on the safe side of your website’s traffic.
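To see the effect of the “$” sign, here is a small Python sketch that approximates this matching by translating a pattern into a regular expression. It is an illustration under simplified assumptions (only `*` and a trailing `$` are handled), not a reimplementation of Google’s parser, and the function names are hypothetical.

```python
import re


def robots_pattern_to_regex(pattern):
    """Translate a robots.txt path pattern into a compiled regex.

    '*' matches any sequence of characters; a trailing '$' anchors the end of the URL.
    """
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    body = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.compile("^" + body + ("$" if anchored else ""))


def is_blocked(pattern, path):
    return robots_pattern_to_regex(pattern).search(path) is not None


# With '$', only URLs that END in .pdf are blocked.
print(is_blocked("/*.pdf$", "/files/report.pdf"))
print(is_blocked("/*.pdf$", "/files/report.pdf?download=1"))
# Without '$', anything containing .pdf after the first slash is blocked.
print(is_blocked("/*.pdf", "/files/report.pdf?download=1"))
```

The three calls print `True`, `False` and `True` respectively, which is exactly the difference one misplaced symbol makes.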

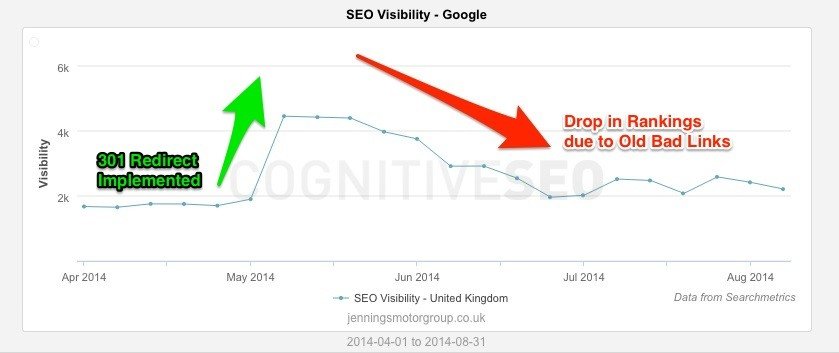

4. Redesigning Your Website & Not Doing 301 Redirects to Old Pages

When migrating your website from one domain to another, if you want to make sure you instantly lose any traffic to your website that you are currently enjoying and all your new visitors will be greeted with a 404 page, do not implement proper redirects.

Any good organic search traffic you experience at the moment can be just a memory if you fail to do 301 redirects.

Let’s say you just did a rebranding of your business and, among other things, you’ve changed your site’s address. Will your Google traffic drop dramatically? Only if you choose to let it.

With only a few lines of code you can transfer all the organic search traffic from one domain to another.

What the 301 permanent redirects do is to redirect any visitor, including search engines, to your new site. Along with the direct traffic, the age, authority and reputation of your old website in Google is transferred to the new web address.
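To give an idea of how little code is involved, here is a minimal Python sketch of a WSGI application that answers every request with a 301 pointing to the same path on the new domain. The host name `www.new-example.com` is a placeholder, and in practice redirects are usually configured in the web server or CMS rather than in application code.

```python
from urllib.parse import urlsplit, urlunsplit

NEW_HOST = "www.new-example.com"  # hypothetical new domain


def redirect_location(old_url, new_host=NEW_HOST):
    """Map an old URL to the same path and query string on the new host."""
    parts = urlsplit(old_url)
    return urlunsplit(("https", new_host, parts.path or "/", parts.query, ""))


def app(environ, start_response):
    """Minimal WSGI app: reply to every request with a permanent redirect."""
    path = environ.get("PATH_INFO", "/")
    query = environ.get("QUERY_STRING", "")
    old_url = "http://old.invalid" + path + ("?" + query if query else "")
    start_response("301 Moved Permanently", [("Location", redirect_location(old_url))])
    return [b""]


print(redirect_location("http://old-example.com/blog/post?id=7"))
```

Keeping the path and query string intact is the important design choice here: each old page should land on its new equivalent rather than on the homepage.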

How long should you keep your 301s? Like most of the love songs lyrics say, forever and ever.

Will it help you get rid of a Google Penalty? Well, this is debatable but we have a previous interesting blog post written on this matter.

You can find more info on how to implement redirects correctly, directly from the Google representatives themselves.

5. Moving from a Subdirectory to a Subdomain

In case you think that you can move a part of your website from a subdirectory to a subdomain without having your search traffic dropped, think again.

Let’s say that you want to move your blog from a subdirectory URL (yourwebsiterulz.com/blog) to a subdomain (blog.yourwebsiterulz.com). Although Matt Cutts, former Google engineer, said that “they are roughly equivalent and you should basically go with whichever one is easier for you in terms of configuration, your CMSs, all that sort of stuff”, it seems that things are a bit more complicated than that.

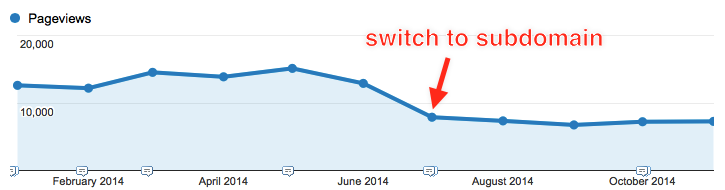

There are some interesting case studies on this very issue that show exactly the opposite. Below you can see a screenshot from the iwantmyname.com case of switching between subdirectory and subdomain. After a period of dropping traffic, they decided to revert the changes.

Screenshot taken from iwantmyname.com

Yet, what is the problem, actually?

Is not Google clever enough to know that the newly created subdomain is part of the same domain?

Well, Google has made strides in this direction and got much better at identifying and associating content that’s on a subdomain with the main domain. The problem is they’re not good enough to rely on that factor yet and major organic traffic drop can come from this direction.

With the hope that this SEO technicality will change in time, the recommendation for the moment is to keep your content on one single sub and root domain, preferably in subfolders.

6. Giving Up Creating Great Content or Creating Content at All

The best movies don’t always win the best prizes. A similar thing happens in the digital marketing world.

The best content doesn’t always have the best organic search traffic, yet search engines are really trying to rank sites in accordance to their content.

Just by looking at the latest Google updates we can tell that the search engine is constantly refining and improving its algorithm that automatically scores pages based on content quality. There are even quality rating guidelines and content-based Google penalties.

Other metrics and factors count as well but content is among those top ranking factors; and you should not let your content get dusty unless you are decided to leave the digital world for good.

Going back to the analogy with the movies, having a great script is not enough; you need great acting, awesome music, good directing, etc. in order to win the prize for the best picture.

You should neglect your content only if you are planning to have a sudden drop in organic traffic. And if you already have good web traffic, do not count on it too much. The SERP is volatile, tons of content is being written as you are reading this and you need to keep up.

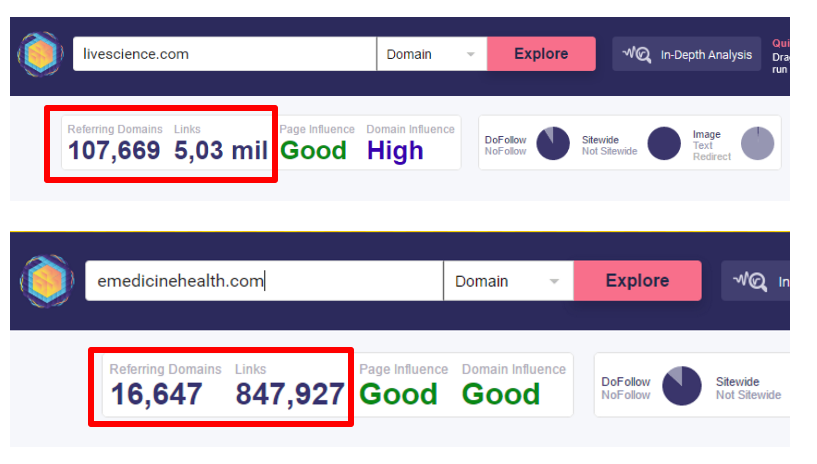

Let’s take a look at the screenshot below. We’ve analyzed two websites, livescience.com and emedicinehealth.com.

And although livescience.com has way more links than its competitor, it seems that emedicinehealth.com is performing better for a lot of important keywords. Why, you might wonder. Well, the answer is simple: content. And although they are both offering good content, livescience.com is a site offering general information about a lot of things while emedicinehealth.com offers articles highly related to the topics, leaving the impression that this site really offers reliable content. We are not saying that the 5 mil links livescience.com has do not matter, yet it seems that in some cases content weighs more.

7. Counting Your Links and Not Your Referring Domains

Do you know the saying: don’t count the days but make the days count? Well, this somehow applies in the SEO world as well.

Or, at least, in the link building industry. Just looking at the number of links and constantly worrying about multiplying them won’t take you to the first page of Google. On the contrary, it will probably make you disappear from the SERP.

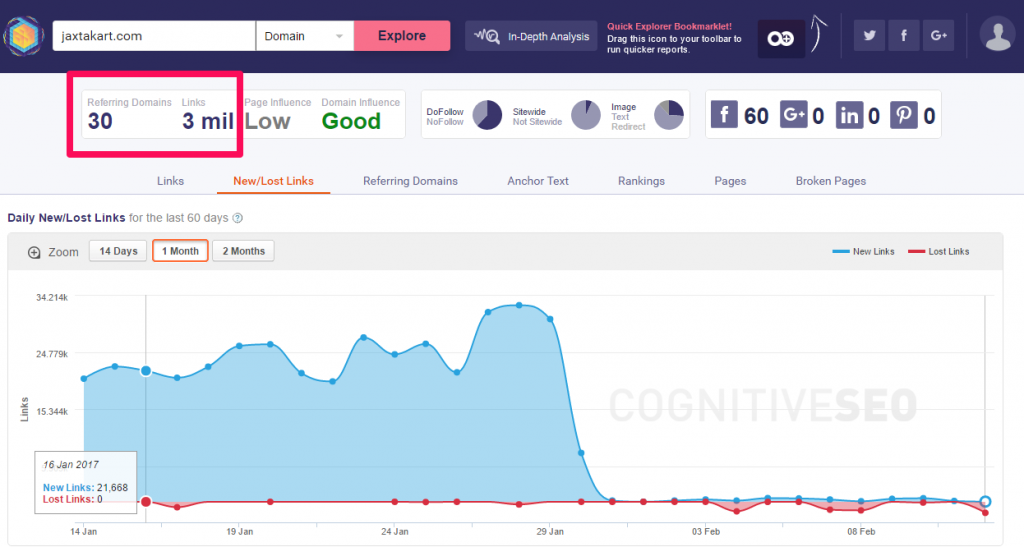

I invite you to take a look at the screenshot below.

If we are looking at the number of links and that number only, things look great: 3 mil links. That’s quite something; great performance. Yet, when looking at the number of referring domains, all your appreciation disappears, right? Only 30 referring domains generated those 3 million links.

What would you prefer: 30 journalists writing about you in 3 mil articles or 100 journalists writing about you in 1,000 articles?

In which situation do you think you’ll gain more credibility and authority? (Really hoping you’ll choose the second case).
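If you have an export of your backlinks, counting referring domains instead of raw links takes only a few lines. The Python sketch below is a simplified illustration: the URLs are invented, and it treats each host name (minus a leading “www.”) as one domain, which is cruder than what dedicated SEO tools do.

```python
from urllib.parse import urlsplit


def referring_domains(backlink_urls):
    """Return the set of distinct host names that link to you."""
    domains = set()
    for url in backlink_urls:
        host = urlsplit(url).hostname
        if host:
            # Treat www.example.net and example.net as the same domain.
            domains.add(host[4:] if host.startswith("www.") else host)
    return domains


# Hypothetical backlink export.
backlinks = [
    "https://news.example.org/a",
    "https://news.example.org/b",
    "https://www.blog.example.net/post",
    "https://blog.example.net/other",
]

domains = referring_domains(backlinks)
print(len(backlinks), "links from", len(domains), "referring domains")
```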

8. Ignoring Mobile First Indexing

It might not be news to you that Google looks primarily at mobile content, rather than desktop when deciding how to rank results.

A “my business is concentrated on desktop, I don’t care about mobile” attitude might lead you straight to the end of page 99 in Google.

It’s called mobile first indexing and, as Google stated, it means that algorithms will eventually primarily use the mobile version of a site’s content to score pages from that site, to understand structured data, and to show snippets from those pages in our results.

Ignore mobile first indexing if you want Google to ignore your website.

9. Moving Your Site to a Slow Host

Are you familiar with the saying: it doesn’t matter how slow you move as long as you don’t stop? Great one, right? Well, forget about it when it comes to your website performance.

Things look quite different when it comes to hosting.

A good hosting isn’t going to guarantee you a spot at the top. But a slow host will surely lead to a drop in organic search traffic.

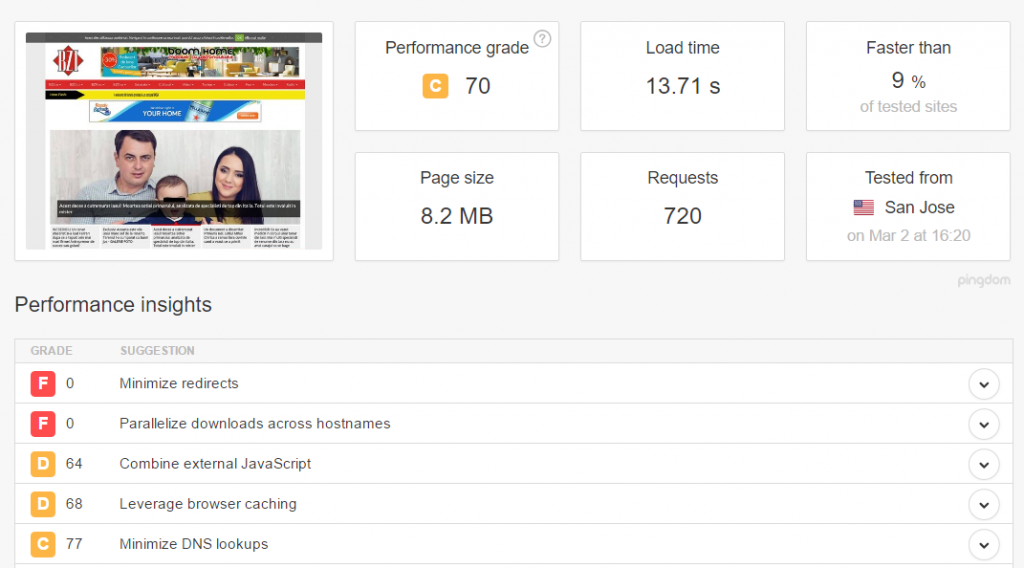

Google has repeatedly said that page speed affects a website’s performance. They even created a tool for this. There are several website speed tools out there that come in handy when trying to figure out the performance of your website.

Screenshot taken from tools.pingdom.com

Cheap hosting might sound tempting and we cannot blame you for this. Yet, in terms of costs-benefits, it might “cost” you the traffic of your website, the revenue of your business.

Shared servers are a common solution and they do work well for most of the sites.

Sometimes sharing is scaring.

If you are on a shared server, as convenient as it might be for you, one resource-hungry neighbor can kill your site’s speed and therefore your organic search traffic. Better solutions when it comes to servers can be VPS (virtual private servers) or dedicated servers. They are indeed more expensive but they pay off.

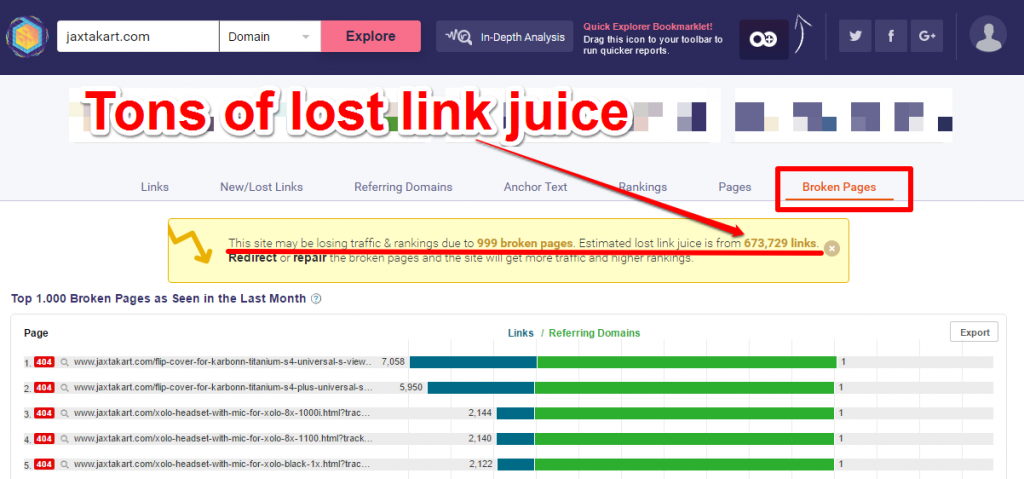

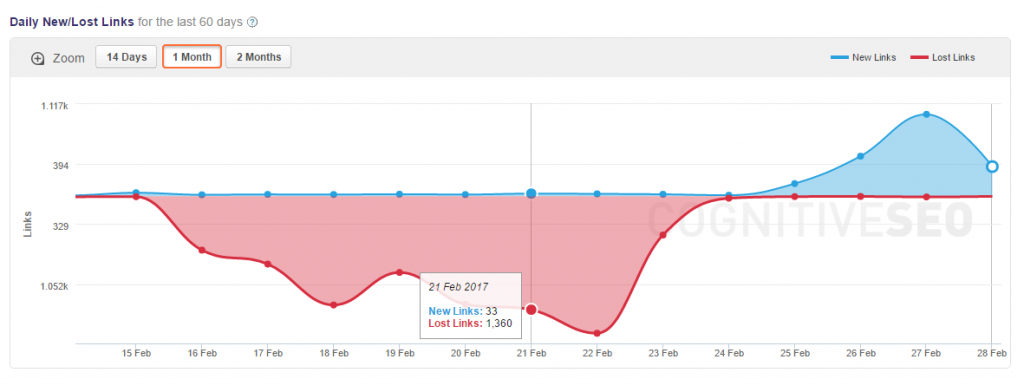

10. Ignoring Your Broken Pages and Links

Ignorance is bliss… sometimes. But never when it comes to digital marketing. Unless you want to experience a really nasty, dramatic traffic drop.

You might lose a link every now and then, or end up with a broken page due to some crawling issue or something similar. Yet, when your broken pages and links add up to a figure as big as your phone number, you might be facing a problem, in terms of user experience and organic traffic alike. Your bounce rate will increase, your overall usability will suffer, you will be losing links and therefore you will start asking yourself “why did my organic traffic drop?”

Take a look at the image below. The number of broken pages is enormous, not to mention the link juice lost. Does traffic suffer due to this issue? You bet it does. Is there a fix for this? The answer is again yes.

The irony is that along with all the issues broken pages bring, there are some unforeseen opportunities as well. If you are interested in getting back your lost traffic and the link juice from your broken pages, there are two easy fixes:

- 301 redirects – this way, the link juice will pass to the redirected page.

- “Repair” those pages – you can create a landing page with dedicated content.

A similar thing happens when it comes to lost links. As mentioned before, having a couple of lost links is not such a big problem. Yet, when you are losing hundreds of links on a daily basis, that should be an alarm signal for you.

As we are your organic search traffic number one fan, below we’ve listed two steps that can help you out in the event you are experiencing a massive lost link drop:

- Find those lost links. There are SEO tools that can help you here. We recommend you to use the Site Explorer as it gives you instant results and it’s easy to use.

- Contact the webmaster who has the broken link. Indeed, a bit of outreach and networking is involved here, but your traffic and keyword rankings are worth all the effort.

11. Hiring an SEO Company That Will Guarantee You Instant Traffic

Having lots of traffic is an entirely desirable outcome and there’s nothing wrong with keeping that goal in mind whatever strategy you choose to implement.

Keep your head in the clouds but your feet on the ground.

But what happens when your desire goes beyond any realistic expectation? You hire an SEO company that will guarantee you the first position on the most competitive keywords ever.

Indeed, the first thing you should ask any digital marketing company is:

Will my site rank #1? But if the answer is yes, start looking for somebody else, don’t hire them!

This, of course, applies if you do care about your organic traffic and your overall inbound marketing strategy and you don’t want to make a mess out of them. The truth is that Google has so many rules and algorithms for scoring a website, and the SERP is so volatile, that it’s nearly impossible to predict which site will have the best organic traffic and for what exact reasons.

If you want to drop from your hard-earned #14 position to #79, you can start looking for companies that advertise themselves like the one in the image below. We don’t even know what to believe when it comes to companies that promise guaranteed instant traffic: that they are fools (in which case you don’t need them) or charlatans (in which case you don’t want them). If you want to find out more about SEO agencies and how they can ruin your website’s traffic, we wrote a previous blog post on this.

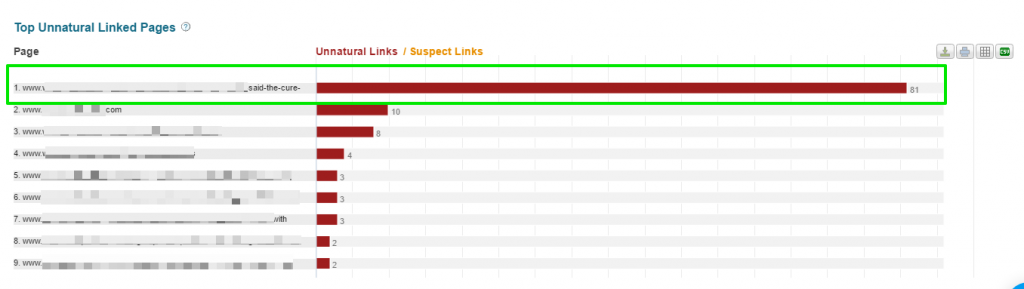

12. Dropping Lots of Shady Links on Other Sites

You might know by now that unnatural links can get you into trouble and can cause sudden drop in organic traffic. Google has developed a whole series of algorithm changes and penalties named after cute animals that make unnatural links the persona non grata of the internet.

Yet, if you are really determined in screwing up your Google organic traffic, having a lot of unnatural links might not be enough.

Having lots of unnatural links connected to one page only, well that is true dedicated work for losing massive traffic.

Just take a look at the screenshot below. Indeed, there is an unnatural link issue there; but more than that, the majority of unnatural links lead to just one page.

If you are in a situation similar to the one presented in the screenshot below, we recommend that you fix it because, if it hasn’t affected you until now, it surely will in the long term. The easiest way to identify your unnatural links and get rid of them is by using specialized tools.

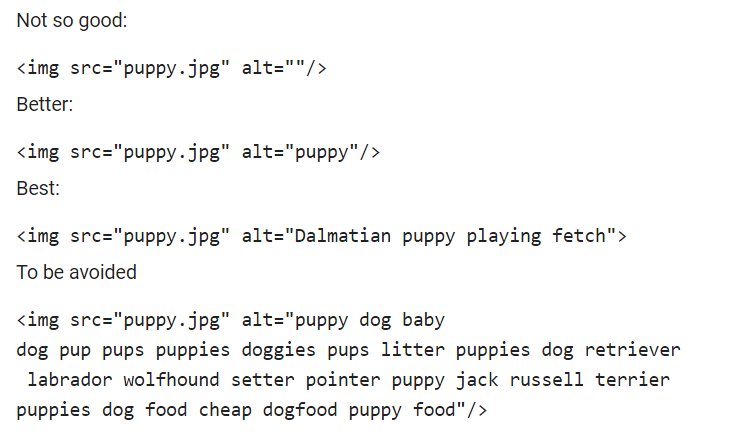

13. Not Using Alt and Title Tags

The best way to predict the future is to invent it. This seems to be Google’s motto. Because yes, Google can “read” your image and this can be a ranking factor. We documented this in a previous article. With this already happening, the use of alt and title tags is commonplace. Therefore, no alt tags and title tags = no traffic.

Google places a relatively high value on alt texts to determine what is on the image but also to determine the topic of surrounding text.

First things first, you should use alt text not just for SEO purposes but also because blind and visually impaired people won’t otherwise know what the image is about. Second, yes, the title and alt tags influence your traffic, as mentioned by the search engines themselves.

If you want to keep your organic traffic safe and sound, include the subject in the title tag and in the image alt text.

We’re not saying you should spam with your focus keywords into every alt tag or title. But you need a good, related image for your posts in which it makes sense to have the keywords you are interested in there.

14. Duplicating Your Content – Internally & Externally

Simply put, duplicate content is content that appears on the world wide web in more than one place. Is this such a big problem for the readers? Maybe not. But it is a very big problem for you if you have duplicate content (internally or externally), as the search engines don’t know which version of the content is the original one and which one they should be ranking.

Can duplicate content get your website into trouble? The answer is yes.

Although it is stated that there is no such thing as duplicate content penalty, the fact you have no unique content will prevent you from getting organic search traffic from Google. The truth is that search engines reward uniqueness and added value and filter duplicate content; be it externally or internally.

Having duplicate content will not necessarily get your website penalized, but it will not help with your traffic at all.

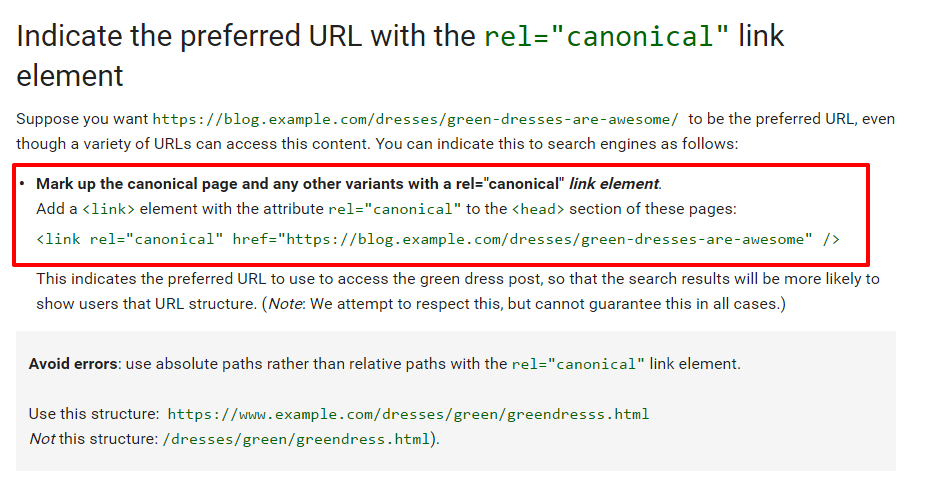

15. Ignoring Canonical Tags

The time might have come when you want to secure your website and switch from HTTP to HTTPS. Easier said than done. A lot of issues might appear along the way that can screw up your hard-worked organic traffic. However, there is one thing that can really mess up things for your website while moving your site to https; and that is the canonical tag.

The truth is that a major problem for search engines is to determine the original source of content that is available on multiple URLs. Therefore, if you are having the same content on http as well as https you will “confuse” the search engine which will punish you and you will suffer a traffic loss. This is why it’s highly important to use rel=canonical tags. What exactly is this?

A canonical link is an HTML element that helps webmasters prevent duplicate content issues by specifying the “canonical” or “preferred” version of a web page.

Just to be sure you are doing things in a search engine friendly way, on the Google support page you can find more info on this matter.

If you indulge me an analogy, if you’re moving from one place to another, you would like the postman to send all the love letters to your new address and you wouldn’t want them lost in an old mailbox that no one uses, right? (I am guessing you would want the bills to be sent to the old address instead). A similar thing happens when it comes to moving your site’s address.

If you don’t want your organic traffic dropped for good, you need to use the rel=canonical tags.
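After an HTTPS migration, it is worth verifying that each page declares the HTTPS address as its canonical version. The Python sketch below shows one way to read the tag from a page’s HTML using the standard library; the `canonical_url` helper and the sample markup are assumptions for illustration, and downloading the page is left out.

```python
from html.parser import HTMLParser


class CanonicalParser(HTMLParser):
    """Remembers the href of the first <link rel="canonical"> tag."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        if tag != "link" or self.canonical is not None:
            return
        attr = dict(attrs)
        rel_values = (attr.get("rel") or "").lower().split()
        if "canonical" in rel_values:
            self.canonical = attr.get("href")


def canonical_url(html):
    parser = CanonicalParser()
    parser.feed(html)
    return parser.canonical


# Hypothetical page source.
page = '<html><head><link rel="canonical" href="https://example.com/dog-food"></head></html>'
url = canonical_url(page)
print(url, "(HTTPS)" if url and url.startswith("https://") else "(check this page)")
```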

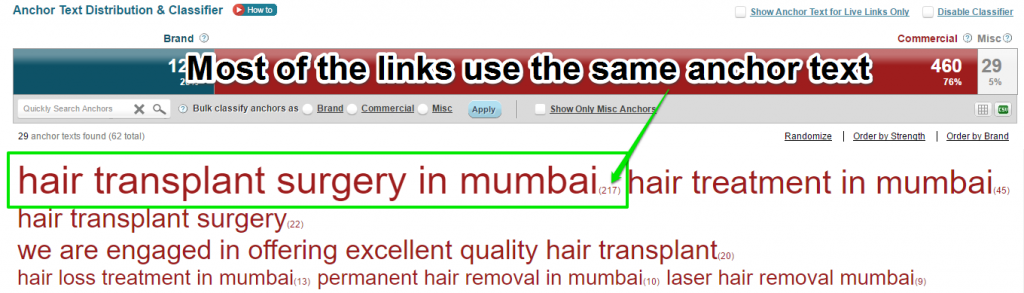

16. Using the Same Commercial Anchor Text

The days of keyword stuffing are gone, or at least this is what we want to believe. Yet, when it comes to anchor texts, not only using them too much can break your traffic but also using the same anchor text for most of your links can be an issue.

Let’s say that you are selling dog food. And most likely you will want the best traffic possible on the keyword “dog food”. Creating/building/earning all of your links with the same anchor text will get you in trouble. If you are having 600 links from which 400 use the anchor “dog food”, you might be facing a problem; it will surely raise a red flag to the search engines. And we all know what search engines do when they are getting these kinds of alarms: they begin applying penalties and move your websites so far from reach of the user that you will not be even listed in top 100.

A variety of anchor texts, branded and commercial keywords all together, is a sign of naturalness and health of your link profile.
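To check how concentrated your own anchor texts are, you could compute the share of each anchor in your link profile. The Python sketch below reuses the 400-out-of-600 “dog food” example from above; the other anchors and the 50% warning threshold are assumptions chosen for illustration, not a limit published by any search engine.

```python
from collections import Counter


def anchor_shares(anchors):
    """Return each anchor text's share of all links, most frequent first."""
    counts = Counter(a.strip().lower() for a in anchors)
    total = sum(counts.values())
    return {anchor: n / total for anchor, n in counts.most_common()}


# Hypothetical link profile: 600 links, 400 of them with the same commercial anchor.
anchors = ["dog food"] * 400 + ["Acme Pets"] * 120 + ["https://example.com"] * 80

WARNING_SHARE = 0.5  # assumed threshold, for illustration only

for anchor, share in anchor_shares(anchors).items():
    flag = "  <-- suspiciously dominant" if share > WARNING_SHARE else ""
    print(f"{anchor}: {share:.1%}{flag}")
```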

Conclusion

Instead of the conclusion, indulge me for a couple of minutes to share a story with you.

Once upon a time, there was an old farmer. One day his horse ran away. When hearing this news, his neighbors came to visit. “Such bad luck,” they said, but the farmer replied: “We’ll see.”

The next morning the horse returned to the farmer, also bringing with it two other horses.

“Just great” the neighbors exclaimed! Again, the farmer answered: “We’ll see.”

The following day, his son broke his leg and therefore he could not help his father with his farming. “That’s terrible,” the neighbors said. “We’ll see,” replied the old farmer.

A couple of days later, the army came to the village to draft new recruits. As the farmer’s son had a broken leg, they did not recruit him. Everyone said, “What a fortunate situation.”

The farmer smiled again – and said: “We’ll see.”

The moral of this story is that everything is contextual. And this applies to everyday happenings as well as to your online marketing, traffic sources and conversion rates. What looks like an opportunity may actually be a setback, and vice versa. We all make changes to our sites with the purpose of getting tons of traffic. We are in a continuous race for inbound links, domain authority, technical SEO, diagnosing traffic drops, search volume and keyword research, and we forget to take a few steps back and see how all of these influence our site’s overall performance. We’ve documented the ranking drop issue in an earlier post too, and you can take a look at it to better understand this phenomenon.

Being dropped in rankings is not anyone’s desire, but it happens to be a reality. And as much as we’d like to put the blame on algorithmic penalties, manual actions or any other common reasons, sometimes we just need to take a closer look at the actions done by ourselves.

