Every day, another fact about SEO is revealed, another important tip is shared, another story is learned, just by opening our email, Twitter account, or smartphone. We have the information at hand. A great deal of news from the internet marketing world passes before our eyes and ears. Make sure you listen to the right sources.

And, speaking of important stuff, we’ve combed through the Webmaster Central Hangouts to reveal the most intriguing facts about SEO that Google has ever shared.

Google Webmaster Central Hangouts are an important source of information you should always keep an eye on. They are open to everyone: you can send questions in advance or ask the experts live, and get insights straight from Google.

You can check Google’s office hours directly on their website and listen to all the recorded videos, going back to 2012, on all kinds of topics, off-page and on-page SEO facts included. We assumed you wouldn’t want to listen to every recorded hangout, so we’ve curated the most interesting ones and summarized them below:

- You Can Get a Site Architecture Penalty

- Mobile PageSpeed Is Not Yet a Ranking Factor

- Search Console Doesn’t Count Knowledge Graph Clicks

- Don’t Canonicalize Blog Pages to the Root of the Blog

- Applying Multiple Hreflang Tags to One URL Is Possible

- No Limit on the Number of URLs That Google Bot Can Crawl

- Now You Can Get ‘Upsetting-Offensive’ Content Flag

- Disavowing Is Still Necessary for a Post-Penguin Real-Time Era

- URL Removal Tool Affects All Domain Variations

- No Specific Limit for Keyword Density in Content

- Content Duplication Penalty Doesn’t Exist

- Better to Add Structured Data Directly on the Page Than Using Data Highlighter

- Have Relevant URL to Rank Your Images Higher in Google

- Low-Quality Pages Influence the Whole Domain Authority (DA)

- Google Works With Over 150,000 Users and Webmasters Against Webspam

- Google Knows Your Work Place and It Isn’t Afraid to Admit It

- Personal Assistant Search Optimization (PASO) Might Be the Future of SEO

- Google Search Console’s Metrics Get Fully Integrated Into Google Analytics

- Object Recognition Works in Combination With Image Optimization for Better Results in Google

- Google Site Search and Custom Search Within Site Will Become One

- HTTPs Ensures That the Information Users See Is What the Owner of the Site Provides

- URLs Should Have Less Than 1,000 Characters

- You Can Have Multiple H1 Elements on a Page

- New Content Should Be Linked High in the Site Architecture

- Google Says It’s Ok to Have Affiliate Links

- Google Will Treat Nofollow Link Attribute as a ‘Hint’

- The Number of Words on a Page Is Not a Ranking Factor

- Google Does Not Render Anything Unless It Returns a 200 Status Code

1. You Can Get a Site Architecture Penalty

“Does Panda take site architecture into account when creating a Panda score?” was one of the questions answered on a Google Webmaster Central Hangout.

Google Panda is an algorithm that looks at the overall quality of a website and its content. In a previous blog post, we detailed how this Google update (the Panda algorithm) can affect websites and how it could actually be a topical authority.

From Google’s point of view, Panda is more of a general quality evaluation that takes everything into account, site architecture included. If the architecture affects the overall quality of the site, you might get a Google Panda penalty for it.

Panda targets “low-quality sites” and “thin content sites” and aims to return higher-quality sites near the top of the search results. If your website has issues that affect its quality, such as a bad structure, you should take some time to fix them.

If you are redesigning a website, creating a new site from scratch, or doing anything else that might affect the architecture of your website, you should take into consideration what John Mueller said.

Follow the full conversation in the Google Webmaster Central office-hours hangout with John from February 24, 2017, at 9:06:

2. Mobile PageSpeed Is Not Yet a Ranking Factor

Recently, another intriguing question was addressed at a Google Webmaster Central Hangout:

How much emphasis does mobile PageSpeed have as a ranking factor?

John Mueller addressed this directly in the video from March 2017 (at 46:44), saying that for the moment Google doesn’t use it at all. So there you have the truth, my friends, straight from a Googler:

Mobile PageSpeed is not an index ranking factor.

As plain and simple as that.

Good mobile PageSpeed improves user experience and is a critical web redesign consideration, but so far it hasn’t been used as a ranking factor for mobile search results.

From an SEO point of view, Google doesn’t take it into account for the moment, but keeping track of mobile PageSpeed is still an important task you should not overlook. It lets you offer a pleasant experience to your users. If they are pleased, they might come back and increase your conversion rates. Invest to gain.

3. Search Console Doesn’t Count Knowledge Graph Clicks

Another intriguing fact about SEO revealed on Google Webmaster Central Hangout is that the Search Console doesn’t count Knowledge Graph sidebar clicks or impressions.

John Mueller said that:

If you look for your company’s name and it appears on the sidebar with a link that goes to your website, it isn’t counted. On the other hand, he said that sitelinks should be counted.

You can listen to his full explanation from the Google Webmaster Hangout in the next video from February 2016 at 30:15:

4. Don’t Canonicalize Blog Pages to the Root of the Blog

Another thrilling fact about SEO revealed in a Google Webmaster Hangout concerns canonicalizing blog pages to the root of the blog. Listen to what John says in the February 2017 hangout at 16:28:

Somebody asked if it’s a correct procedure to set up blog subpages with a canonical URL pointed to the blog’s main page as a preferred version since the subpages aren’t a true copy of the blog’s main page.

Setting up blog subpages with a canonical URL pointing to the blog’s main page isn’t a correct setup, because from Google’s point of view those pages are not equivalent.

And even if Google sees the canonical tag, it ignores it because it assumes the webmaster made a mistake.

This setup is used by a great many websites, but that doesn’t make it right. Companies small and large make mistakes all the time.
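To spot this setup on your own blog, a small script can help. The following is a minimal sketch, assuming you already have the HTML of a page as a string; the class and function names are hypothetical, and the check only compares URLs, so it says nothing about how Google itself evaluates canonical tags.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class CanonicalFinder(HTMLParser):
    """Collects the href of the first <link rel="canonical"> in a page."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        if tag != "link" or self.canonical is not None:
            return
        attr = dict(attrs)
        rel_values = (attr.get("rel") or "").lower().split()
        if "canonical" in rel_values:
            self.canonical = attr.get("href")


def canonical_points_elsewhere(page_url, html):
    """Return True if the page declares a canonical URL other than itself."""
    finder = CanonicalFinder()
    finder.feed(html)
    if finder.canonical is None:
        return False  # no canonical tag at all
    target = urljoin(page_url, finder.canonical)  # resolve relative hrefs
    return target.rstrip("/") != page_url.rstrip("/")
```

A blog post whose canonical tag points at the blog root would return `True` here, which is the case described above and worth reviewing by hand.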

5. Applying Multiple Hreflang Tags to One URL Is Possible

Another exciting SEO fact was unveiled after somebody asked the following question:

Can you assign both x-default and en hreflang to the same page?

John Mueller gave a short but complete answer:

Yes, you can apply x-default, and en hreflang to the same page.

You can also tell Google that a page is the English version for the UK and the default page at the same time. The x-default hreflang attribute doesn’t have to point to a different page. Another option is to set multiple hreflang tags that point to the same page. For example, you can have one each for UK English, Australian English, and American English, with the UK English page as the default for the website.

You can watch the full explanation from February 2017, starting at 21:06:
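As an illustration, the markup for this case can be generated with a few lines of Python. This is only a sketch: the function name and the example.com URL are made up for the example, while the `<link rel="alternate" hreflang="...">` form is the standard way to declare hreflang in a page’s head.

```python
from html import escape


def hreflang_tags(alternates):
    """Build one <link rel="alternate"> tag per (hreflang, url) pair."""
    return [
        f'<link rel="alternate" hreflang="{escape(code)}" href="{escape(url)}" />'
        for code, url in alternates
    ]


# Hypothetical page: the UK English version is also the default version,
# so two hreflang values point at the same URL.
tags = hreflang_tags([
    ("x-default", "https://example.com/uk/"),
    ("en-gb", "https://example.com/uk/"),
])
print("\n".join(tags))
```

Both tags carry the same `href`, which is exactly the situation John Mueller describes as valid.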

6. No Limit on the Number of URLs That Google Bot Can Crawl

The idea that Google can crawl only 100 URLs is a myth.

That is one of the most interesting pieces of information among the facts about SEO exposed on Google Webmaster Central Hangout. Somebody asked if there is a limit to the number of URLs that Googlebot can crawl. John Mueller answered that there is no limit. See the video below from April 2017 (47:58):

Until now, the common belief was that Google could crawl only a hundred pages per website. There is no such limit. He also explained that Google works with a crawl budget: it tries to determine what, and how much, it can and wants to crawl on a given website.

The crawl budget topic was explained on the Google Webmaster Central Blog, along with the types of low-value URLs that can negatively affect crawling and indexing:

- Faceted navigation and session identifiers

- On-site duplicate content

- Soft error pages

- Hacked pages

- Infinite spaces and proxies

- Low quality and spam content.

7. Now You Can Get ‘Upsetting-Offensive’ Content Flag

Google’s effort to stop fake news and offer quality results never ends. Improving search value is an ongoing process.

Paul Haahr, a ranking engineer at Google, even said that they are explicitly avoiding the term “fake news” because it is too vague.

Google is continuously trying to offer quality results, and to that end it has worked with over 100,000 people to evaluate search results.

The people involved are known as Google search quality raters: they run real searches on Google and rate the quality of the pages that appear in the SERP, without altering the results directly. These raters follow a set of guidelines that includes an entire section on the “Upsetting-Offensive” content flag they can use.

Content could be flagged in the following circumstances:

- Has content that promotes hate or violence against a group of people based on criteria including (but not limited to) race or ethnicity, religion, gender, nationality or citizenship, disability, age, sexual orientation, or veteran status.

- Contains content with racial slurs or extremely offensive terminology.

- Includes graphic violence.

- Explicit how-to information about harmful activities (e.g., how-tos on human trafficking or violent assault).

- Other types of content which fall under the upsetting or offensive category.

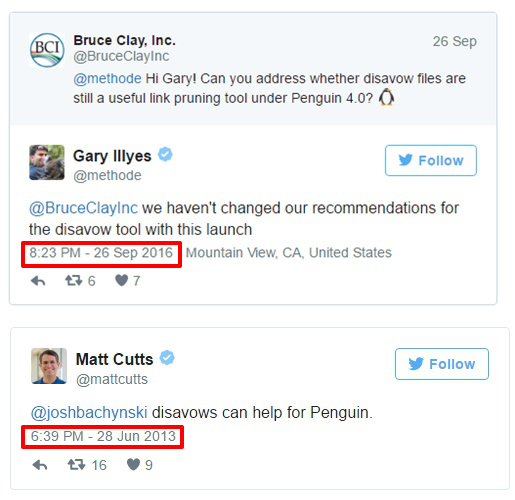

8. Disavowing Is Still Necessary for a Post-Penguin Real-Time Era

Another intriguing fact that Google shared on Google Webmaster Central Hangout is the confirmation of the following:

You should still use the disavow file even though Google Penguin is real time now.

John Mueller explains that if you know your website used shady, unnatural methods to generate backlinks that violated Google’s guidelines, then you need to disavow the links that could harm your website. You need to evaluate your backlink profile to see where you stand.

The intriguing question somebody asked him was “Now that Penguin runs real time would it be correct to think that if we found a few bad links on our site and disavow them, we might see a ranking improvement relatively quickly? Also, does Penguin now just devalue those low-quality links, as opposed to punish you for them?”

John Mueller says that Google looks at the webspam on the whole website to decide what action to take. If you have damaging links and use the disavow file, Google will understand that you don’t want those links to be associated with you.

Google Penguin is a webspam algorithm and it doesn’t focus only on links. It decreases search rankings for those sites that violate Google’s Webmaster guidelines.

Ultimately, disavowing unnatural links helps Google Penguin and is a recommended practice. It is one of the facts about SEO backed up by John Mueller and Gary Illyes:

You can follow the discussion from October 2016 on the following Google Webmaster Hangout with John Mueller about this topic at 23:20:

9. URL Removal Tool Affects All Domain Variations

Take all variations into consideration when you use the URL removal tool, including www/non-www and HTTP/HTTPS. This is not a fix for canonicalization.

If you’re trying to take out the HTTP version after a site move, both the HTTP and HTTPS versions will be removed, which is something you definitely don’t want.

It is recommended to use the URL removal tool only for urgent issues, not for site maintenance.

For example, if you want to remove a page from your website, you don’t have to use the URL removal tool because over time it will disappear automatically as Google will recrawl and reindex those pages.

Listen to John Mueller’s entire dialogue from December 2016 in a Search Console Google Webmaster Central Hangout at 17:18.
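To see which addresses are at stake before you submit a removal, you can list the variations yourself. The sketch below is an assumption-laden illustration, not a description of how the removal tool works internally: it simply enumerates the www/non-www and HTTP/HTTPS forms mentioned above, and the function name is hypothetical.

```python
from urllib.parse import urlsplit, urlunsplit


def domain_variations(url):
    """List the http/https and www/non-www forms of a URL."""
    parts = urlsplit(url)
    host = parts.netloc.lower()
    bare = host[4:] if host.startswith("www.") else host
    variants = []
    for scheme in ("http", "https"):
        for hostname in (bare, "www." + bare):
            variants.append(
                urlunsplit((scheme, hostname, parts.path, parts.query, ""))
            )
    return variants


for variant in domain_variations("https://www.example.com/page"):
    print(variant)
```

If any of the printed addresses is one you want to keep in search results, the removal tool is probably the wrong instrument for the job.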

10. No Specific Limit for Keyword Density in Content

A compelling question about the limit for keyword density in content was recently asked at a Google Webmaster Hangout. John Mueller gave a straightforward answer:

We expect content to be written naturally, so focusing on keyword density is not a good use of your time. Focusing too much on keyword density makes it look like your content is unnatural.

Also, if you stuff your content with keywords, it makes it harder for users to read and understand what your content is about. On top of that, search engines will recognize that instantly and ignore all the keywords on that page.

Instead of concentrating on the limit of keyword density in content, you should aim your attention at making your content easy to read.

Try the following trick instead. Call somebody and read the content out loud over the phone. If they understand what you said within the first 2 minutes, you’re safe: your content can pass as natural.
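For readers unfamiliar with the term, keyword density is usually understood as the share of words on a page that match a given keyword. The sketch below shows that common definition for single-word keywords; it is an assumption on our part, not a formula Google has published, and the function name is hypothetical.

```python
import re


def keyword_density(text, keyword):
    """Share of words in `text` equal to a single-word `keyword`."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if not words:
        return 0.0
    hits = sum(1 for word in words if word == keyword.lower())
    return hits / len(words)


# 2 of the 5 words are "seo"
print(keyword_density("SEO tips for SEO beginners", "seo"))  # 0.4
```

Since Google sets no specific limit, a number like this is at most a rough warning sign of stuffing, not a target to optimize.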

11. Content Duplication Penalty Doesn’t Exist

Content duplication has been a long-debated topic.

Let’s break an SEO myth: duplicate content will bring you a Google penalty. It’s not true. It doesn’t exist. We debated this issue in a previous article as well.

Back in 2013, Matt Cutts, the former head of Google’s Webspam team, said that:

In the worst-case scenario (with spam free content), Google may just ignore duplicate content. I wouldn’t stress about this unless the content that you have duplicated is spammy or keyword stuffing. – Matt Cutts

Google tries to offer relevant results for every search query. That is why, in most cases, the results that appear in SERP are filtered, so the information isn’t shown more than once. It is not helpful for users to see two pieces of the same data on the first page.

In 2014, during a Google Webmaster Central hangout John Mueller said that Google doesn’t have a duplicate content penalty.

We won’t demote a site for having a lot of duplicate content – John Mueller

This was confirmed in June 2016, when Andrey Lipattsev, senior Google search quality strategist, repeated that a content duplication penalty doesn’t exist.

But you could get a penalty if you violate Google’s guidelines by creating content in an automated way: content that is scraped from multiple locations and serves no purpose other than generating traffic.

12. Better to Add Structured Data Directly on the Page Than Using Data Highlighter

The submitted question on this topic was the following:

Is it better to use the Data Highlighter from Search Console, or is it better to add markup on the web page? What’s the best practice?

John Mueller says it’s a great option to use and try out structured data. There are sites with content that needs to have structured data and Data Highlighter is an easy way to test it out.

Implementing structured data on your page, rather than through the Data Highlighter, requires a lot of attention. Yet it is the recommended procedure from a long-term perspective. On-page markup is available to everyone. You can check that it’s implemented correctly and, more importantly, you don’t have to worry about it afterward.

If you mark up each element of your content, your data will still be shown properly when you change your layout, for example. The Data Highlighter, on the other hand, has to learn that change.

You can listen to the whole explanation in the Google Webmaster Hangout from April 2017 (starting at 46:04):
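On-page structured data is often written as a JSON-LD script tag. The following is a minimal sketch of generating one in Python, assuming a schema.org `Article`; the function name and the sample values are hypothetical, and the right type and properties depend on your own content.

```python
import json


def article_json_ld(headline, author_name, date_published):
    """Return a JSON-LD <script> tag describing a schema.org Article."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author_name},
        "datePublished": date_published,
    }
    # Escape "</" so a value can never close the script tag early;
    # "<\/" is still valid JSON for the same characters.
    payload = json.dumps(data).replace("</", "<\\/")
    return '<script type="application/ld+json">' + payload + "</script>"


print(article_json_ld("Facts About SEO", "Jane Doe", "2017-04-12"))
```

Because the markup travels with the page template, it keeps working when the visual layout changes, which is the long-term advantage described above.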

13. Have Relevant URL to Rank Your Images Higher in Google

Another important fact we’ve figured out from Google Webmaster Central Hangout is about the impact of the URL of an image in Google. Can it help Google understand better what an image is about?

And here is what Google had to say about this:

We do look at the URL for images specifically and we try to use that for ranking, but if the URL is irrelevant for that query and nobody is searching for that term, it doesn’t help Google.

The main point is that Google looks at the URL when ranking images. This matters because Google aims to deliver quality results. The correlation between the URL and the query is highly significant, so this is an effective SEO tactic you could apply.

SEO-friendly URLs can ultimately lead to higher perceived relevance, which gives you an edge from an SEO perspective. We know this for a fact from a study we conducted on 34k keywords. Here is what John had to say about this in February 2017, at 40:17:

Let’s say that the file name in a URL is 123 and the image is about flowers. In this situation, the image won’t disappear because of its irrelevant file name, but there might be a problem with how, and for what query, that image will show.

Even though Google is becoming smarter and smarter at object recognition, we still need to help it by offering descriptive information about that image (follow the guidelines from point 19 on this matter).

SEO-friendly URLs are a must for content, images, and any other type of information on your website. Pay close attention when naming your files; this is an SEO fact also backed up by Matt Cutts.
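One simple way to avoid file names like 123 is to derive the name from a short description of the image. The helper below is a hypothetical sketch of that idea; lowercase words joined by hyphens is a common convention rather than a rule stated by Google in the hangout.

```python
import re


def descriptive_file_name(description, extension="jpg"):
    """Turn a short image description into a lowercase, hyphenated file name."""
    slug = re.sub(r"[^a-z0-9]+", "-", description.lower()).strip("-")
    return f"{slug}.{extension}"


print(descriptive_file_name("Red Tulip Flowers"))  # red-tulip-flowers.jpg
```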

14. Low-Quality Pages Influence the Whole Domain Authority (DA)

Another interesting fact about SEO that Google debated on Google Webmaster Central Hangouts was whether low-quality pages influence Domain Authority.

The exact question addressed to Google’s representatives was “Can low-quality pages on the site affect the overall authority?”

In general, when the Google algorithm looks at a website, it also takes into consideration individual pages.

You can find more on this subject in the following Google Webmaster Hangout from March 2017 at 10:05:

Let’s say you have a small website. If it contains low-quality pages, they could affect the domain authority. By contrast, if you have a large site with only a sprinkling of bad pages, the damage is not so big. Google understands that, in the bigger picture, those pages aren’t the main issue.

The main pain point is that low-quality pages affect the way Google views the website overall, and this is something you should fix either way.

Find a solution for those pages. You could either remove them or try to improve their quality.

15. Google Works With Over 150,000 Users and Webmasters Against Webspam

Among the most interesting facts about SEO that Google shared on Google Webmaster Hangout is the way it fights spammers in search. Webspam is a persistent problem these days. Google sounded the alarm after seeing the data in its most recent report, later published on the blog.

In 2016, there was a 32% increase in hacked sites compared to 2015.

But now, with the help of users, the problem of spam in Google search results is being tackled. Last year, Google started working with users, in addition to webmasters, to improve the quality of the SERP, clean it of spammy websites, and make the web a safe environment.

The Webmaster Central staff managed to work with over 150,000 website owners, webmasters, and digital marketers against webspam. Users also tackled webspam by helping Googlers.

Approximately 180,000 spam reports were submitted by users around the world, as we spotted on the Google Webmaster Central Blog.

Besides making Google Penguin real-time and improving the algorithm to take action against webspam, the webspam team at Google performed manual reviews of sites’ structured data markup and took manual action on more than 10,000 sites that did not meet the quality guidelines and used black hat SEO techniques.

16. Google Knows Your Work Place and It Isn’t Afraid to Admit It

Nowadays, we take our smartphones everywhere. It’s as if they were attached to our hands, because we keep so much information on them. We are logged into email, we have easy access to our accounts and our contacts, and we can find anything we want with a quick Google search, even while walking or riding.

Google Assistant makes it easier to search and find what we want by letting us make voice searches and personalize our content on Android, even when we’re offline.

We’ve discussed the voice search topic at length in a previous blog post, which I invite you to browse.

With this feature you can take lots of actions on your phone and on Google search:

- Find contacts, call, email, or send messages on social apps (Facebook, Twitter, etc.).

- Set different actions on your personal phone (set alarm, turn on NFC, turn on Bluetooth, brightness, take a picture).

- Organize your calendar and see future events or schedule a meeting.

- Open apps you have on your smartphone.

- Listen to songs.

On a more complex level, you could ask the Google Assistant questions such as “what do I have to do today?”, “How long will it take to go from Princeton to New York?”, “Book a table at East Restaurant”.

You can see the personalized touch on your phone if you search in Apps for a person, task, event, and so on. You’ll find the results for that specific query from all your installed apps (as you can see in the screenshots below).

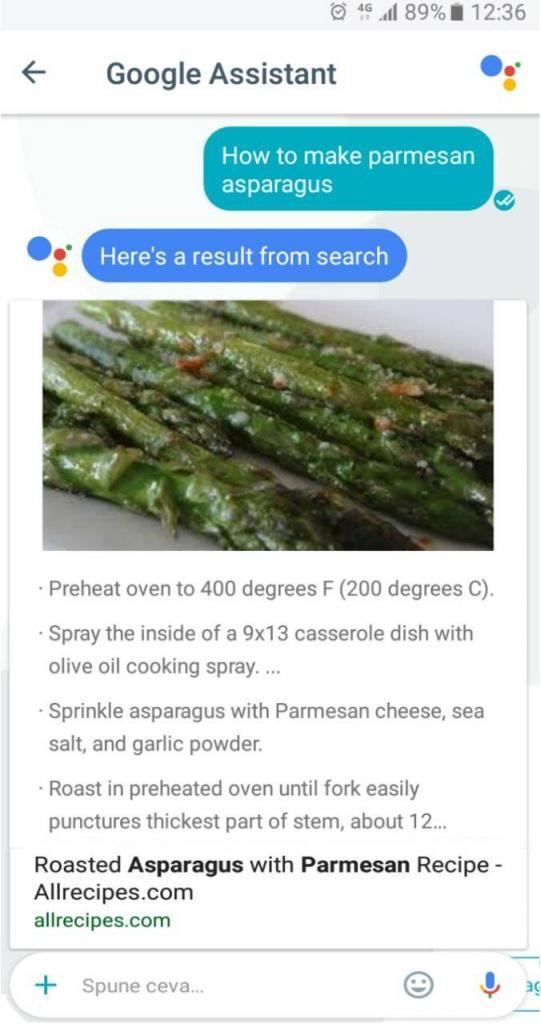

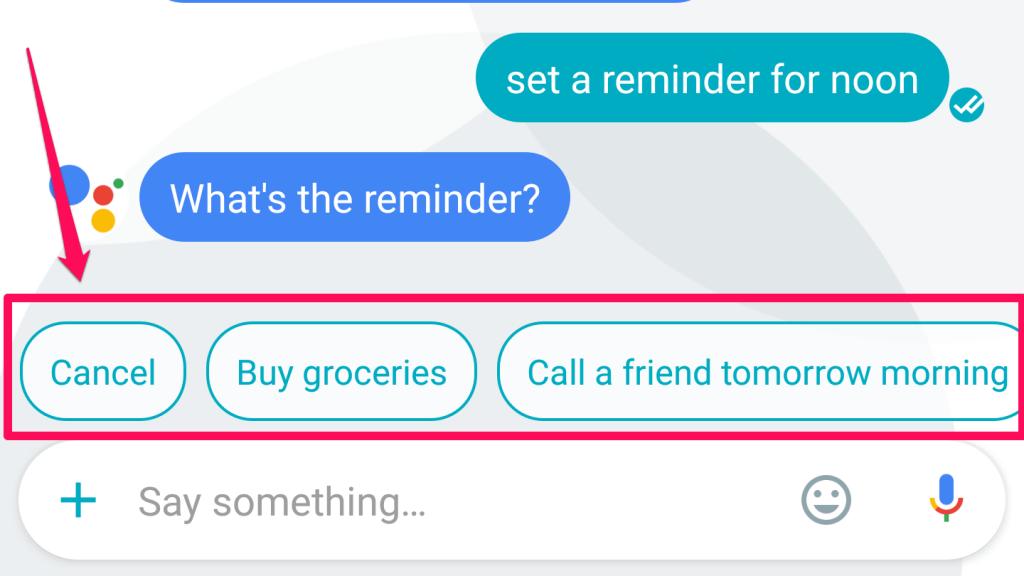

17. Personal Assistant Search Optimization (PASO) Might Be the Future of SEO

PASO stands for Personal Assistant Search Optimization. PASO might be the future of SEO because mobile devices keep evolving, now with Google Assistant and AMP for mobile optimization.

Google is trying to simplify the search process and make it as easy as possible to get the information you want with fewer taps on your mobile device.

One important reason PASO might be the future is that it offers personalized information to each user. Keep this in mind if you want improved search engine rankings.

Google Assistant is the quickest and easiest way to get your hands on information. It doesn’t matter whether you ask for a recipe, an explanation or definition, or directions. See the example below:

You ask, and Google Assistant gives you the information in a heartbeat. The personal assistant result is likely to have a higher CTR, because it is tailored to your question and it’s the only result you see, unlike the list of results from a search engine. Besides that, it offers you suggestions related to your previous search.

If you want to get featured on Google Assistant, you need to make sure you answer a question and your piece of content is optimized for humans.

You should follow the same steps as when optimizing your content for position zero. One way is to ask the question and then answer it. This gives you a shot at being displayed in the answer box.

18. Google Search Console’s Metrics Get Fully Integrated Into Google Analytics

Google Search Console helps webmasters manage the way their websites appear in the SERP, while Google Analytics lets users integrate services such as Webmaster Tools, AdWords, and other tools to see results by source (paid search, organic search).

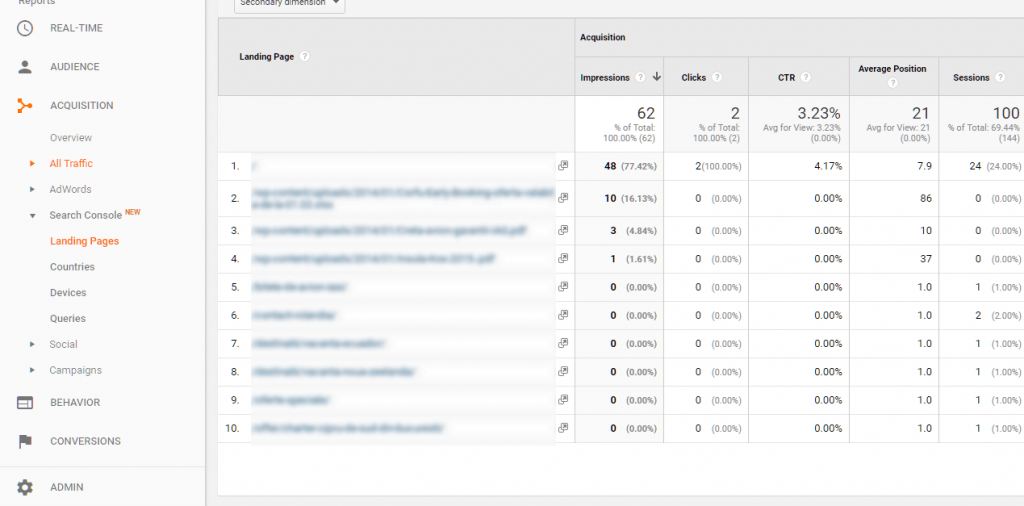

Over the years, users could link Google Webmaster Tools with Analytics, but they could only see the results separately, in different sections. The Search Console results appeared in Google Analytics under the Acquisition section, as you can see in the next screenshot (this option is still available):

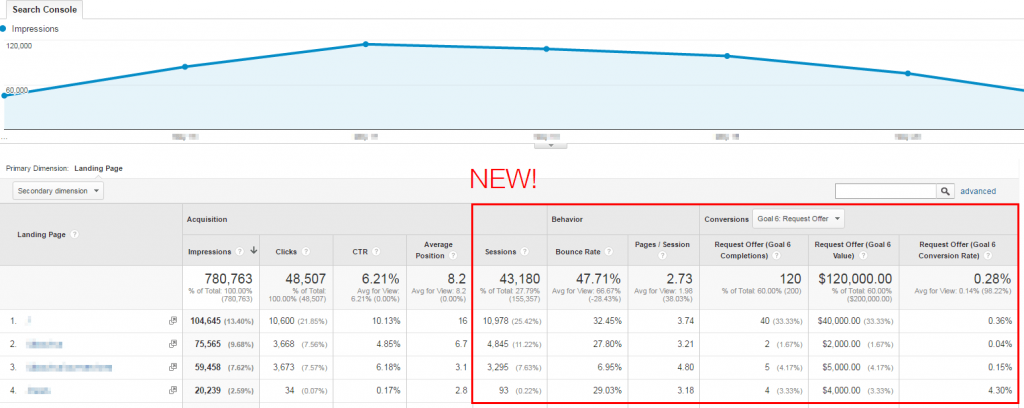

In the old version, you could only see how users arrived at the website, not what they did once they got there. Now, with this improvement, webmasters can see all the metrics in one place and make decisions based on the combined data from the two tools.

You have access to the Acquisition, Behavior, and Conversions data in one place. Thanks to this update, you have many more ways to use the search data, as mentioned on the Google Webmaster Central Blog:

- discover the most engaging landing pages that bring visitors through organic search;

- discover the landing pages with the highest engagement that attract few visitors through organic search;

- detect the best ranking queries for each landing page;

- segment organic performance for each device in the new Device report.

19. Object Recognition Works in Combination with Image Optimization for Better Results in Google

The question that popped out in another Google Webmaster Central Hangout was:

Google is getting better and better at object recognition. Does this mean that we no longer need to optimize our image descriptions with descriptive file names, alt tags, title tags, etc.?

Having optimized images is a good indication for getting improved search engine rankings.

At this moment, we still need to optimize our images in order to rank as high as possible.

Maybe in the future we won’t have to do that. For the time being, a relevant file name, alt description, title tag, and caption on the image will help Google understand it. The text around the image also helps Google understand what the page is about and what it should rank for.

Maybe in the future, 5-10 years from now, if things go very well, all that information won’t be necessary, but for now optimizing images helps object recognition to offer better results.

You can listen to the whole conversation on intriguing facts about SEO in the next video from February 2017, at 13:07:

20. Google Site Search and Custom Search Within Site Will Become One

Do you know if Google Site Search is going to shut down and transfer to Custom Search? What’s the impact?

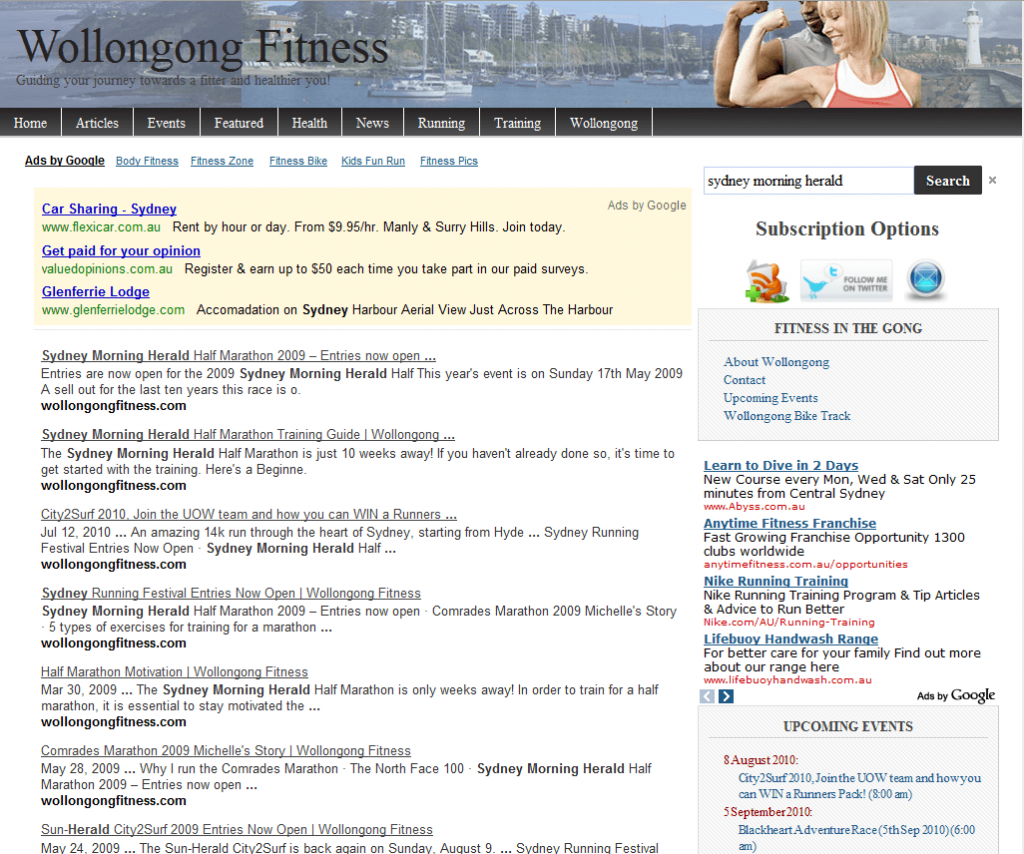

This is the special site search you can set up to search within your website. You can see what it looks like in the example below:

Custom Search is quite similar, as you can see in the next screenshot:

Google Site Search will be combined with Custom Search because they do almost the same thing. Site Search is used for one website, while Custom Search can be used on several sites or just one.

You can also listen to what John Mueller from Google said about this change in February 2017, even though he isn’t working directly on this project (32:32):

21. HTTPs Ensures That the Information Users See Is What the Owner of the Site Provides

Another interesting SEO fact debated on Google Webmaster Central Hangout was about how relevant HTTPs is as a ranking factor for sites with information only.

The official response from Google is that:

HTTPS is relevant for any type of website. It doesn’t matter if the site has information only; it is still relevant. HTTPS isn’t just for encrypting information like credit card numbers and passwords; it also ensures that the information users see is what the owner of the website is providing.

A common attack takes place in hotels, airports, cafes, and other public spaces that offer free Wi-Fi access. Attackers can intercept HTTP pages and add tracking code or ads to them because the owner of the website failed to secure the site. I bet that nobody wants spammy ads on their website, even if it’s informational only.

HTTPS helps users see the content that was meant to be shown.

You can find more on this matter in the video below (56:30), recorded in February 2017:

22. URLs Should Have Less Than 1,000 Characters

In July 2019, John Mueller recommended keeping URLs under a thousand characters. While that might sound like a lot, keep in mind that subfolders can greatly expand the length of a URL. Moreover, studies have shown that shorter URLs carry lots of SEO advantages. A concise URL can help you rank higher in the search results. Plus, it comes in handy for casual users who don’t bother to remember the exact address.

The URLs should be short enough to be easily recognized.

You can review the full answer in the next recording of the Webmaster Central Hangouts:

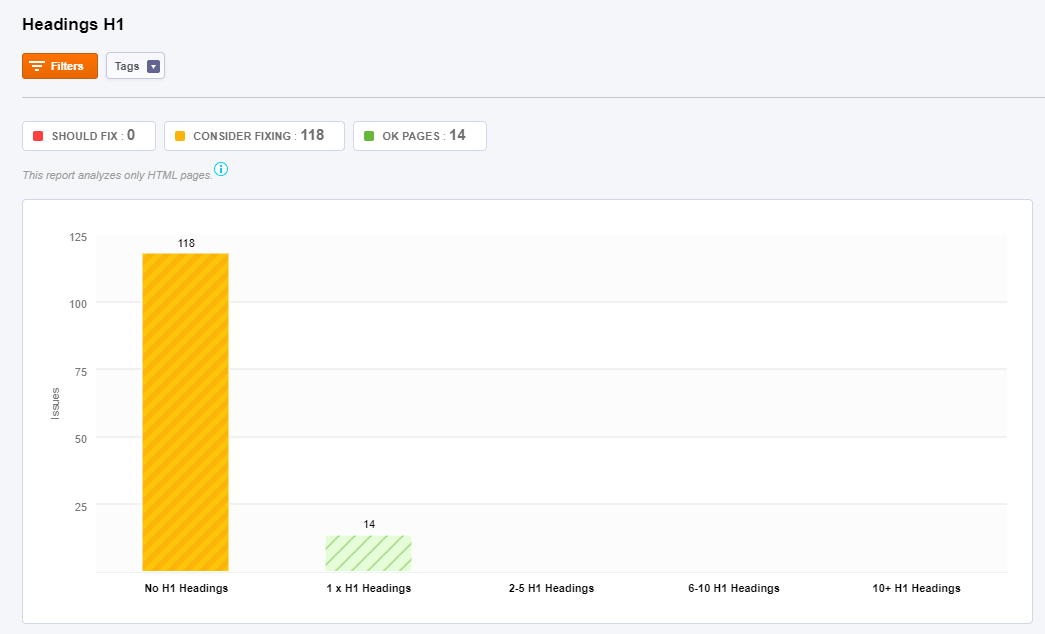
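A quick way to audit this is to flag any URL at or above the limit. The sketch below assumes you already have a list of URLs (for example, from your sitemap); the function name is hypothetical, and the 1,000-character threshold comes from the recommendation above.

```python
def too_long_urls(urls, limit=1000):
    """Return the URLs whose length is not under `limit` characters."""
    return [url for url in urls if len(url) >= limit]


sample = [
    "https://example.com/blog/seo-facts",
    "https://example.com/" + "a" * 1000,  # artificially long, for illustration
]
print(len(too_long_urls(sample)))  # 1
```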

23. You Can Have Multiple H1 Elements on a Page

John Mueller said that you can have as many H1 tags as you want on a single page. If you have ever thought that using a single H1 tag per page is the recommended way to proceed, you should know that you can have multiple H1 headings. Don’t worry about it.

As many as you want.

— 🍌 John 🍌 (@JohnMu) April 12, 2017

If you look at the following video, you can find out the explanation, too.

With HTML5, it is not a problem to have multiple H1’s. It is common to have them in different parts of the page, in your website’s template, theme or other sections. Whether you have HTML5 or not, it is completely fine to use multiple heading tags of the same type on a page.

On the other hand, having no H1 tag on a page is a problem that should be fixed. It means the page lacks a main heading, so neither Google nor the user would know what it’s about.

Did you know?

The Site Audit from cognitiveSEO automatically classifies the headings and shows you all the related issues.

Add the website to audit and the tool will extract information regarding indexability, content (title, headings, duplicate pages, thin content, canonical pages, mixed content, images), architecture, mobile, and performance. In the Content section you’ll get a list of all the heading types and graphs with information from your site.
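If you want to check a page by hand, counting the H1 elements takes only a few lines with Python’s standard library. This is a minimal sketch with hypothetical names, assuming you already have the page’s HTML as a string; a count of zero is the case worth fixing, while any count above zero is fine.

```python
from html.parser import HTMLParser


class H1Counter(HTMLParser):
    """Counts the opening <h1> tags in an HTML document."""

    def __init__(self):
        super().__init__()
        self.count = 0

    def handle_starttag(self, tag, attrs):
        if tag == "h1":
            self.count += 1


def count_h1(html):
    counter = H1Counter()
    counter.feed(html)
    return counter.count


print(count_h1("<h1>Main</h1><section><h1>Sidebar</h1></section>"))  # 2
```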

24. New Content Should Be Linked High in the Site Architecture

A well-structured architecture can change the fate of your website drastically. Web crawlers, like Googlebot, crawl the website architecture. The goal is to index the content and return it in the search results.

Sometimes crawlers can’t discover your pages and you need to help them. Google admits that it doesn’t always find all the pages on your site. This can happen because it can’t crawl them, or because it can’t understand them well enough to index them.

To help Google crawl and index your content, make sure you have a sitemap and that new content is linked high up in the site architecture. If there is anything you want to push a little in the search results, adding it higher in the site architecture will definitely help Google.

John Mueller confirms that this is the correct way to do it.

| What also makes a big difference for us, especially if your Homepage is really important for your website, is that newer content is linked pretty high within the structure of your website, so maybe even on your Homepage. | |

| John Mueller | |

| Webmaster Trends Analyst at Google | |

You can find the full script below:

25. Google Says It’s Ok to Have Affiliate Links

A question that came up in one of the latest Google Webmaster hangouts concerned the need for affiliate links and the correct way to mark them. Google considers affiliate links to have a commercial background, since you’re trying to make some money by pointing to a distributor or somebody that you trust.

John Mueller confirms it:

| From our point of view, it is perfectly fine to have affiliate links. That’s a way of monetizing your website. We expect that these links are marked appropriately so that we understand that those are affiliate links. | |

| John Mueller | |

| Webmaster Trends Analyst at Google | |

One option is to use rel=“nofollow” or the newer rel=“sponsored”. The sponsored rel link attribute was introduced in September 2019 to mark links that were created as part of advertising, sponsorships, or similar agreements.

The additional attributes (rel=“sponsored” and rel=“ugc” – for user-generated content) are meant to help Google understand the nature of links:

| Links contain valuable information that can help us improve search, such as how the words within links describe content they point at. Looking at all the links we encounter can also help us better understand unnatural linking patterns. By shifting to a hint model, we no longer lose this important information, while still allowing site owners to indicate that some links shouldn’t be given the weight of a first-party endorsement. | |
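To check whether affiliate links are marked appropriately, you could scan a page’s HTML and flag affiliate links that carry neither rel="sponsored" nor rel="nofollow". The following is a minimal sketch using Python’s standard library; the domain names, the sample HTML and the function name `unmarked_affiliate_links` are assumptions made for illustration.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse


class LinkRelAuditor(HTMLParser):
    """Collect (href, rel tokens) for every <a> tag in a document."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attr_map = dict(attrs)
        href = attr_map.get("href")
        if href is None:
            return
        # rel can hold several space-separated tokens, e.g. "noopener nofollow".
        rel_tokens = set((attr_map.get("rel") or "").lower().split())
        self.links.append((href, rel_tokens))


def unmarked_affiliate_links(html, affiliate_domains):
    """Return affiliate hrefs that lack both rel="sponsored" and rel="nofollow"."""
    auditor = LinkRelAuditor()
    auditor.feed(html)
    flagged = []
    for href, rel in auditor.links:
        host = urlparse(href).netloc.lower()
        if host in affiliate_domains and not rel & {"sponsored", "nofollow"}:
            flagged.append(href)
    return flagged


# Hypothetical page fragment and affiliate domains.
SAMPLE_HTML = """
<p><a href="https://shop.example.com/item?ref=me">Unmarked</a>
<a href="https://shop.example.com/other?ref=me" rel="sponsored">Marked</a>
<a href="https://partner.example.net/x" rel="noopener nofollow">Also marked</a>
<a href="https://blog.example.org/post">Editorial</a></p>
"""

flagged = unmarked_affiliate_links(
    SAMPLE_HTML, {"shop.example.com", "partner.example.net"}
)
print(flagged)
```

Only the first link is flagged here, because it points to an affiliate domain without any qualifying rel value.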

Follow the whole explanation from John in the next video:

26. Google Will Treat Nofollow Link Attribute as a ‘Hint’

Google now treats all link attributes (sponsored, ugc and nofollow) as hints for ranking purposes. For crawling and indexing purposes, nofollow becomes a hint as of March 1, 2020. That means Google might count a link as credit, or consider it as part of spam analysis or for other ranking purposes.

The company is making the change to better identify link schemes while still considering the link attribute signals.

| For example, if we see that a website is engaging in large scale link selling, then that’s something where we might take manual action, but for the most part, our algorithms just recognize that these are links we don’t want to count then we don’t count them. | |

| John Mueller | |

| Webmaster Trends Analyst at Google | |

27. The Number of Words on a Page Is Not a Ranking Factor

A participant in one of the Google Webmaster Central Office Hours Hangouts asked that YouTube be removed from search results:

Please remove YouTube from Google search results. Let websites compete with websites. It’s not fair when I write a 4,000-word article covering every aspect of a topic and then get outranked by a 500-word article just because this website has more authority.

The fact that Google offers different types of content for a search query means diversity. There are different ways of finding relevant content to each individual user. The number of words on a page doesn’t necessarily reflect relevancy for a search query.

| John says that the number of words of a page is not a factor they use for ranking. It doesn’t matter if you have 4,000 words on a single webpage and somebody else has 500 words, your page won’t be automatically more relevant than another page. | |

| John Mueller | |

| Webmaster Trends Analyst at Google | |

Rather than focusing on the number of words, consider the quality of your content. Instead of writing an extra article, make sure that all the content on your site is of the highest quality possible.

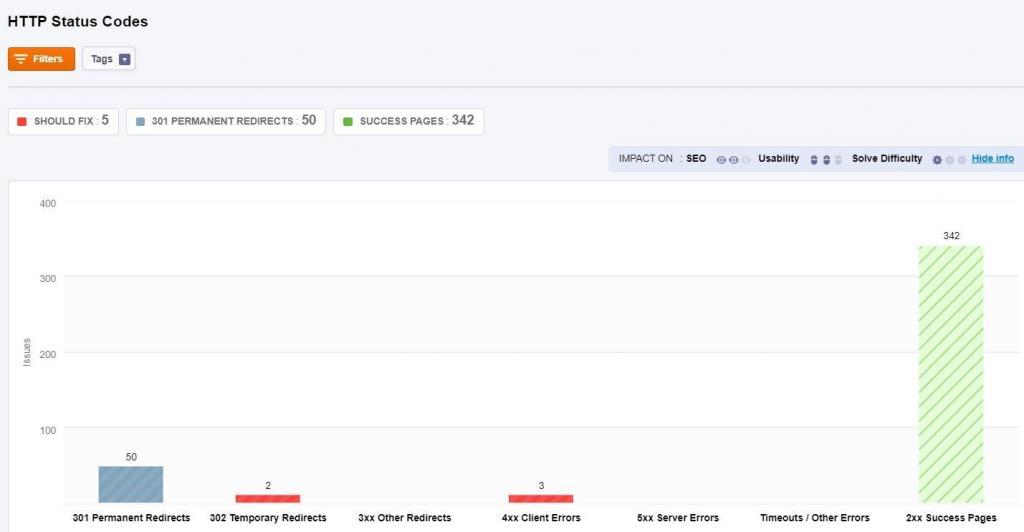

28. Google Does Not Render Anything Unless It Returns a 200 Status Code

A question about status codes came up in one of the Google Webmaster Hangouts:

Does Google check status code before anything else, for example for rendering content?

John said that they do check the status code. Google does not render anything unless the page returns a 200 status code.

| We index the content or render content if it’s a 200 status code, but if it’s 400 or 500 error or redirect then obviously those are things we wouldn’t render. | |

| John Mueller | |

| Webmaster Trends Analyst at Google | |

If you have a beautiful 404 page, that’s very good but that page won’t be indexed in Google.

If it returns 404 then we just won’t render anything there.

The same thing happens with redirects. Say you have page A and you redirect it to page B. According to Google, only page B, with its new content, will be shown, and page A as you knew it won’t be indexed.
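The status code and redirect behavior described above can be sketched as a small function that follows recorded redirects and reports which URL, if any, ends with a 200 response. This is only an illustration of the rule as stated in the hangout, not Google’s actual pipeline; the crawl data, the hop limit and the name `resolve_indexable_url` are assumptions.

```python
def resolve_indexable_url(start_url, responses, max_hops=10):
    """Follow recorded redirects from start_url.

    `responses` maps URL -> (status code, redirect target or None).
    Returns the final URL if it answers 200, otherwise None.
    """
    url = start_url
    seen = set()
    for _ in range(max_hops):
        if url in seen or url not in responses:
            return None  # redirect loop, or URL missing from the crawl data
        seen.add(url)
        status, location = responses[url]
        if status == 200:
            return url
        if 300 <= status < 400 and location:
            url = location  # follow the redirect to its target
            continue
        return None  # 4xx/5xx (or a redirect without a target)
    return None  # chain longer than max_hops


# Hypothetical crawl results.
CRAWL = {
    "/page-a": (301, "/page-b"),
    "/page-b": (200, None),
    "/old": (404, None),
    "/loop-1": (302, "/loop-2"),
    "/loop-2": (302, "/loop-1"),
}

for url in CRAWL:
    print(url, "->", resolve_indexable_url(url, CRAWL))
```

Here `/page-a` resolves to `/page-b`, while the 404 page and the redirect loop resolve to nothing.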

To fix any issues on your website and feed Google the right content, use the Site Audit from cognitiveSEO and then check your sitemap. Google looks to sitemaps to understand which pages to crawl and index. Pages that return a 301 status code no longer technically exist, so there’s no need to keep them in the sitemap, where they only waste crawl budget. Keep only the final redirect URL.

Because pages with 301 status codes no longer technically exist, there’s no point asking Google to crawl them.
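As a rough sketch of that sitemap cleanup, the snippet below builds a sitemap that lists only URLs known to return 200. The URL list and the `build_sitemap` helper are hypothetical, and the status codes are assumed to come from an audit you have already run. The namespace is the standard sitemaps.org 0.9 one.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(url_statuses):
    """Return sitemap XML containing only the URLs whose status code is 200."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, status in sorted(url_statuses.items()):
        if status != 200:
            continue  # skip redirects (3xx) and errors (4xx/5xx)
        url_el = ET.SubElement(urlset, "url")
        ET.SubElement(url_el, "loc").text = url
    return ET.tostring(urlset, encoding="unicode")


# Hypothetical audit results: URL -> status code.
AUDIT = {
    "https://example.com/page-a": 301,
    "https://example.com/page-b": 200,
    "https://example.com/gone": 404,
}

sitemap_xml = build_sitemap(AUDIT)
print(sitemap_xml)
```

Only `page-b` ends up in the output, which matches the advice to keep the final redirect URL and drop the rest.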

Did you know?

The Site Audit from cognitiveSEO automatically shows the status code for your webpages.

Add the website to the audit and the tool will extract information about your pages. Correct any issues and make sure your pages return a 200 status code. A well-optimized site should not have any 3xx, 4xx or 5xx issues.

Below you can find the full video:

Conclusion

Take these SEO facts into account, as they will help you plan your search engine optimization strategy, your content marketing strategy, or any online marketing decision you make for your business and your website. It’s a win-win relationship: you will be up to date with SEO news and (hopefully) you will follow Google’s recommendations. And you can stay connected really easily: check the Google Webmaster Central office hours and see what’s going on.

Of course, keeping track of ALL the hangouts can be a burden. This is why the SEO facts above are important for every webmaster and shouldn’t be ignored. In the long run, don’t lose focus on your plan and stick to your goals. These videos can help everyone, rookies and SEO experts alike. They are quite a juicy resource, covering keyword research, content creation, link building and other effective SEO strategies and tactics.

Getting clicks from organic searches is still an important advantage on the lead generation path. These intriguing facts about SEO can be applied in your social media marketing strategy, in the whole inbound marketing process, and in any other strategy, as long as you have an online presence.

Furthermore, other domains can positively influence your SEO efforts, if you’ve done your job right. If you follow the previous tips for boosting SEO you could have a good chance to succeed.


Interesting read, thanks Andreea. Good to get clarification on the Mobile PageSpeed issue, although I do think it will eventually become a ranking factor.

Thanks, Dave! You might be right. If we hear something, we’ll let you know.

Excellent post Andreea – so many useful quotes and snippets that I can use to refute common myths for my students. Thank you.

Thank you, Kate. Good to hear that. Hope the students will appreciate it!

Wow, it is very thorough post. Now I have something to read during the weekend. Just looking at the headlines, I see that predictions about bigger influence of PASO are true.

Thank you, PJ! Enjoy the reading.

Very interesting, especially the SEO myths that we see around everyday and now we realize that they are actually nonsense.

It is best to make quality content and respect the natural parameters of writing. It can seem a little difficult but is the best thing an SEO agency can advise.

Original message: Muy interesante, terminó con mitos que en el SEO son muy cotidianos y que ahora vemos que finalmente son una tontería.

Lo mejor es hacer un contenido de calidad y que respete los parámetros naturales de redacción. Si parece un poco complejo pero siempre empre está la opción a una agencia SEO que pueda asesorar.

Hi, Isabel! Thank you for your feedback. Really glad you find it interesting. Hope to see you more on the blog.

This is a fantastic resource Andreea!

Just an anecdote about HTTPS: I noticed an almost immediate ranking boost when I added SSL to my site. Number of organic keywords I ranked for nearly increased 1.5 times within a week of adopting HTTPS.

So anyone still on the fence about this, now might be a good time to switch.

I’ll be adding this page to my list of growth hacking resources by the way. Great list!

Thank you, Puranjay!

We did an entire article about switching to HTTPS in that area: https://cognitiveseo.com/blog/13431/recover-facebook-shares-https/

Thank you for the input. Nice to hear it worked so good for you.

I’m the one who asked the 5th question regarding multiple hreflang tags assigned to one page!

Thanks for sharing these takeaways Andreea. I can imagine how difficult it is to watch through all videos and collect all the insights. Nice work!

Nice to meet you!

It was a little bit of work, but it was all worth it.

Thank you!

So, if I want to make a section on my website with news from other websites, I won’t be penalized by Google or have my ranking go down?

Hi, Javier! As long as you follow Google’s Quality Guidelines, you should be alright.

Great insight! Thank you!

I didn’t know about this hangouts thing that Google does. It seems very helpful, but I’m sure a lot of us are always thinking “is this the true answer or just the answer that they want us to think is true?”

Anyways, very good resume, keeping your blog on my bookmarks 🙂