This is a TRUE & SUCCESSFUL story written and documented by Florian Hieß, a digital performance strategist specializing in SEO, SEA and conversion optimization. He works at an agency that creates the online strategies of top national and international companies. More details about the author can be found at the end of the article.

In this case study I look at a local business, a web design agency, to identify what went wrong with its past link building strategy and what must be done to rise like a phoenix from this link penalty. As you can see in the SEO visibility chart, the domain built up quite good visibility for a local business from 2013 until the Penguin Everflux update at the end of 2014. After that the visibility goes down steadily and will not come back up unless action is taken.

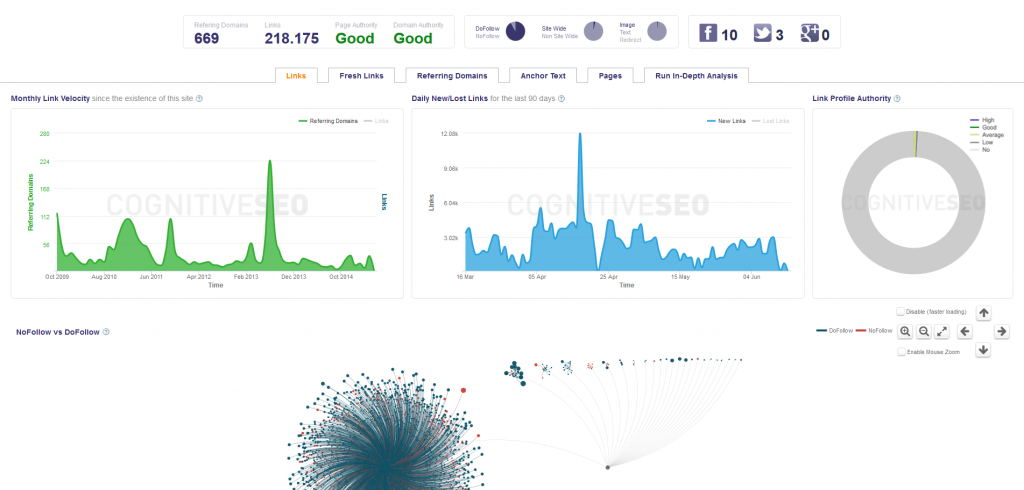

First of all I got an overview with the Site Explorer tool in cognitiveSEO. Here I saw that, for a local business, the link velocity is constantly pretty high, with some peaks standing out. But there are no details here that would explain why this domain is going down. To see more details I set up a campaign. The campaign wizard asks you to enter your domain and some competitor domains, and to configure link analysis and rank tracking. The tool then needs some time to crawl and prepare all the data, but you get a notification in your inbox when everything is ready for a deep dive.

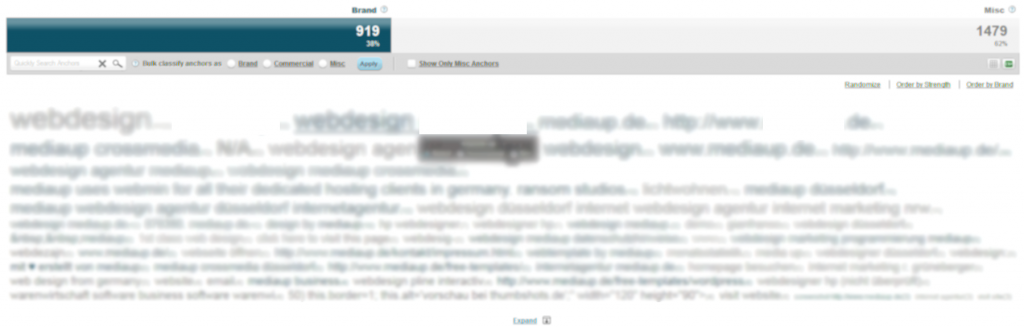

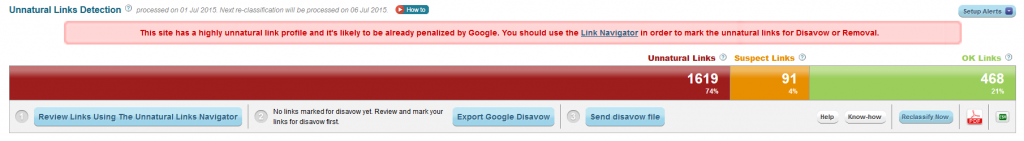

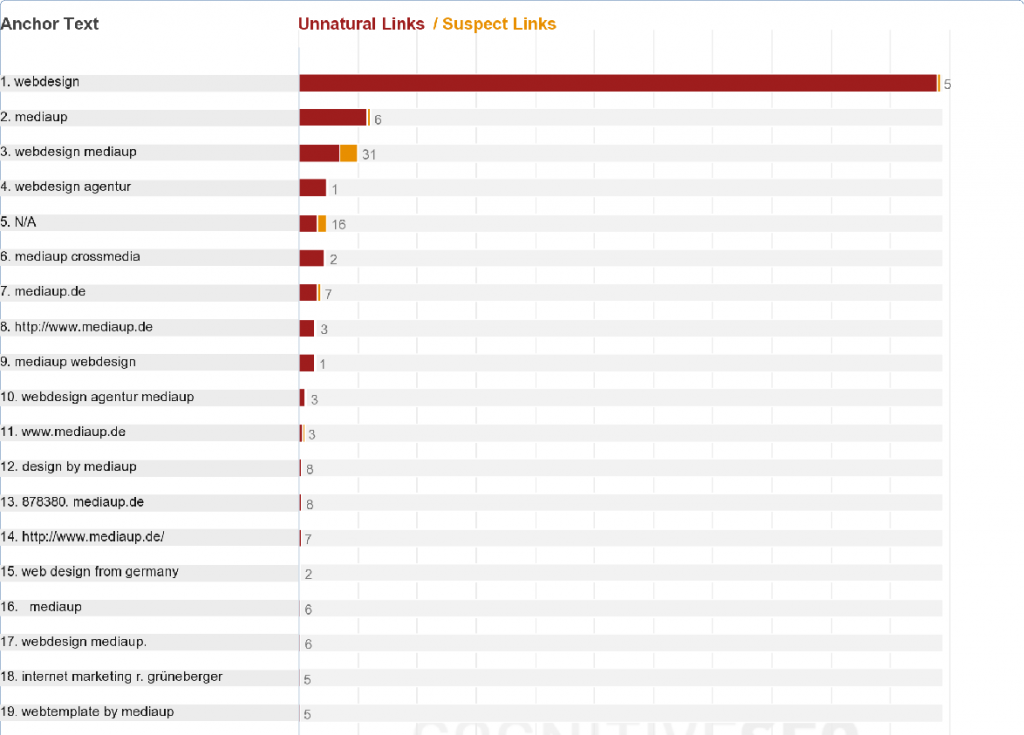

Then I took a look at the link risk panel to see how many unnatural and suspect links were found automatically for this website. To rate them correctly you should classify your links first. Normally they are classified pretty well automatically, but it’s worth going through all anchor texts to check whether the algorithm got it right. This step is very important for building a solid data basis for the in-depth inbound link analysis. Now you can see the total number of links and the ratio of brand, commercial and miscellaneous anchor texts. This step also produces a rating and categorization of your links. With 1,673 unnatural, 110 suspect and 500 good links, checking all the websites where links are placed sounds like a lot of work. But before we take action, let’s go a bit deeper into the backlink structure and the data we have.
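To make the anchor text classification step more concrete, here is a minimal Python sketch of how brand, commercial and miscellaneous anchors could be separated and turned into ratios. It is not how cognitiveSEO classifies links internally; the term lists and the function names `classify_anchor` and `anchor_ratios` are assumptions made up for illustration.

```python
from collections import Counter

# Hypothetical term lists; replace them with the brand name and the
# money keywords of the site you are auditing.
BRAND_TERMS = {"example agency", "example-agency.com"}
COMMERCIAL_TERMS = {"webdesign", "webdesign agency"}

LABELS = ("brand", "commercial", "misc")


def classify_anchor(anchor: str) -> str:
    """Return 'brand', 'commercial' or 'misc' for one anchor text."""
    text = anchor.strip().lower()
    if any(term in text for term in BRAND_TERMS):
        return "brand"
    if any(term in text for term in COMMERCIAL_TERMS):
        return "commercial"
    return "misc"


def anchor_ratios(anchors: list[str]) -> dict[str, float]:
    """Share of each anchor class as a fraction of all anchors."""
    counts = Counter(classify_anchor(a) for a in anchors)
    total = sum(counts.values())
    if total == 0:
        return {label: 0.0 for label in LABELS}
    return {label: counts[label] / total for label in LABELS}


if __name__ == "__main__":
    sample = ["Example Agency", "webdesign agency", "click here", "Webdesign"]
    print(anchor_ratios(sample))
```

A simple substring match like this will misclassify some anchors, which is exactly why a manual pass over the anchor texts is still worth the time.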


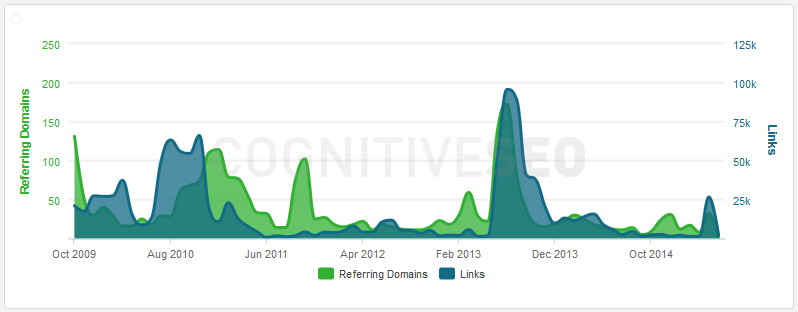

The graph is based on current and historical data and gives you a quick overview of what happened in the past.

In the case of our local service we can see that a pretty good number of new domains linked to the website every month, but also that in some time frames there were far more links than domains, which points to sitewide link placement. In the screenshot below you can also see some peaks, possibly from focusing too much on link building at that time. This is the referring domains graph, which shows the number of referring domains and links over the last years.
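The "far more links than domains" signal can be checked with simple arithmetic on monthly numbers. The following Python sketch flags months in which the ratio of new links to new referring domains is unusually high. The input format, the function name `sitewide_suspects` and the threshold of 5 links per domain are assumptions for illustration, not values taken from the tool.

```python
def sitewide_suspects(
    monthly: dict[str, tuple[int, int]], threshold: float = 5.0
) -> list[str]:
    """Return the months whose links-per-domain ratio reaches the threshold.

    `monthly` maps a 'YYYY-MM' string to (new_links, new_referring_domains).
    The threshold is a hypothetical cut-off and should be tuned per profile.
    """
    flagged = []
    for month, (links, domains) in sorted(monthly.items()):
        # Months without any new referring domain cannot be rated.
        if domains > 0 and links / domains >= threshold:
            flagged.append(month)
    return flagged


if __name__ == "__main__":
    # Made-up numbers, only to show the shape of the input.
    history = {
        "2013-01": (40, 20),   # 2 links per domain
        "2013-02": (600, 25),  # 24 links per domain
        "2013-03": (30, 0),    # no new domains, skipped
    }
    print(sitewide_suspects(history))
```

Months flagged this way are only candidates; whether the links really are sitewide footer or sidebar links still has to be confirmed on the linking sites.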

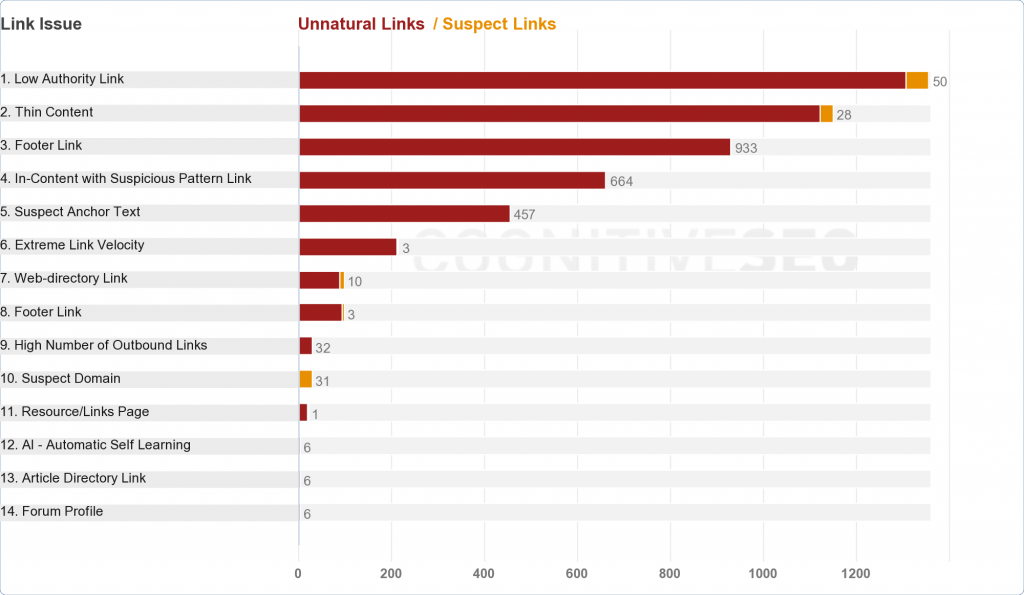

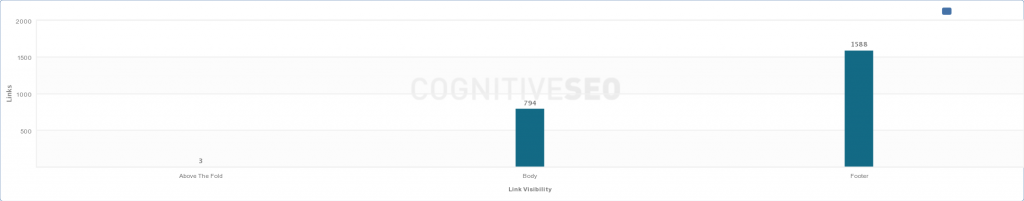

This graph from the in-depth analysis shows the link risk rating of the analyzed backlink profile, especially the unnatural and suspect links, which do not comply with the Google guidelines. In this case the most common unnatural patterns are low authority links, thin content, footer links and suspicious links.

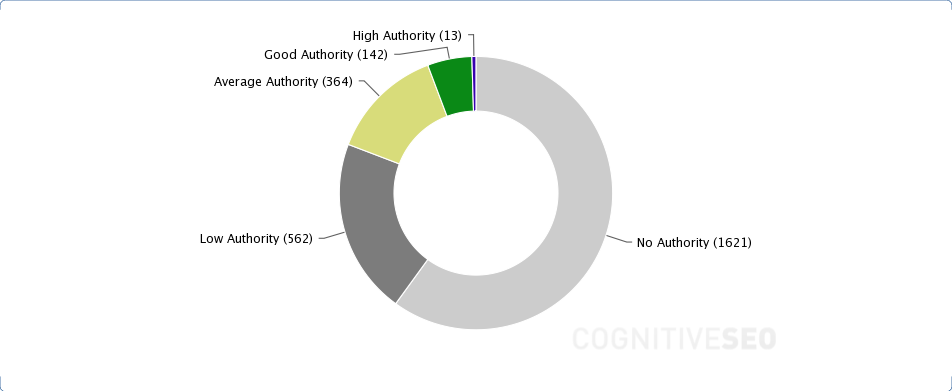

Based on this information it’s no big surprise that more than three quarters of all links are low quality or have no authority.

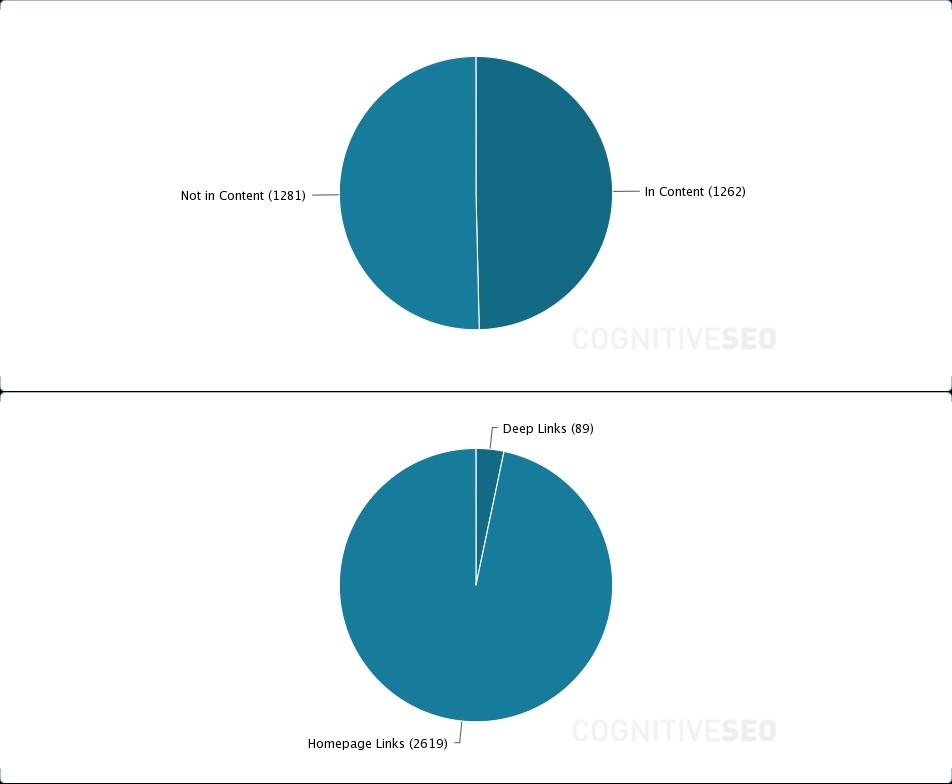

A small part has average authority, some are good quality links and almost none are high authority. Two further pie charts show that about 50% of all links are not in the content and the other 50% are in-content links. 95% of all links point to the homepage and the rest are deep links.

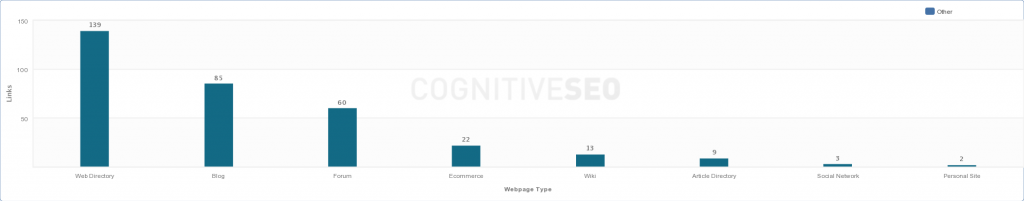

In the following three bar charts we take a quick look at the diversification of link sources. Web directories, sources with short paragraphs of text, footer links and links from copyright paragraphs are very dominant in this link profile. Other metrics, such as the nofollow/dofollow ratio, the non-sitewide/sitewide ratio, the ratio of text/image/redirect links, anchor targets, referring IPs, links from country TLDs, class C IPs and the domain TLD distribution, all look fine so far.

So it’s no big surprise that unnatural and suspect links make up a really high share of the total links. Now the hard work starts: a 3-step process to review all websites and the links on them, and to mark the potentially harmful ones so they can be disavowed later with the disavow tool in the Google Search Console.

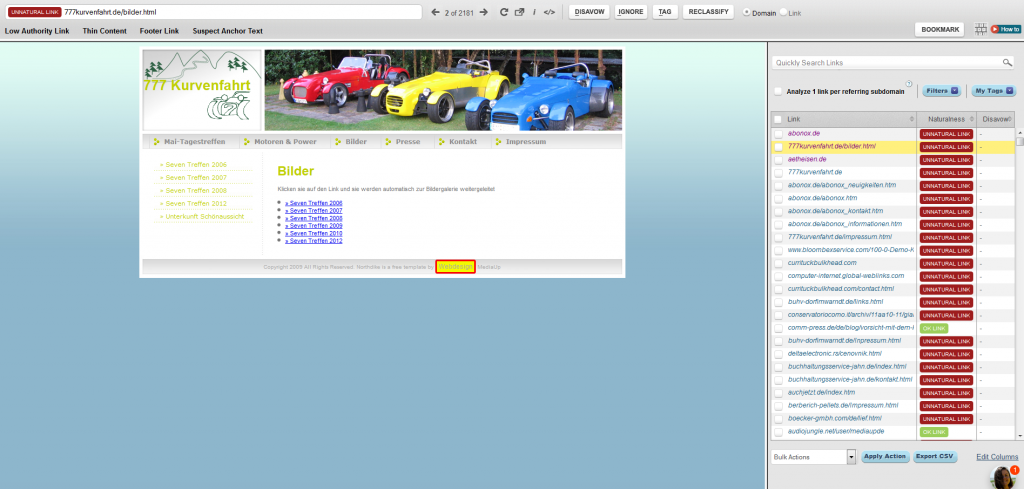

Now I go into the link navigator to review every single site that links to our local web design website. As you can see from the scrollbar, this means a lot of work.

The filtering options and tags are a great help here, especially when you are reviewing really huge link profiles.

Site by site and link by link, mark the unnatural links for disavowal at link or domain level and the good links to keep.

In this case it took me about 4-5 hours to go through 2181 unnatural or suspected links and rate them.

After the first third I had a good impression of why the Google Penguin update hit this website. But I also wondered why it took until the end of 2014 for the website to get penalized.

When I break the link building strategy down to what led to the penalty, it comes down to link building patterns that were used some years ago and worked well at the time.

A lot of footer links from templates were seeded all over the internet for free, along with spammy web catalogues, blog comments with a keyword or keyword combination as the name, hard keyword anchor text links, bookmark links and article directories. Some links came from topically relevant, self-owned domains, but a lot of them came from domains with an irrelevant topic, such as travel, city, health, medicine and so on. There was also a high share of money keywords and hard anchor text links, like webdesign and webdesign agency, together with broad combinations. After finishing the review of all these links I created a disavow file with all the bad links. In fact there is not much left in the link profile, just a few good and natural links.
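For readers who review links outside a tool, here is a minimal Python sketch of how a disavow file could be assembled from the review results. It relies on the plain-text format Google's disavow tool accepts: one entry per line, `domain:` entries for whole domains, full URLs for single pages, and `#` for comments. The function name `build_disavow`, the input format and the stripping of a leading `www.` are assumptions for illustration, and the example URLs are made up.

```python
from urllib.parse import urlparse


def _host(url: str) -> str:
    """Lower-cased hostname without a leading 'www.' (an assumed convention)."""
    return (urlparse(url).hostname or "").removeprefix("www.")


def build_disavow(entries: list[tuple[str, str]]) -> str:
    """Build disavow file content from (url, level) pairs.

    `level` is 'domain' to disavow the whole linking domain,
    anything else disavows only that single URL.
    """
    domains = {_host(url) for url, level in entries if level == "domain"}
    domains.discard("")  # ignore URLs without a parsable hostname

    # Single URLs are redundant when their domain is already disavowed.
    urls = {
        url for url, level in entries
        if level != "domain" and _host(url) not in domains
    }

    lines = ["# Disavow file built from the manual link review"]
    lines += [f"domain:{d}" for d in sorted(domains)]
    lines += sorted(urls)
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    reviewed = [
        ("http://www.spam-directory.example/page1", "domain"),
        ("http://blog.example/post?id=3#comment-9", "link"),
        ("http://www.spam-directory.example/page2", "link"),
    ]
    print(build_disavow(reviewed), end="")
```

Disavowing at domain level keeps the file short and also covers links from that domain that the crawl may have missed, which is why the sketch drops single URLs once their domain is listed.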

But if you get penalized, you have to go all the way to celebrate a comeback in the search engine result pages.

So there is not a big chance that this domain will get its old rankings back, but there is a chance to recover to a certain level and to do some good link building to improve the rankings in the future.

Disclosure

This is not a paid post and cognitiveSEO didn’t make any kind of agreement with the author. This analysis was written and documented by Florian Hieß himself.

About the author

Florian Hieß specialized in topics such as link building, link risk management, backlink audits and penalty recovery during his work and his master’s thesis. He trained himself extensively at work but mostly in his private time, and he has written several case studies about websites that were hit by Google algorithmic penalties or manual actions. He also wrote a book about sustainable link building strategies and risk management.
