There are many attributes that an SEO must have in order to be successful, but one of the most important ones is being willing to improve all the time. Improvement doesn’t always come from getting things right. In fact, the only way you’ll really improve is by failing, over and over again.

However, when it comes to SEO, a mistake might go unnoticed. You might have no idea that you’re doing something wrong. Now, there are thousands of horrible mistakes that you could be making, like not adding keywords to titles or engaging in low-quality link building.

But here are some more subtle, modern mistakes that SEOs might make these days.

To find out the most common modern SEO mistakes, I asked a number of renowned SEO experts the following question:

Can you think of one major SEO mistake that is holding people’s websites back today?

Some of them were kind enough to take the time to share their wisdom with us, so keep reading: there’s top-quality information ahead in this list of 10 SEO mistakes to avoid in 2020 and beyond!

- You Don’t Fix Broken Pages with Backlinks

- You Publish Too Many Poor Quality Pages

- You Have Duplicate Content Issues

- You Target out of Reach Keywords

- You Ignore the Organic Search Traffic You Already Have

- You’re Using Unconfirmed SEO Theories

- You Don’t Consider User Search Intent While Writing Content

- You Don’t Optimize for the Right Keywords & Use Improper Meta Description

- You Over-Complicate Things

- You’re Not Starting with SEO in Mind Early On

1. You Don’t Fix Broken Pages with Backlinks

Broken pages are a major issue for websites, especially when other web pages have backlinks pointing to them. Ignoring broken pages can be a big mistake.

John Doherty, Founder & CEO at Credo, a portal for connecting digital marketing experts with businesses, knows this and marks it as one of the biggest mistakes people make, as well as one of his team’s top priorities when optimizing websites:

> One major SEO mistake that I see holding back websites these days is not fixing their site’s broken pages that have backlinks pointing to them. I work with a lot of very large (100,000+ page) websites, and the first thing I do when we begin our engagement is look at their 404s/410s and which ones of those have inbound external links. We then map out the 1:1 redirects and redirect those. This always shows a good gain in organic search traffic, and then we build on that momentum from there.
>
> John Doherty, CEO at Credo / @dohertyjf

In the answer, John mentions a few things:

Broken pages: First, we have the broken pages. Broken web pages are bad for the internet and, thus, bad for your website.

Why, you ask?

Well, to understand why, we first have to understand what a broken page actually is. A broken page is simply a page that doesn’t exist. You see, it’s not actually the broken page that matters, but the link that’s pointing to it.

A page doesn’t really exist until another page links to it.

To keep things simple, imagine that Google crawls your website starting from the root domain: it crawls https://www.yoursite.com and then looks for links.

Let’s assume that the first link the crawler finds has the anchor text About Us and points to https://www.yoursite.com/about-us/, but the page returns a 404 status code because there’s no resource on the server at that address.

When Google’s crawler finds 404 pages, it wastes time and resources and it doesn’t like it.

In theory, there is an infinite number of 404 pages, since you could type anything after the root domain, but a 404 page doesn’t really take form until some existing page links to it.
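To make that discovery process concrete, here is a minimal Python sketch of a crawler that starts at the root URL, follows same-site `<a href>` links and reports which linked URLs answer with 404 or 410, together with the pages that link to them. It is an illustration under simplifying assumptions, not how Googlebot actually works: `find_broken_links`, `LinkExtractor` and `urllib_fetch` are hypothetical names, and the sketch ignores robots.txt, sitemaps, JavaScript and rate limiting. The fetch function is injectable so the logic can be tried offline.

```python
import urllib.error
import urllib.request
from collections import deque
from html.parser import HTMLParser
from typing import Callable, Dict, List, Set, Tuple
from urllib.parse import urljoin, urlparse

# A fetch function returns (HTTP status code, HTML body).
Fetch = Callable[[str], Tuple[int, str]]


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag in a document."""

    def __init__(self) -> None:
        super().__init__()
        self.links: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def urllib_fetch(url: str) -> Tuple[int, str]:
    """Real network fetch; note that urlopen follows redirects automatically."""
    try:
        with urllib.request.urlopen(url, timeout=10) as resp:
            return resp.status, resp.read().decode("utf-8", errors="replace")
    except urllib.error.HTTPError as err:
        return err.code, ""  # 404, 410, 500, ...
    except urllib.error.URLError:
        return 0, ""  # DNS failure, refused connection, ...


def find_broken_links(root: str, fetch: Fetch = urllib_fetch, max_pages: int = 500) -> Dict[str, List[str]]:
    """Crawl same-host pages breadth-first from root.

    Returns {broken URL: [pages linking to it]} for URLs answering 404 or 410.
    """
    host = urlparse(root).netloc
    queue = deque([root])
    inlinks: Dict[str, Set[str]] = {root: set()}  # URL -> pages that link to it
    statuses: Dict[str, int] = {}
    while queue and len(statuses) < max_pages:
        url = queue.popleft()
        status, html = fetch(url)
        statuses[url] = status
        if status != 200:
            continue  # only parse links on pages that actually exist
        parser = LinkExtractor()
        parser.feed(html)
        for href in parser.links:
            target = urljoin(url, href).split("#", 1)[0]  # drop #fragments
            if urlparse(target).netloc != host:
                continue  # external link: out of scope for this sketch
            if target not in inlinks:
                inlinks[target] = set()
                queue.append(target)
            inlinks[target].add(url)
    return {
        url: sorted(inlinks[url])
        for url, status in statuses.items()
        if status in (404, 410)
    }
```

Returning the linking pages alongside each broken URL matters because, as explained below, it is the link pointing at the 404 that you actually have to fix.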

Broken pages usually occur for one of two reasons:

- You delete a page that has been linked to (broken link due to broken page)

- Someone misspells a URL (broken page due to broken link)

Both the linking website and the linked website containing the 404 suffer. If you have too many broken links on your site, Google will be upset because you’re constantly wasting its resources.

Backlinks: The second point John makes is regarding the backlinks pointing to the broken pages. As previously mentioned, some may occur due to people misspelling a URL, which isn’t your fault.

However, if you have 10 websites that link to one of your pages and you delete that page because you think it’s no longer relevant, then you’re losing the equity that those 10 backlinks were providing. Bad for SEO!

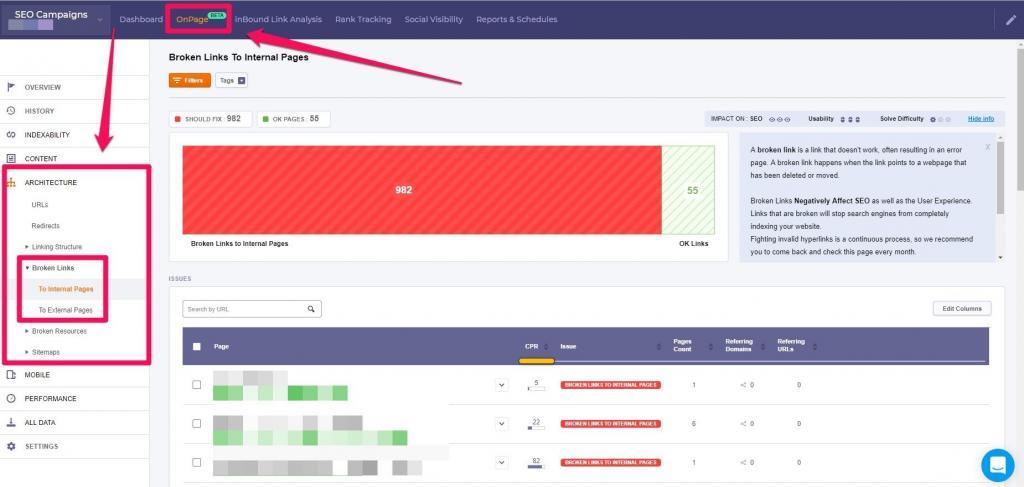

The cognitiveSEO Site Explorer is great for finding out backlinks that point to broken pages on your website:

You can also have internal broken links, as well as external broken links pointing from your website to 404 pages on other sites, and you should fix those too! On December 12, 2018, cognitiveSEO will launch its OnPage module, which you’ll be able to use to find broken internal links, so make sure you check it out!

Big websites: After that, we see that John mentions something about big websites. Why? Pretty simple. It’s easier to mess things up on a big website. On a small website, you might have one or two 404s but they will be easy to spot and very easy to fix.

They might have some backlinks each, but not much is lost. However, when you have hundreds of thousands of pages, that link equity scales up pretty quickly.

Redirects: Lastly, there are the redirects. John tells us that to fix the issue, he always does the proper redirects. By using a 301 redirect from the broken link/page to another page that is relevant, we can pass the link equity from the wasted backlinks.

For maximum effect, don’t just redirect to the homepage, or to some page you want to rank if it isn’t relevant. Instead, redirect to the most relevant page, and then use internal links with relevant anchor texts, surrounded by relevant content, to pass the equity on to more important pages.
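John’s 1:1 redirect mapping can be scripted once a person has picked the most relevant live page for each broken URL. Below is a minimal Python sketch, assuming you already have a backlink count per broken path (for example, exported from a backlink tool) and a hand-made mapping. The function name is hypothetical, and the nginx `location`/`return 301` output is just one possible target; Apache or a CMS redirect plugin would need its own syntax.

```python
from typing import Dict, List, Tuple


def build_redirect_rules(
    broken: Dict[str, int],
    mapping: Dict[str, str],
    min_backlinks: int = 1,
) -> Tuple[List[str], List[str]]:
    """Turn broken paths that have backlinks into nginx 301 rules.

    broken:  broken path -> number of external backlinks pointing at it
    mapping: broken path -> most relevant live path, chosen by a human
    Returns (rules, needs_review), highest backlink count first.
    """
    rules: List[str] = []
    needs_review: List[str] = []
    # Work through the URLs with the most link equity first.
    for path, backlinks in sorted(broken.items(), key=lambda item: (-item[1], item[0])):
        if backlinks < min_backlinks:
            continue  # nothing to recover; leaving a plain 404/410 is fine
        target = mapping.get(path)
        if target is None or target == "/":
            # No relevant page chosen yet, or a lazy redirect to the homepage.
            needs_review.append(path)
            continue
        rules.append(f"location = {path} {{ return 301 {target}; }}")
    return rules, needs_review
```

Sending homepage targets back for review mirrors the advice above: the point is to land the old link equity on a genuinely relevant page, then pass it on through internal links.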

This is the type of SEO fix that can bring visible results almost ‘overnight’. Thanks, John, for this wonderful input!

2. You Publish Too Many Poor Quality Pages

Another issue that is generally related to bigger websites is the ‘thin content’ issue.

> One very common mistake sites make is that they publish way too many poor quality pages on their site. As a result, Google sees the site has a lot of “thin content” and lowers the site’s rankings across the board.
>
> Eric Enge, CEO at Stone Temple / @stonetemple

Although that’s not always the case, when you have a small website, it’s pretty easy to come up with some decent pages. However, when you have a site with thousands of pages, the effort required to have quality content on all of them is a lot bigger.

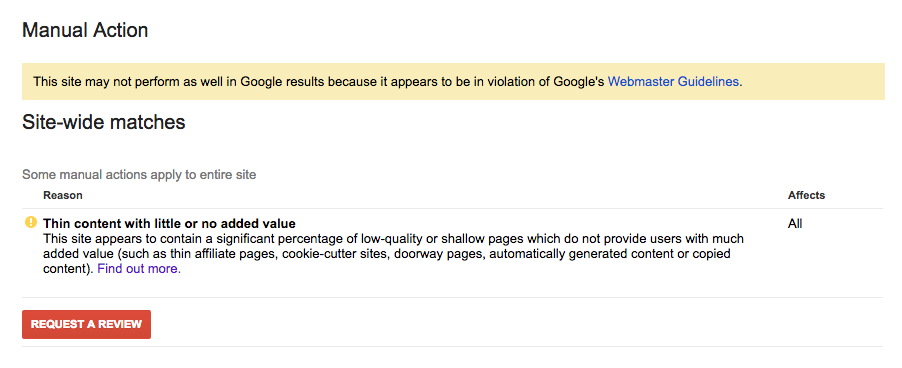

Thin content pages are pages that have no added value to what’s already on the web. Google doesn’t really have a reason to index the site so you’ll either end up in the omitted results or get this message in your Search Console:

The message above is a manual action, which means that you’ll have to submit a review (reconsideration) request, and someone at Google will actually look at your website to determine whether you’ve fixed the issue. This might take a long time, so be careful! It’s also possible that the algorithm ‘penalizes’ your website without any warning, simply by not ranking it.

Matt Cutts, the former Head of Spam at Google puts it like this:

The thing is, fixing thin content doesn’t always mean adding more text. Why am I saying this? Well, Matt Cutts mentions doorway pages as an example but doesn’t really give an alternative to them. What if you do have a website that offers the same service in 1,000 cities? Should you write ‘unique’ content for each page?

The truth is there’s no alternative to doorway pages: they either have thin content or unique, quality content. However, there’s much more than content when it comes to ranking. So if you have a car rental service, you don’t need to add filler content to every page, but you do have to get other things right. Structure the site well, make sure your design is user-oriented and consider having a blog to add relevant content to your website.

I talk more about this in this article about doorway pages alternatives.

However, although it takes a lot of time to add original content to every page, it might be worth a shot if you really want to stand out. Google has absolutely no reason to include you in the search results if the information you’re providing is already there, in exactly the same form.

Again, the cognitiveSEO OnPage Tool, which will be launched on December 12, 2018, can help you identify pages with thin content.
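Word count is only a crude proxy for thin content (as discussed above, the real question is added value, not length), but it is a common first filter when auditing thousands of pages. Below is a minimal Python sketch that counts words in a page’s visible text, skipping `<script>`, `<style>` and `<noscript>`. The 300-word threshold and the function names are arbitrary assumptions for illustration, not a Google rule, and navigation or footer text still counts toward the total.

```python
import re
from html.parser import HTMLParser
from typing import Dict, List


class VisibleText(HTMLParser):
    """Collects text outside <script>, <style> and <noscript> elements."""

    SKIP = {"script", "style", "noscript"}

    def __init__(self) -> None:
        super().__init__()
        self._skip_depth = 0
        self.chunks: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.chunks.append(data)


def word_count(html: str) -> int:
    """Number of word tokens in the page's visible text."""
    parser = VisibleText()
    parser.feed(html)
    return len(re.findall(r"\w+", " ".join(parser.chunks)))


def flag_thin_pages(pages: Dict[str, str], min_words: int = 300) -> List[str]:
    """Return URLs (sorted) whose visible text is shorter than min_words."""
    return sorted(url for url, html in pages.items() if word_count(html) < min_words)
```

Treat the output as a review list: a short page that answers a query well may be fine, while a long page of filler may still be thin.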

Thin content is a very big issue these days, especially for bigger eCommerce websites, and should be treated as such. Thanks, Eric, for this great addition to our list of mistakes!

You see, Google has a singular focus on delivering the most relevant, highest-quality results to its users. Devesh Sharma, founder of DesignBombs, explains how damaging low-quality pages can be for your site:

> The days of churning out low-quality content that adds little-to-no value to Google’s users, in the hopes of ranking high, are long gone. In fact, low-quality content may even get your site penalized. Today, if you wish to rank with the help of content, it must be of the highest possible quality – comprehensive, well-researched, original, and engaging – so that the user completes their search journey satisfied.
>
> Devesh Sharma, Founder of DesignBombs / @devesh

DesignBombs’ strategy has always been quality: they focus on creating detailed, high-quality content on their blog. The results are rewarding and show how valuable high-quality content is.

3. You Have Duplicate Content Issues

Very closely related to thin content pages are duplicate pages, which are even worse. Andy Drinkwater (I know, that’s his real name! Pretty cool, right?) from iQ SEO knows this very well:

> Probably the one that is the most prevalent through the audits that I conduct, is the duplication of pages. This tends to often be done because site owners might have read (or believe) that multiple pages targeting the same or similar phrases, is a good thing. Page duplication/keyword cannibalisation leads to Google seeing a site that is trying too hard with its SEO and is often heavily over-optimized (not something that they want site owners doing).
>
> Andy Drinkwater, Founder at iQ SEO / @iqseo

Duplicate pages can occur due to many factors. For example, one client of mine had a badly implemented translation plugin, which created duplicates of the main language for the pages that did not have any translations. Bad for SEO.

I have to admit, however, that I’m kind of surprised anyone would do this to themselves voluntarily. Although… I did have one client who asked me why his competitor ranked with two pages for the same keyword. Left to his own devices, he might just have duplicated his pages; who knows?

Either way, duplicate content is bad. Not only are you not providing anything of value, which lands those pages in Google’s omitted results, but you’re also wasting Google’s resources, and that might eventually affect your entire website.

I swear I did not cherry-pick these answers, but again, Andy’s input fits perfectly with our soon-to-be-released OnPage module. You’ll be able to use the tool to easily identify which pages are 100% exact copies of others and even which pages only have similar content.
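The two checks mentioned here, exact copies and merely similar pages, can be approximated with standard techniques: a hash of normalized text finds exact duplicates, and Jaccard similarity over overlapping word sequences (“shingles”) finds near-duplicates. Below is a minimal Python sketch of those techniques, not a description of how the OnPage module works. It assumes you have already extracted each page’s main text; the 5-word shingle size, the 0.8 threshold and the function names are assumptions to tune.

```python
import hashlib
import re
from itertools import combinations
from typing import Dict, List, Set, Tuple


def normalize(text: str) -> str:
    """Lowercase and keep only word tokens, so case and punctuation are ignored."""
    return " ".join(re.findall(r"\w+", text.lower()))


def shingles(text: str, k: int = 5) -> Set[str]:
    """All overlapping k-word sequences in the text."""
    words = normalize(text).split()
    return {" ".join(words[i:i + k]) for i in range(max(len(words) - k + 1, 1))}


def jaccard(a: Set[str], b: Set[str]) -> float:
    """Share of shingles two pages have in common (1.0 means identical sets)."""
    union = a | b
    return len(a & b) / len(union) if union else 1.0


def find_duplicates(pages: Dict[str, str], threshold: float = 0.8, k: int = 5):
    """Return (exact duplicate groups, near-duplicate pairs with their scores)."""
    by_hash: Dict[str, List[str]] = {}
    for url, text in pages.items():
        digest = hashlib.sha256(normalize(text).encode("utf-8")).hexdigest()
        by_hash.setdefault(digest, []).append(url)
    exact = sorted(sorted(urls) for urls in by_hash.values() if len(urls) > 1)

    sets = {url: shingles(text, k) for url, text in pages.items()}
    near: List[Tuple[str, str, float]] = []
    # Pairwise comparison is O(n^2); very large sites would use MinHash/LSH instead.
    for a, b in combinations(sorted(pages), 2):
        score = jaccard(sets[a], sets[b])
        if threshold <= score < 1.0:  # exact copies are already reported above
            near.append((a, b, round(score, 2)))
    return exact, near
```

A case like the badly implemented translation plugin mentioned earlier, where an untranslated page repeats the main-language text, would show up in the exact group.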

Duplicate content is definitely an issue affecting many websites. Thanks, Andy, for sharing this with us!

4. You Target out of Reach Keywords

It’s good to have big dreams, but sometimes dreams that are too big can overwhelm and demoralize you. If you want to be able to lift 250 pounds, you first have to be able to lift 50.

Andy Crestodina from Orbit Media tells us that people should target realistic keywords:

> By far, the most common SEO mistake is to target phrases that are beyond reach. Even now a lot of marketers don’t understand competition. They target key phrases even when they have no chance of ranking. There are a lot of mistakes you can make in SEO and a million reason why a page doesn’t rank. But this is the big one.
>
> Andy Crestodina, Co-Founder at Orbit Media / @crestodina

Competition is often hard to explain to clients, because authority and PageRank are often misunderstood or far too complex. So Andy has come up with a simple system that makes it easier to explain which keywords people should target. In his words:

> Because it’s difficult to explain links and authority, I’ve started using a short-hand way to validate possible target phrases:
>
> - If you have a newer, smaller or non-famous website, target five-word phrases.
>
> - If you’re relevant in your niche, but not a well-known brand, target four-word phrases.
>
> - If you’re a serious player with a popular site, go ahead and target those three-word phrases.
>
> Here’s a chart that helps make that recommendation…

(Chart source: Orbit Media)
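Andy’s rule of thumb is easy to apply to an existing keyword list. Below is a minimal Python sketch; the tier labels and the function name are hypothetical, and it reads “target five-word phrases” as “at least five words”, which is an interpretation rather than part of Andy’s advice.

```python
from typing import Dict, List

# Hypothetical tier labels mapped to the minimum phrase length Andy suggests.
MIN_WORDS: Dict[str, int] = {
    "new": 5,       # newer, smaller or non-famous website
    "relevant": 4,  # relevant in the niche, but not a well-known brand
    "popular": 3,   # serious player with a popular site
}


def realistic_phrases(keywords: List[str], site_tier: str) -> List[str]:
    """Keep only keywords with at least as many words as the site tier allows."""
    min_words = MIN_WORDS[site_tier]
    return [kw for kw in keywords if len(kw.split()) >= min_words]
```

For example, a new site would keep “seo audit checklist for ecommerce” but drop “seo audit checklist”, which only a popular site should attempt.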

I feel the need to point out that although lower search volume usually correlates with higher word count, that’s not always the case. Many of you probably consider long tail keywords to be keywords with more words in the phrase, but the term ‘long tail’ actually comes from the shape of the search demand graph: a few very popular queries form the ‘head’, while a huge number of less frequent queries form the long ‘tail’.

So, in theory, you can find high search volume keywords with many words, as well as low search volume keywords with few words. Some of these two- or three-word phrases might even have very low competition.

More on the true meaning of long tail keywords can be read here.

However, as Andy stated above, it’s pretty difficult to judge what ‘low competition’ really means in SEO. Since there’s a correlation between low search volume and high word count, targeting longer phrases is a safe bet.

When you’re first starting out, it’s always better to start targeting lower competition keywords and build your way up. Thanks, Andy, for the input and also for the very useful chart!

5. You Ignore the Organic Search Traffic You Already Have

Since we’ve just talked about what keywords you want to rank for, why not talk a little about keywords you’re already ranking for? Cyrus Shepard, former Mozzer and current founder of Zyppy, knows the value of ranking keyword data very well:

> I regularly see websites make the mistake of only optimizing for the traffic they want, and ignoring the traffic they have. Lots of sites perform an “SEO optimization” when they create content: choosing keywords, writing titles, structuring headlines, etc. Sadly, a lot of folks stop there. After you publish and receive a few months of traffic, Google freely gives you a ton of data on how your content fits into the larger search ecosystem. This includes the exact queries people use to find you, which is also an indication of what Google thinks you deserve to rank for. By performing a “secondary optimization” around this real-world data, you take guessing out of the equation and take advantage of more targeted opportunities, hopefully leading to more traffic.
>
> Cyrus Shepard, Founder at Zyppy / @CyrusShepard

You know… you probably have no idea what keywords you’re actually ranking for. You’re probably thinking “I already rank for them, why should I care?”

Well… the truth is that you might get some traffic from keywords you already rank for, but not all of it. If you’re getting 5 visits from a keyword that has 100 monthly searches, you’re probably not in first position, because the average CTR for the first position is around 30%.

Either you’re in position 5 or below, or your CTR is really bad and you won’t last long in the top spots. If you’re in position 5 or lower, there’s still room for improvement.
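The arithmetic behind that reasoning, using the example figures above and the roughly 30% first-position CTR cited here (an average, not a guarantee for any single keyword):

$$
\mathrm{CTR} = \frac{\text{clicks}}{\text{searches}} = \frac{5}{100} = 5\%
\qquad\text{vs.}\qquad
\text{expected clicks at position 1} \approx 100 \times 0.30 = 30
$$

Getting 5 of roughly 30 expected clicks is what points to either a lower position or an unusually weak search snippet.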

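You can monitor the keywords you’re already ranking for in Google Search Console, whose Performance report lists the queries your pages appear for, along with their clicks, impressions, CTR and average position. Your website analytics (for example, Google Analytics, which you can add to your site through Google Tag Manager) then shows what those visitors do once they land.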
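Cyrus’s “secondary optimization” starts by filtering that query data for terms where you already rank, but not at the top. Below is a minimal Python sketch, assuming you’ve exported query rows with clicks, impressions and average position; the `QueryRow` field names are our own, not Search Console’s column headers, and the position 5 to 20 window and 100-impression floor are arbitrary assumptions.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class QueryRow:
    query: str
    clicks: int
    impressions: int
    position: float  # average position; 1.0 is the top result

    @property
    def ctr(self) -> float:
        return self.clicks / self.impressions if self.impressions else 0.0


def secondary_opportunities(
    rows: List[QueryRow],
    min_impressions: int = 100,
    min_position: float = 5.0,
    max_position: float = 20.0,
) -> List[QueryRow]:
    """Queries you already rank for, but not at the top, biggest demand first."""
    picks = [
        r for r in rows
        if r.impressions >= min_impressions and min_position <= r.position <= max_position
    ]
    return sorted(picks, key=lambda r: r.impressions, reverse=True)
```

The queries at the top of the result are the ones where working the exact phrase into a page’s title, headings or copy is most likely to pay off.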

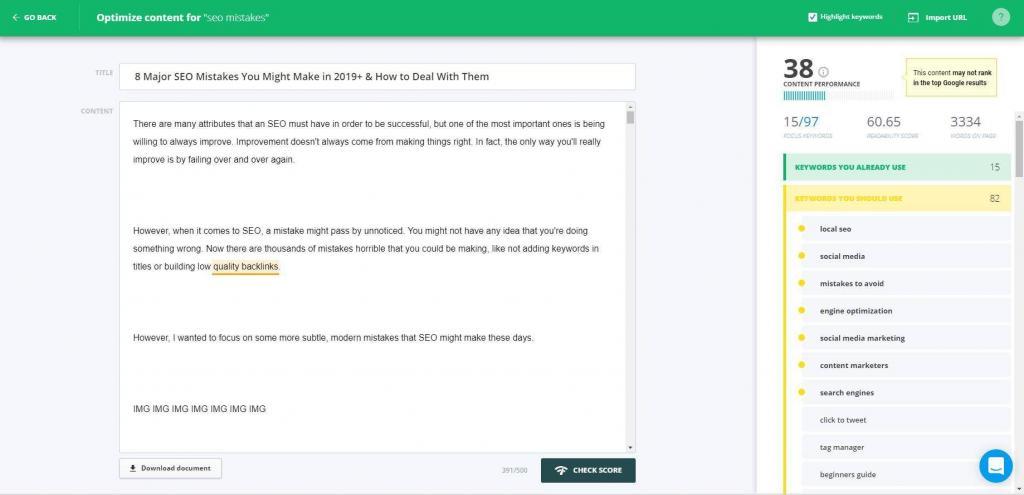

You can also use the CognitiveSEO Content Optimization Tool to easily identify terms which you should add to your content to make it more relevant for specific keywords:

Cyrus talks more about this here. Make sure you give it a good read! Cyrus, thanks so much for sharing your wisdom with us!

6. You’re Using Unconfirmed SEO Theories

If your SEO moves rely on unconfirmed theories, it’s quite likely that you won’t rank high anytime soon.

Josh Bachynski, science freak and renowned Google stalker, is very passionate about this. He recommends that people take a more scientific approach to search engine ranking factors because, in the end, it’s an algorithm:

> The biggest mistake people are making in SEO these days is in not using scientific methods to determine their theories about ranking factors and instead just wild guesses which of course are not as good and eventually will be completely off and they won’t be able to rank and have no idea why.
>
> Josh Bachynski, Vlogger on YouTube / @joshbachynski

The point Josh is trying to make is that you should always test your SEO methods before you actually implement them and you should test them the right way.

One good example is the content pruning technique. We tried it and it appeared to work: our organic search rankings started to rise. However, the test was not isolated, so it wasn’t really scientific. We don’t know whether the increase in organic search traffic was due to the content pruning or to other factors, because it happened over a longer period of time.

This can be said about other tests, as well. Most of the time people approach search engine optimization from multiple angles at the same time and it really is difficult to attribute growth to only one factor. You can read more about the content pruning technique here.

He also advises against trusting Google too much. I tend to agree. Here’s one example: “social media isn’t a ranking factor”. Take that at face value and you might completely neglect your social media marketing strategy. However, if you also consider that social media can bring your website some backlinks, you might reconsider. Sure, blindly posting on Facebook every day while getting 0 likes is a waste of time, but get some engagement going and you’ll see how it can give you a boost.

You can check out Josh’s YouTube channel for more on his scientific approach and how he determines ranking factors. It’s also kind of funny to watch him keep poking Google officials with a stick.
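One way to make a test like the content pruning one more scientific is a split test: randomly divide comparable pages into a test group and a control group, apply the change only to the test group, then check whether the difference in traffic change between the groups is bigger than chance alone would explain. Below is a minimal Python sketch using a permutation test. The function names, seed values and 10,000 rounds are illustrative assumptions, and a real SEO split test also needs enough pages, similar page templates and a long enough time window.

```python
import random
from statistics import mean
from typing import List, Sequence, Tuple


def split_pages(urls: Sequence[str], seed: int = 42) -> Tuple[List[str], List[str]]:
    """Randomly assign pages to (test, control) groups of (nearly) equal size."""
    rng = random.Random(seed)
    shuffled = sorted(urls)  # sort first so the split depends only on the seed
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]


def relative_change(before: float, after: float) -> float:
    """Relative traffic change for one page, e.g. 100 -> 120 clicks gives 0.2."""
    return (after - before) / before if before else 0.0


def permutation_test(
    test: Sequence[float],
    control: Sequence[float],
    rounds: int = 10_000,
    seed: int = 0,
) -> Tuple[float, float]:
    """Return (observed difference in mean change, two-sided p-value)."""
    rng = random.Random(seed)
    observed = mean(test) - mean(control)
    pooled = list(test) + list(control)
    n = len(test)
    extreme = 0
    for _ in range(rounds):
        rng.shuffle(pooled)  # reshuffle group labels as if the change did nothing
        diff = mean(pooled[:n]) - mean(pooled[n:])
        if abs(diff) >= abs(observed):
            extreme += 1
    return observed, extreme / rounds
```

A small p-value says the test group’s lift is unlikely to be random noise; because both groups live through the same algorithm updates and seasonality, those outside factors largely cancel out.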

7. You Don’t Consider User Search Intent While Writing Content

Shane Barker, CEO at Shane Barker Consulting, believes that one big SEO mistake is not thinking about user search intent:

> One of the biggest mistake I have seen marketers make is to not consider user search intent while writing content. You can find hot industry topics and write good content, but it will all be in vain if it’s not targeted at the right people and is not useful to them.
>
> Shane Barker, CEO at Shane Barker Consulting / @shane_barker

So, when you do your keyword research or conduct your next SEO audit, check the user intent and the kind of content they expect when they search for a particular keyword. This will help you write quality content that is useful to your readers and optimize your existing content. This, in turn, will improve your search rankings.

8. You Don’t Optimize for the Right Keywords & Use Improper Meta Description

When it comes to on-site SEO, the most basic elements are keyword research and content optimization.

When doing keyword research, you need a good strategy: select relevant, high-volume keywords based on their type (branded, informational, commercial), then look at your competitors to see what approach they follow and how they structure their content.

Afterwards, plan a content structure that beats the competition, and write content that matches the type of keyword. For example, if you have a branded keyword, write branded content. Apply the same strategy for informational and commercial keywords.

Make sure to properly optimize your images, meta description and URL, and follow the user intent so that your post shares all the valuable information.

It is important to choose the right keywords. Lots of people make the mistake of choosing a keyword that’s irrelevant to what they’re trying to write about, or don’t even bother to optimize the content.

Jitendra Vaswani, founder of BloggersIdeas.com, says that the big SEO mistake most marketers make is improper meta description usage and incorrect keyword optimization:
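A first pass at sorting a keyword list into the three types above can be automated with modifier words. Below is a minimal Python sketch; the modifier lists, brand terms and function name are assumptions you would adapt to your niche, and a human should still confirm the intent by looking at what actually ranks for each keyword.

```python
from typing import Iterable

# Assumed modifier lists; adapt them to your niche and language.
COMMERCIAL = {"best", "buy", "price", "pricing", "cheap", "review", "reviews", "vs", "discount", "deal"}
INFORMATIONAL = {"how", "what", "why", "guide", "tutorial", "tips", "ideas", "examples"}


def classify_keyword(keyword: str, brand_terms: Iterable[str]) -> str:
    """Label a keyword as branded, commercial, informational or unclassified."""
    kw = keyword.lower()
    if any(brand.lower() in kw for brand in brand_terms):
        return "branded"  # a brand mention wins over every other signal
    words = set(kw.split())
    if words & COMMERCIAL:
        return "commercial"  # checked first, so "how to buy ..." counts as commercial
    if words & INFORMATIONAL:
        return "informational"
    return "unclassified"
```

The order of the checks is a design choice: a query that mentions both buying and learning usually has commercial intent, so the commercial check runs before the informational one.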

> Many times I have seen SEO experts never focus on right keywords for their website, they have generic Keywords which never gives them any customer, these keywords only bring free visitors. Focusing on keywords which can bring targeted leads is where your website will start having higher conversion rates. Also using of improper meta description hurt your ranking on search engines, so why no optimize with proper keywords & user intent.
>
> Jitendra Vaswani, Founder of BloggersIdeas.com / @JitendraBlogger
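The basics discussed in this section (keyword in the title, a meta description that exists and mentions the keyword, a descriptive URL) are easy to check in bulk. Below is a minimal Python sketch; `PageMeta` and `audit_page` are hypothetical names, simple substring matching stands in for real relevance analysis, and the 160-character limit is a common rule of thumb for snippet truncation, not an official Google limit.

```python
import re
from dataclasses import dataclass
from typing import List


@dataclass
class PageMeta:
    url: str
    title: str
    meta_description: str


def audit_page(page: PageMeta, keyword: str, max_description: int = 160) -> List[str]:
    """Return a list of basic on-page issues for one page and its target keyword."""
    issues: List[str] = []
    kw = keyword.lower()
    if kw not in page.title.lower():
        issues.append("keyword missing from title")
    description = page.meta_description.strip()
    if not description:
        issues.append("meta description missing")
    else:
        if len(description) > max_description:
            issues.append(f"meta description longer than {max_description} characters")
        if kw not in description.lower():
            issues.append("keyword missing from meta description")
    # Split the URL on hyphens, slashes and underscores to get its slug words.
    slug_words = set(re.split(r"[-/_]+", page.url.lower()))
    if not set(kw.split()) <= slug_words:
        issues.append("keyword words missing from URL")
    return issues
```

A homepage whose title is only the brand name and which has no meta description would fail the first three checks at once.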

9. You Over-Complicate Things

On a completely different note, many people over-complicate things when it comes to search engine optimization. This is especially true for beginners or startups that want high organic search rankings in record time.

Although Josh is right that you should test your theories and choose your techniques wisely, most of the time it’s better to stick to the basics.

Kevin Gibbons, CEO at Re:Signal knows this very well and points it out in his answer:

> The biggest mistake I find people making in SEO is that they over-complicate things. SEO can be very simple. Start with what’s the goal and find the easiest way to hit your target. If you start with identifying the real opportunity, you won’t go far wrong – but quite often people are doing what they think is best, without understanding what question they are answering. e.g. we just need more links… that might be correct, but first identify why – as it might not be the real problem to solve.
>
> Kevin Gibbons, Founder & CEO at Re:Signal / @kevgibbo

Kevin makes a really good point. I recently had a client who told me about her previous local SEO expert and his work. I was shocked! Over 100 backlinks built in the past 6 months (that’s a lot for the Romanian market), while the title of the homepage was still only the brand name, written in capital letters. Literally no page on the website was targeting any keywords in its title! Unforgivable!

Thanks, Kevin, for the answer and for the awesome presentation you gave at the 2018 WeContent Content Marketers’ Conference in Bucharest, Romania.

On the same note we have Aleyda Solis, international SEO speaker and consultant with astonishing results:

> From SEOs: Overlooking the SEO pillars while trying to chase specific algorithm updates. I see many putting so much effort trying to identify the factors behind some of the latest updates that might have affected them while overlooking that at the end is about addressing the principles and fundamentals that will help them grow in the long-run: From relevance, organization and format of information to better addressing the targeted queries to user satisfaction, … It’s normal to have the need to keep updated and trying to identify potential causes that could potentially affect our SEO processes, but at the same time we should avoid getting obsessed with them and falling into the “can’t see the forest for the trees” situation.
>
> Aleyda Solis, CEO at Orainti / @aleyda

SEO is an ever-changing field, and you have to constantly keep an eye on major updates such as the mobile-first index update. However, these updates address a real issue: most websites aren’t mobile-friendly, and most users now use mobile devices to perform their searches.

But constantly running after the next update, shifting your strategy 180 degrees or abandoning the essentials to pursue some rather unsure things won’t do any good.

Although it’s good to stay up to date (and you should), it’s a better idea to base the foundation of your strategy on the long-lasting basics of SEO.

Aleyda was kind enough to give us two answers so keep reading:

10. You’re Not Starting with SEO in Mind Early On

Big words of wisdom here from Aleyda. I kept this one for the end because it’s so true and many SEO specialists will resonate with it. Most of the time, it’s not even the SEOs that make the biggest SEO mistakes, but the clients themselves:

> From other specialists (developers, designers, copywriters) as well as business owners: Implementing certain actions thinking that SEO “can wait” to be included later on in the process, without taking into consideration than doing it so might mean to having to “re-do” the whole project sometimes just because there wasn’t a timely validation in the first place, that can help to save so much in the long-run.
>
> Aleyda Solis, CEO at Orainti / @aleyda

SEO can often be postponed because it seems like other things are more important. Many people build their website with “I’ll start SEO later” in mind, just to wake up to the reality that their website is built completely wrong and major changes are required in order to make it SEO friendly.

Business owners would rather hire PPC experts than content marketers. They only ask an SEO consultant for their opinion after most of the website’s development has been done.

For example, this study found that ‘load more’ buttons convert better than pagination in eCommerce. Read it and you might immediately want to switch to ‘load more’ buttons, or build your website that way from the beginning. However, you might completely overlook the fact that you’d basically be removing hundreds of pages from your site and hiding their content behind JavaScript that Google may never see. Good for conversion, but bad for SEO.
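If you do use a ‘load more’ button, one common approach is to keep plain paginated URLs linked with ordinary `<a href>` elements and let the button enhance them, since crawlers generally follow links rather than clicking buttons. Below is a minimal Python sketch that checks whether a page’s raw (unrendered) HTML exposes such links. It assumes pagination uses a `?page=N` query parameter, and `crawlable_page_links` is a hypothetical name.

```python
from html.parser import HTMLParser
from typing import List
from urllib.parse import parse_qs, urljoin, urlparse


class AnchorCollector(HTMLParser):
    """Collects href values from <a> tags; buttons and onclick handlers are ignored."""

    def __init__(self) -> None:
        super().__init__()
        self.hrefs: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)


def crawlable_page_links(html: str, base_url: str, page_param: str = "page") -> List[str]:
    """Return absolute paginated URLs reachable through plain <a href> links."""
    parser = AnchorCollector()
    parser.feed(html)
    pages = set()
    for href in parser.hrefs:
        url = urljoin(base_url, href)
        if page_param in parse_qs(urlparse(url).query):
            pages.add(url)
    return sorted(pages)
```

An empty result for a category page that clearly has more products is a sign that the extra items are reachable only through JavaScript.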

If you want to see good results with SEO and also minimize costs, you should start with SEO as soon as you start developing your website. Otherwise, things will only be harder and they will take more time.

Thanks, Aleyda, for sharing your experience with us!

Conclusion

If you’re an SEO or a digital marketing professional and you’re making any of these mistakes, take action immediately to fix them, as they affect your websites dramatically! Hopefully, this list of common SEO mistakes to avoid in 2020 and onward will be useful to you and your team.

What SEO mistakes have you made so far? Share them with us in the comments section so that we can all learn from them!


A major mistake I believe people make is that they start building spammy links and don’t keep an eye on their links or on how each website is linking to them. Some websites link more than once, and that is very hazardous for the website’s SEO. On the other hand, many people have a habit of copy-pasting the same content everywhere to get quick rankings. Even many agencies do it. I still remember we lost a client because we asked him for some time on the SEO project, since it takes time to build sustainable natural links. Unfortunately, he went to another agency, which used spam and posted a single article in 40 places, resulting in fast rankings… but the website got banned within 4 months.

Yes, it’s very common for agencies not to tell their clients about the risks! They promise the client the sky and the moon in order to make the sale… they lie. I have an upcoming article about how to overcome objections when trying to sell SEO services, so stay tuned! 🙂 Thanks for sharing your experience with us, Rachel!

Very good compilation of major SEO mistakes. Some of them have made me rethink my website.

I’d like to add that one of the major mistakes everyone makes, which affects SEO as well as overall marketing, is not having a clear and unique Online Value Proposition.

This helps you to find different ways to offer your services and the possibility to rank for broader terms related to your main one.

Great work. Congrats!

Glad you liked it! Yes, lacking a value proposition and an overall strategy can be devastating in the long run. If you don’t know exactly what you’re doing, what you want to achieve and how you’re going to achieve it, things will be a lot more difficult.

Awesome blog! People nowadays ignore these small topics, which are very important to take notice of.

Here are some more important mistakes:

1. Forgetting about local searches

2. Mistakes with 301 and 302 redirects

3. Causing poor load times with an overloaded website

4. Not focusing on relevant content

Gotta be honest, I’m always shocked when I read that even in 2018 people are still fighting duplicate content issues.

I feel you David, but most of the time it’s either eCommerce or platform technical issues. Many new webmasters have no idea.

I’ve seen time and again websites with 1500+ words per page outrank almost every national competitor, even some with almost identical link profiles. I think having every page be a quality page has the opposite effect of poor-quality pages devaluing the entire site: it seems to heighten the overall value of the site.
